CUDA Spotlight: GPUs for Signal Processing in MATLAB

This week's Spotlight is on Brian Fanous of MathWorks. Brian is a senior engineer in the signal processing and communications group.

MathWorks is the leading developer of technical computing software, best known for the MATLAB® and Simulink® products.

This interview is part of the CUDA Spotlight Series.

Q & A with Brian Fanous

NVIDIA: Brian, what is your role at MathWorks?

Brian: I am an engineer in the signal processing area at MathWorks. I am responsible for GPU acceleration of the various products in that area.

NVIDIA: What trends are you seeing in signal processing and communications?

Brian: One of the main things we are seeing in all areas is increased complexity. Current FPGAs, DSPs, and GPUs have enough compute power that engineers can solve much bigger problems much faster than we could previously. That’s a bit general, but it means that theoretical algorithms people came up with 30 years ago are now feasible to deploy. We see this in a variety of signal processing areas.

“Software in everything” is another trend we see and talk about at MathWorks. Those complex algorithms are everywhere – in smartphones, automobile collision avoidance systems, medical equipment, etc. That’s in part because the computational power available to engineers has increased, but also because these systems have become very power efficient.

NVIDIA: What sort of signal processing problems can be solved with MATLAB?

Brian: MATLAB is used in a wide range of signal processing areas. We provide toolboxes that extend MATLAB to enable more productive development in different areas. For example:

- Communications System Toolbox™ allows engineers to simulate wireless communication systems at the physical layer and evaluate system performance.

- Phased Array System Toolbox™ facilitates sensor array design and system simulation. It can model things like radar for UAV (unmanned aerial vehicle) design, sonar and other array processing systems.

- Computer Vision System Toolbox™ enables the development of vision processing algorithms for tasks including object detection, recognition and tracking.

- Supporting each of these are DSP System Toolbox™ and Signal Processing Toolbox™, which are the building blocks for signal processing design in application areas as far-ranging as audio, medical and finance.

NVIDIA: How are you using GPUs to accelerate the algorithms?

Brian: We've enabled GPU computing in three of the toolboxes: Communications System Toolbox, Phased Array System Toolbox, and Signal Processing Toolbox.

This support relies on the Parallel Computing Toolbox™, which equips MATLAB with GPU support for basic math and linear algebra. These toolboxes provide GPU acceleration of complex and time-consuming algorithms such as Turbo decoding for wireless communications, clutter modeling for radar, and cross correlation for signal processing. The toolboxes use CUDA and other tools to accelerate computations on NVIDIA GPUs.

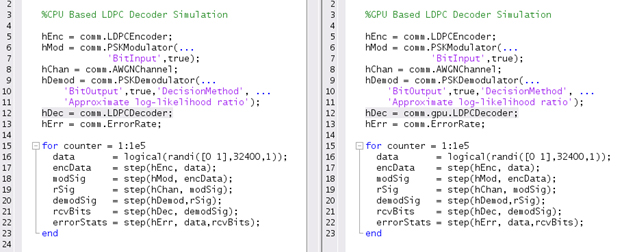

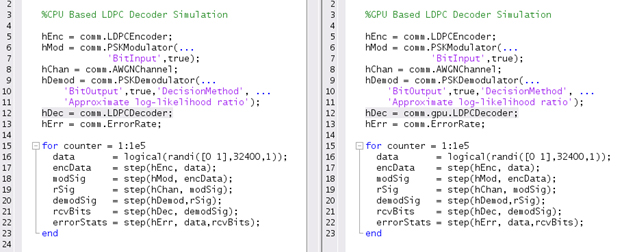

Generally just a few lines of MATLAB code need to be changed to enable GPU acceleration. We've seen speedups of 30x for wireless simulation and over 100x for radar systems.

NVIDIA: Why is GPU computing a good fit for radar simulations?

Brian: As an example, one of the more computationally intense problems in radar system simulation is modeling clutter, which is the noise in the received signal that results from undesirable ground reflections. This kind of computational problem proved to be a great fit for GPUs.

In a clutter simulation, ground reflections are modeled using a grid of patches between each combination of transmit and receive antennas. Increasing the number of antennas, or increasing the simulation precision with more ground patches, results in more computation.

So there is a huge amount of parallelism available in this problem. Running a high-precision simulation on a CPU is very slow. We have successfully accelerated both small- and large-scale clutter simulations using NVIDIA GPUs. Simulating these systems in minutes or seconds rather than days allows radar engineers to focus their efforts on design rather than simulation strategies.
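Editor's illustration: the scaling Brian describes can be sketched in a few lines of Python. This is not the Phased Array System Toolbox clutter model. The function names, the flat-ground geometry, and the simple phase-sum reflection are all assumptions chosen only to show that each (transmit antenna, receive antenna, patch) contribution can be computed independently of the others, which is the property that suits a GPU.

```python
import cmath
import math


def clutter_work_items(n_tx, n_rx, n_patches):
    """Number of independent path evaluations in this toy model."""
    return n_tx * n_rx * n_patches


def toy_clutter_return(tx_pos, rx_pos, patches, wavelength):
    """Sum toy reflections from every ground patch for one tx/rx pair.

    tx_pos, rx_pos: (x, y, z) antenna positions (hypothetical layout).
    patches: list of ((x, y), reflectivity) with patches on the z = 0 plane.
    Each loop iteration is independent, so the work could be spread
    across many parallel threads and reduced with a sum at the end.
    """
    total = 0j
    for (px, py), reflectivity in patches:
        ground = (px, py, 0.0)
        # Path length: transmitter -> patch -> receiver.
        path = math.dist(tx_pos, ground) + math.dist(ground, rx_pos)
        total += reflectivity * cmath.exp(-2j * math.pi * path / wavelength)
    return total


if __name__ == "__main__":
    # Doubling the antennas on both sides quadruples the work.
    print(clutter_work_items(4, 4, 10_000))
    print(clutter_work_items(8, 8, 10_000))
```

In this sketch the work grows with the product of transmit antennas, receive antennas, and patches, so refining the ground grid or enlarging the array multiplies the number of independent evaluations.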

GPUs are so well suited to radar that some companies have begun deploying radar systems running GPUs. In that case, the GPUs are not just part of the simulator; they are the actual system.

NVIDIA: How is MATLAB used in the wireless industry?

Brian: Modern communications systems like 4G LTE use very complex encoding and decoding algorithms to allow a wireless system to tolerate noise. An engineer may need to simulate how a system's bit error rate (BER), a standard measure of performance for data communication, degrades under increasing levels of noise.

Communications System Toolbox enables an engineer to quickly model wireless systems to run these kinds of BER simulations. The analysis requires Monte Carlo simulations with high computational accuracy to model the error rate. Simulation can take days or even weeks on a CPU.
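Editor's illustration: a minimal Monte Carlo BER estimate, written in plain Python rather than with Communications System Toolbox. It assumes uncoded BPSK over an additive white Gaussian noise channel, which is far simpler than the coded LTE links discussed here. The function names and parameters are hypothetical. The closed-form curve is the standard BPSK result and is included only as a sanity check.

```python
import math
import random


def simulate_ber(ebn0_db, n_bits, seed=0):
    """Estimate BER for uncoded BPSK over AWGN by direct simulation."""
    rng = random.Random(seed)
    ebn0 = 10 ** (ebn0_db / 10)
    # Unit-energy symbols: noise variance is N0/2 = 1 / (2 * Eb/N0).
    sigma = math.sqrt(1.0 / (2.0 * ebn0))
    errors = 0
    for _ in range(n_bits):
        bit = rng.getrandbits(1)
        symbol = 1.0 if bit else -1.0
        received = symbol + rng.gauss(0.0, sigma)
        decided = 1 if received > 0 else 0
        errors += decided != bit
    return errors / n_bits


def theoretical_ber(ebn0_db):
    """Closed-form BPSK bit error rate: 0.5 * erfc(sqrt(Eb/N0))."""
    ebn0 = 10 ** (ebn0_db / 10)
    return 0.5 * math.erfc(math.sqrt(ebn0))


if __name__ == "__main__":
    for ebn0_db in (0, 2, 4, 6):
        print(ebn0_db, simulate_ber(ebn0_db, 50_000), theoretical_ber(ebn0_db))
```

The cost grows quickly as the error rate falls: observing on the order of 100 errors at a BER of 1e-6 takes roughly 1e8 simulated bits per noise level, before any decoder work is counted. Each trial is independent, so the trials can be run in parallel.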

We've enabled some of the most computationally intensive parts of a communications system, such as the Turbo, LDPC and Viterbi decoders, to run on the GPU. We've achieved significant acceleration while returning the same answers as obtained from a CPU-based simulation.

NVIDIA: How will MathWorks continue to leverage GPUs in the future?

Brian: Building out a total GPU solution is important to MathWorks and we've benefited from great support from NVIDIA.

In the signal processing area we'll continue to improve our offerings in the Communications and Phased Array System toolboxes and expand to other toolboxes.

Outside of signal processing, other toolboxes have begun adding GPU support, including Neural Network Toolbox™.

We want to put the benefits of GPU computing into the hands of domain experts – the researchers, scientists, and engineers who are used to working in MATLAB. The goal is to allow them to speed their product design or research while focusing on their areas of expertise.

Bio for Brian Fanous

Brian Fanous is a senior engineer in the Signal Processing and Communications area at MathWorks. He is the primary developer of the GPU support for toolboxes in that area. Brian previously worked on the HDL Coder product where he developed HDL IP. He received his M.S. in Electrical Engineering from the University of California, Berkeley. Prior to joining the MathWorks, Brian worked on FPGA and ASIC development in the wireless and radio astronomy areas.

Relevant Links

GPU Computing with MATLAB: www.mathworks.com/discovery/matlab-gpu.html

GPU Programming in MATLAB: www.mathworks.com/company/newsletters/articles/gpu-programming-in-matlab.html

Signal Processing Toolbox: http://www.mathworks.com/products/signal/

Communications System Toolbox: http://www.mathworks.com/products/communications/

Phased Array System Toolbox: http://www.mathworks.com/products/phased-array/

Contact Info

Brian.Fanous (at) mathworks (dot) com