CUDA Spotlight: Valerie Halyo

By Calisa Cole, posted July 22, 2013

GPU Enhancement of the Trigger to Extend Physics Reach at the LHC

This week's Spotlight is on Valerie Halyo, assistant professor of physics at Princeton University. Researchers in the field of high energy physics are exploring the most fundamental questions about the nature of the universe, looking for the elementary particles that constitute matter and its interactions.

At Princeton, Valerie is leading efforts to look for resonances decaying to long-lived neutral particles, which in turn decay to leptonic or heavy-flavor hadronic channels. For certain ranges of the resonance or long-lived particle masses, or of the long-lived particle lifetimes, these particles could have gone undetected in previous experiments.

One of Valerie's goals is to extend the physics accessible at the Large Hadron Collider (LHC) at CERN in Geneva, Switzerland, to include a larger phase space of the topologies that include long-lived particles. This research goes hand in hand with new ideas related to enhancing the "trigger" performance at the LHC.

Valerie works closely with colleagues Adam Hunt, Pratima Jindal, Paul Lujan and Patrick LeGresley.

This interview is part of the CUDA Spotlight Series.

Q & A with Valerie Halyo

NVIDIA: Valerie, what are some of the important science questions in the study of the universe?

NVIDIA: In layman's terms, what is the Large Hadron Collider (LHC)?

Inside the accelerator, two high-energy proton beams travel in opposite directions at close to the speed of light before they are made to collide at four locations around the accelerator ring. These locations correspond to the positions of four particle detectors: ATLAS, CMS, ALICE and LHCb. These detectors record the particles left by debris from the collisions and look for possible new particles or interactions.

The LHC is the best microscope able to probe new physics at 10^-19 m and is also the best "time machine," creating conditions similar to those a picosecond after the Big Bang, at temperatures 8 orders of magnitude higher than at the core of the Sun. Hence, the LHC is a discovery machine that gives us the opportunity to explore the physics hidden in the multi-TeV region.

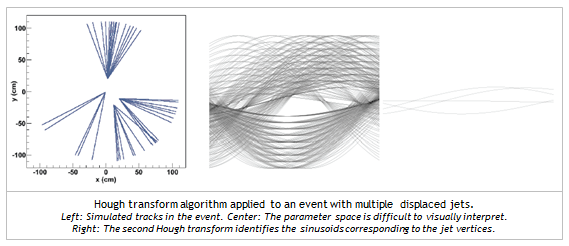

NVIDIA: How can GPUs accelerate research in this field?

(In particle physics, a trigger is a system that rapidly decides which events in a particle detector to keep when only a small fraction of the total can be recorded.)

The Level-1 trigger is based on custom hardware and designed to reduce the rate to about 100 kHz, corresponding to 100 GB/s, assuming an average event size of 1 MB. The High Level Trigger (HLT) is purely software-based and must achieve the remaining rate reduction by executing sophisticated offline-quality algorithms.

GPUs can easily be integrated into the HLT server farm and allow, for example, simultaneous processing of all the data recorded by the silicon tracker as particles traverse the tracker system. The GPUs will process the data at an input rate of 100 kHz and output all the necessary information about the speed and direction of the particles for up to 5000 particles in less than 60 ms. This is more than expected even at design luminosity.

This will allow, for the first time, not only the identification of particles emanating from the interaction point but also the reconstruction of the trajectories of long-lived particles. It will enhance and extend the physics reach by improving the set of events selected and recorded by the trigger.

While both CPUs and GPUs are parallel processors, CPUs are more general purpose and designed to handle a wide range of workloads like running an operating system, web browsers, word processors, etc. GPUs are massively parallel processors that are more specialized, and hence efficient, for compute-intensive workloads.

NVIDIA: When did you first realize that GPUs were interesting for high-level triggering?

It is essentially the "brain of the experiment." Wrong decisions may cause certain topologies to evade detection forever.
Realizing the significant role of the trigger, the current window of opportunity we have during the two years of the LHC shutdown, and the responsibility to enhance or even extend the physics reach at the LHC after the shutdown, led me to revisit the software-based trigger system.

By its very nature as a computing system, the HLT relies on technologies that have evolved extremely rapidly. It is no longer possible to rely solely on increases in processor clock speed as a means of extracting additional computational power from existing architectures. However, it is evident that innovations in parallel processing could accelerate the algorithms used in the trigger system and might have a significant impact.

One of the most interesting technologies, which continues to see exponential growth, is the modern GPU. GPUs make a massive number of parallel execution units highly programmable for general-purpose computing through a supported API (CUDA C), so it was obvious that combining them with the flexibility of the CMS HLT software and hardware computing farm could enhance the HLT performance and potentially extend the reach of the physics at the LHC.

NVIDIA: What approaches did you find the most useful for CUDA development?

In terms of GPU performance, it's a continual process of implementing, understanding the performance in terms of the capability of the hardware, and then refactoring to alleviate bottlenecks and maximize performance.

Understanding the performance and then refactoring the code depends on knowledge of the hardware and programming model, so documentation about details of the architecture and tools like the Profiler are very useful.

As a specific example, the presentation at GTC 2013 by Lars Nyland and Stephen Jones on atomic memory operations was especially useful in understanding the performance of some of our code. It was a particularly good session and I'd recommend watching it online if you weren't able to attend.
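
As an aside, the trigger figures quoted earlier in this interview (a Level-1 output rate of about 100 kHz, an average event size of 1 MB, and tracking results in under 60 ms) can be checked with a few lines of arithmetic. The sketch below is only a back-of-the-envelope illustration: the function and constant names are hypothetical, and reading the 60 ms figure as a per-event latency is an assumption, not something the interview states explicitly.

```python
# Back-of-the-envelope check of the trigger figures quoted in the interview.
# The numeric inputs come from the text; the names below and the reading of
# "60 ms" as a per-event latency are assumptions made for illustration.

L1_OUTPUT_RATE_HZ = 100e3     # Level-1 output rate: about 100 kHz
EVENT_SIZE_BYTES = 1e6        # average event size: 1 MB
PER_EVENT_LATENCY_S = 60e-3   # tracking output in under 60 ms (assumed per event)


def bandwidth_bytes_per_s(rate_hz, event_size_bytes):
    """Data volume per second entering the High Level Trigger."""
    return rate_hz * event_size_bytes


def events_in_flight(rate_hz, latency_s):
    """Average number of events being processed at once (Little's law)."""
    return rate_hz * latency_s


if __name__ == "__main__":
    bw = bandwidth_bytes_per_s(L1_OUTPUT_RATE_HZ, EVENT_SIZE_BYTES)
    n = events_in_flight(L1_OUTPUT_RATE_HZ, PER_EVENT_LATENCY_S)
    print(f"HLT input bandwidth: {bw / 1e9:.0f} GB/s")
    print(f"Events in flight at 60 ms each: {n:.0f}")
```

Under these assumptions, the first figure reproduces the 100 GB/s quoted above, and the second suggests that on the order of 6000 events would need to be in flight across the farm at any moment if each took the full 60 ms, which hints at why massively parallel hardware is attractive here.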
NVIDIA: Describe the system you are running on.

NVIDIA: Is your work in the research stage? What's the next step?

NVIDIA: Beyond triggering, how do you envision GPUs helping to process or analyze LHC data, or to accelerate simulation?

In addition, experiments have to deal with massive production of Monte Carlo data each year. Billions of events have to be generated to match the running conditions of the collisions and the detector at the time the data were taken. Improvements in the speed of reconstruction allow higher production rates and better efficiency of the computing resources, while also saving money.

NVIDIA: What advice would you offer others in your field looking at CUDA?

A great algorithm design isn't very useful if it can't be implemented in an efficient way on modern parallel processors. Parallel processor hardware is all around us in the form of GPUs and multicore CPUs, and there are many online resources to help you get started.

Acknowledgments: Valerie would like to thank her collaborators Adam Hunt, Pratima Jindal and Paul Lujan for their important and significant contributions to the project, and especially Patrick LeGresley for his enthusiasm, insight, fruitful discussions and contributions throughout this project.

Bio for Valerie Halyo

Valerie Halyo has been an assistant professor at Princeton since Feb. 2006. Until the end of 2010 she served as co-manager of the CMS Luminosity project. She led the effort and played a crucial role from the system's inception to the actual delivery of luminosity values to the CMS collaboration, including the normalization and the optimization of the luminosity with the LHC.

The main accomplishments include the design of the online/offline readout luminosity systems, the quantitative analysis of the test beam/collision data, and the development of methods used for extracting the instantaneous luminosity. The sole online relative luminosity system currently in use is still based on the Forward Calorimeter she developed with her group.

She was elected as convener of the di-lepton topology group in the USCMS at the LHC for two years.
She helped develop the technical infrastructure common to various di-lepton analyses, which had to be ready before the LHC collisions.

Currently, Valerie is leading new analysis efforts to look for long-lived particles decaying either leptonically or hadronically. This effort goes hand in hand with new ideas for enhancing the trigger performance at the LHC. The integration of this new technology will extend the scope of the physics reach at the LHC and, in addition, serve to empower students and postdocs with new cutting-edge skills.