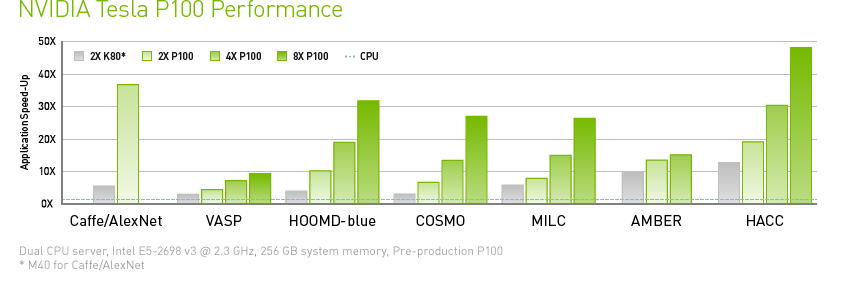


NVIDIA Tesla P100

The World's First AI Supercomputing Data Center GPU

Today's data centers rely on many interconnected commodity compute nodes, which limits the performance of high-performance computing (HPC) and hyperscale workloads. NVIDIA® Tesla® P100 taps into the NVIDIA Pascal™ GPU architecture to deliver a unified platform for accelerating both HPC and AI, dramatically increasing throughput while also reducing costs.

With over 600 HPC applications accelerated—including 15 out of the top 15—and all deep learning frameworks, Tesla P100 with NVIDIA NVLink delivers up to a 50X performance boost.

Tesla P100 is reimagined from silicon to software, crafted with innovation at every level. Each groundbreaking technology delivers a dramatic jump in performance to inspire the creation of the world's fastest compute node.

The NVIDIA Pascal architecture enables the Tesla P100 to deliver superior performance for HPC and hyperscale workloads. With more than 21 teraFLOPS of 16-bit floating-point (FP16) performance, Pascal is optimized to drive exciting new possibilities in deep learning applications. For HPC workloads, Pascal also delivers more than 5 teraFLOPS of double-precision and more than 10 teraFLOPS of single-precision performance.
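As a rough illustration of how the three precision levels relate, the sketch below takes the peak figures listed in the specification table for the NVLink-optimized P100 and computes the throughput ratio between them. The names `PEAK_TFLOPS` and `speedup_over` are hypothetical, and the numbers are peak ratings rather than measured application performance.

```python
# Peak throughput quoted in the spec table for the NVLink-optimized
# Tesla P100, in teraFLOPS. These are peak ratings, not measurements.
PEAK_TFLOPS = {"fp64": 5.3, "fp32": 10.6, "fp16": 21.2}


def speedup_over(baseline: str, precision: str) -> float:
    """Return peak throughput at `precision` divided by peak at `baseline`."""
    return PEAK_TFLOPS[precision] / PEAK_TFLOPS[baseline]


if __name__ == "__main__":
    for precision in ("fp32", "fp16"):
        ratio = speedup_over("fp64", precision)
        print(f"{precision} vs fp64: {ratio:.1f}x")
```

On these figures, each halving of precision doubles the peak rate: FP32 is rated at 2x FP64, and FP16 at 4x FP64.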

Tesla P100 tightly integrates compute and data on the same package by adding chip-on-wafer-on-substrate (CoWoS) with HBM2 technology to deliver 3X more memory performance over the NVIDIA Maxwell™ architecture. This provides a generational leap in time to solution for data-intensive applications.

Performance is often throttled by the interconnect. The revolutionary NVIDIA NVLink high-speed, bidirectional interconnect is designed to scale applications across multiple GPUs by delivering 160 GB/s of interconnect bandwidth, 5X the 32 GB/s of a PCIe x16 link.

Note: NVLink is not available in Tesla P100 for PCIe.
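The 5X figure can be checked against the interconnect bandwidths in the specification table. The sketch below is a back-of-the-envelope calculation only: `transfer_seconds` is a hypothetical helper, both bandwidths are treated as aggregate peak rates, and latency and protocol overhead are ignored, so real transfers will take longer.

```python
# Interconnect bandwidths from the spec table, in GB/s (peak, aggregate).
NVLINK_GBPS = 160.0
PCIE_X16_GBPS = 32.0


def transfer_seconds(size_gb: float, bandwidth_gbps: float) -> float:
    """Idealized time to move `size_gb` gigabytes at a peak bandwidth.

    Assumption: no latency, no protocol overhead, full peak rate sustained.
    """
    return size_gb / bandwidth_gbps


if __name__ == "__main__":
    payload_gb = 16.0  # illustrative payload: one full 16 GB HBM2 memory
    nvlink_s = transfer_seconds(payload_gb, NVLINK_GBPS)
    pcie_s = transfer_seconds(payload_gb, PCIE_X16_GBPS)
    print(f"NVLink:   {nvlink_s:.2f} s")
    print(f"PCIe x16: {pcie_s:.2f} s")
    print(f"Ratio:    {pcie_s / nvlink_s:.0f}x")
```

Under these assumptions, moving 16 GB takes about 0.1 s over NVLink and 0.5 s over PCIe x16, which is the 5X ratio quoted above.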

Page Migration Engine frees developers to focus more on tuning for computing performance and less on managing data movement. Applications can now scale beyond the GPU's physical memory size to virtually limitless amounts of memory.

| | P100 for PCIe-Based Servers | P100 for NVLink-Optimized Servers |
|---|---|---|
| Double-Precision Performance | 4.7 teraFLOPS | 5.3 teraFLOPS |
| Single-Precision Performance | 9.3 teraFLOPS | 10.6 teraFLOPS |
| Half-Precision Performance | 18.7 teraFLOPS | 21.2 teraFLOPS |
| NVIDIA NVLink Interconnect Bandwidth | - | 160 GB/s |
| PCIe x16 Interconnect Bandwidth | 32 GB/s | 32 GB/s |
| CoWoS HBM2 Stacked Memory Capacity | 16 GB or 12 GB | 16 GB |
| CoWoS HBM2 Stacked Memory Bandwidth | 732 GB/s or 549 GB/s | 732 GB/s |
| Enhanced Programmability with Page Migration Engine | | |
| ECC Protection for Reliability | | |
| Server-Optimized for Data Center Deployment | | |
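To put the memory figures in perspective, the sketch below derives two quantities from the table: the idealized time to read the entire HBM2 memory once, and the number of floating-point operations per byte of memory traffic a kernel would need to reach the peak compute rate. The `P100Variant` class and both helper functions are hypothetical, and pairing the 12 GB capacity with the 549 GB/s bandwidth is an assumption based on the order in which the table lists them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class P100Variant:
    """Peak figures for one Tesla P100 configuration, taken from the table."""
    name: str
    fp32_tflops: float
    memory_gb: float
    memory_bandwidth_gbps: float


# Assumption: the 12 GB capacity goes with the 549 GB/s bandwidth.
PCIE_16GB = P100Variant("P100 for PCIe (16 GB)", 9.3, 16.0, 732.0)
PCIE_12GB = P100Variant("P100 for PCIe (12 GB)", 9.3, 12.0, 549.0)
NVLINK = P100Variant("P100 for NVLink", 10.6, 16.0, 732.0)


def memory_sweep_ms(variant: P100Variant) -> float:
    """Idealized time in milliseconds to read the whole memory once at peak."""
    return variant.memory_gb / variant.memory_bandwidth_gbps * 1000.0


def fp32_flops_per_byte(variant: P100Variant) -> float:
    """FP32 operations per byte of memory traffic needed to hit peak compute."""
    return (variant.fp32_tflops * 1e12) / (variant.memory_bandwidth_gbps * 1e9)


if __name__ == "__main__":
    for v in (PCIE_16GB, PCIE_12GB, NVLINK):
        print(f"{v.name}: sweep {memory_sweep_ms(v):.1f} ms, "
              f"{fp32_flops_per_byte(v):.1f} FP32 FLOPs per byte")
```

Kernels that perform fewer operations per byte than this ratio would be limited by memory bandwidth rather than compute, which is why the HBM2 bandwidth matters for data-intensive applications.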

The World's Fastest GPU Accelerators for HPC and Deep Learning.

Find an NVIDIA Accelerated Computing Partner through our NVIDIA Partner Network (NPN).