Reinforcement Learning

A robot learning technique for developing adaptable and efficient robotic applications.

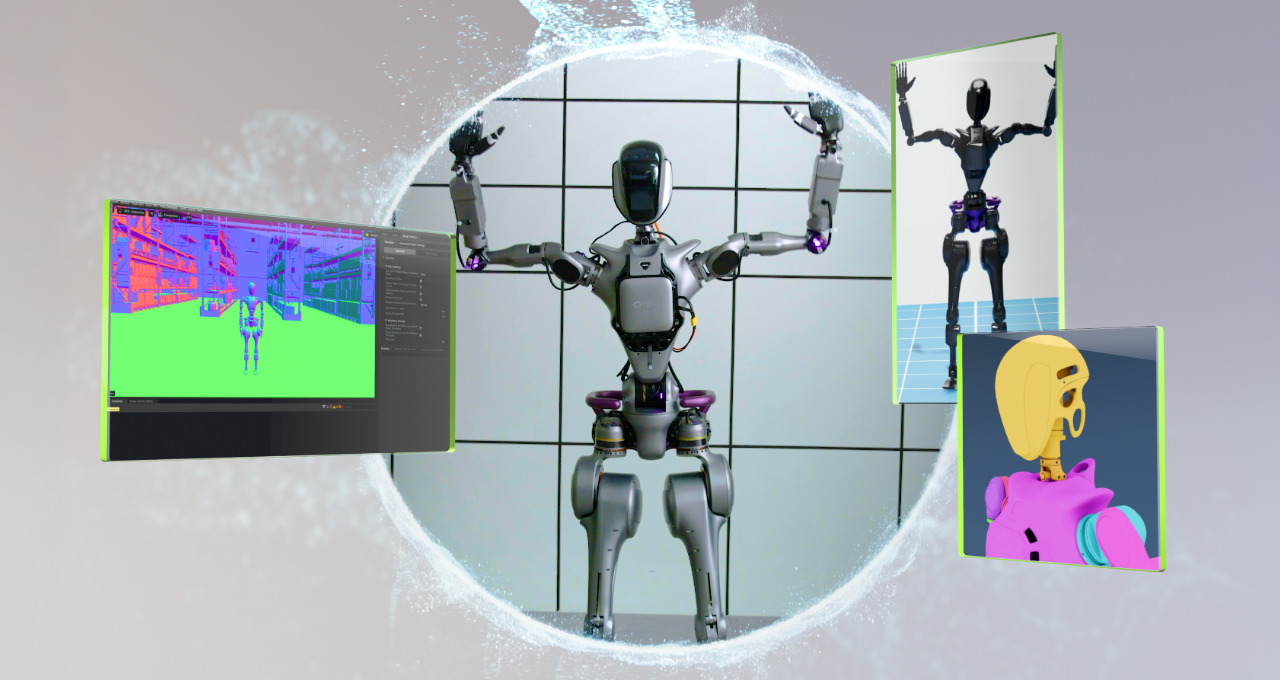

Image Credit: Agility, Apptronik, Fourier, Unitree

Workloads: Robotics

Industries: All Industries

Business Goal: Innovation

Products: NVIDIA AI Enterprise, NVIDIA Isaac GR00T, NVIDIA Isaac Lab, NVIDIA Isaac Sim, NVIDIA Omniverse

Empower Physical Robots With Complex Skills Using Reinforcement Learning

As robots take on increasingly complex tasks, traditional hand-written programming falls short. Reinforcement learning (RL) closes this gap by letting robots learn through trial and error in simulation, improving their skills in control, path planning, and manipulation. Because the robot is rewarded for desirable outcomes rather than scripted step by step, it can keep adapting and develop the sophisticated motor skills needed for real-world automation tasks such as grasping, locomotion, and complex manipulation.
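To make the trial-and-error idea concrete, here is a minimal sketch of reward-based learning using tabular Q-learning on a toy five-cell corridor. The environment, the `step`, `train`, and `greedy_policy` helpers, and all hyperparameters are illustrative assumptions, not part of any NVIDIA product; real robot training uses neural network policies and physics simulation rather than a lookup table.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # index 0 = step left, index 1 = step right


def step(state, action_idx):
    """Toy environment: move one cell; reward 1.0 only on reaching the goal."""
    next_state = min(max(state + ACTIONS[action_idx], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values by repeatedly trying actions and observing rewards."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _episode in range(episodes):
        state = 0
        for _step in range(100):  # cap the episode length
            # Trial: act randomly with probability epsilon, or when estimates tie
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, action)
            # Error: nudge the estimate toward reward + discounted future value
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """Best-known action for each non-goal cell (0 = left, 1 = right)."""
    return [0 if left > right else 1 for left, right in q[:-1]]


q_table = train()
policy = greedy_policy(q_table)
print(policy)
```

After training, the greedy policy should choose "step right" in every cell, even though nothing in the code says the goal is on the right: the behavior emerges from the reward signal alone.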

GPU-Accelerated RL Training for Robotics

Traditional CPU-based training for robot RL often requires thousands of cores for complex tasks, which drives up the cost of robot applications. NVIDIA accelerated computing addresses this challenge with parallel processing that significantly speeds up the handling of sensory data in perception-enabled reinforcement learning environments. This enhances a robot's ability to learn, adapt, and perform complex tasks in dynamic situations.

NVIDIA accelerated computing platforms, including robot training frameworks like NVIDIA Isaac™ Lab, use the GPU for both physics simulation and reward calculation within the RL pipeline. This removes bottlenecks and streamlines training, easing the transition from simulation to real-world deployment.
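The pattern of stepping many environments and computing their rewards together can be sketched as follows. This is not Isaac Lab's API: NumPy arrays stand in for GPU tensors, and the point-mass dynamics, the `step_batch` and `reward_batch` helpers, and the hand-written controller (a placeholder for a learned policy) are all assumptions made for illustration. The point is that state for every environment lives in one array, so a single operation advances or scores all of them at once.

```python
import numpy as np


def step_batch(pos, vel, action, dt=0.02):
    """Advance every environment one step (semi-implicit Euler integration)."""
    vel = vel + action * dt      # the action is treated as an acceleration command
    pos = pos + vel * dt
    return pos, vel


def reward_batch(pos, target):
    """Negative distance to the target, computed for all environments at once."""
    return -np.linalg.norm(pos - target, axis=1)


num_envs = 4096
rng = np.random.default_rng(0)
pos = rng.uniform(-1.0, 1.0, size=(num_envs, 2))   # one 2D point mass per environment
vel = np.zeros((num_envs, 2))
target = np.zeros(2)

initial_reward = reward_batch(pos, target)
for _ in range(200):
    # Hypothetical proportional-derivative controller standing in for a learned policy
    action = 4.0 * (target - pos) - 2.0 * vel
    pos, vel = step_batch(pos, vel, action)
final_reward = reward_batch(pos, target)

print(initial_reward.mean(), final_reward.mean())
```

There is no Python loop over environments anywhere in the simulation or reward code; on a GPU tensor library the same structure lets thousands of environments run in parallel without moving data back to the CPU between the physics step and the reward calculation.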

Quick Links