Cluster analysis is the grouping of objects based on their characteristics such that there is high intra-cluster similarity and low inter-cluster similarity.

Cluster Analysis

What is Clustering?

Cluster analysis is the grouping of objects such that objects in the same cluster are more similar to each other than they are to objects in another cluster. The classification into clusters is done using criteria such as smallest distances, density of data points, graphs, or various statistical distributions. Cluster analysis has wide applicability, including in unsupervised machine learning, data mining, statistics, graph analytics, image processing, and numerous physical and social science applications.

Why Cluster Analysis?

Data scientists and others use clustering to gain insights from data by observing which groups (or clusters) the data points fall into when a clustering algorithm is applied. Clustering is a form of unsupervised learning, a type of machine learning that searches for patterns in a data set with no pre-existing labels and a minimum of human intervention. Clustering can also be used for anomaly detection, to find outliers: data points that are not part of any cluster.
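As a rough illustration of the anomaly detection idea above, the following sketch flags points that have too few close neighbors to belong to any dense group. It is a deliberately simplified density check, not a full clustering algorithm; the function name `low_density_points`, the parameters `eps` and `min_neighbors`, and the toy data are all assumptions made for this example.

```python
from math import dist


def low_density_points(points, eps, min_neighbors):
    """Return points with fewer than min_neighbors other points within eps."""
    outliers = []
    for i, p in enumerate(points):
        # Count the other points that lie within distance eps of p.
        neighbors = sum(
            1 for j, q in enumerate(points) if j != i and dist(p, q) <= eps
        )
        if neighbors < min_neighbors:
            outliers.append(p)
    return outliers


# Hypothetical toy data: four points close together and one far away.
points = [(1.0, 1.0), (1.2, 0.9), (0.9, 1.1), (1.1, 1.2), (8.0, 8.0)]
outliers = low_density_points(points, eps=0.5, min_neighbors=2)
print(outliers)  # prints [(8.0, 8.0)]
```

The pairwise loop is quadratic in the number of points, which is fine for a sketch but would need a spatial index or a GPU-accelerated library for large datasets.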

Clustering is used to identify groups of similar objects in datasets with two or more variable quantities. In practice, this data may be collected from marketing, biomedical, or geospatial databases, among many other places.

How Is Cluster Analysis Done?

It’s important to note that cluster analysis is not the job of a single algorithm. Rather, many algorithms address the task, and they often differ significantly from one another. Ideally, a clustering algorithm creates clusters where intra-cluster similarity is very high, meaning the data points inside a cluster are very similar to one another. The algorithm should also keep inter-cluster similarity low, meaning each cluster is as dissimilar to the other clusters as possible.
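One way to make this goal concrete is to compare the average distance between points in the same cluster with the average distance between points in different clusters. The sketch below does this with Euclidean distance, which is an assumption; the helper names and the toy data are hypothetical and chosen only for illustration.

```python
from itertools import combinations
from math import dist


def mean_intra_cluster_distance(clusters):
    """Average distance between pairs of points that share a cluster."""
    distances = [
        dist(p, q)
        for members in clusters.values()
        for p, q in combinations(members, 2)
    ]
    return sum(distances) / len(distances)


def mean_inter_cluster_distance(clusters):
    """Average distance between pairs of points in different clusters."""
    distances = [
        dist(p, q)
        for a, b in combinations(clusters.values(), 2)
        for p in a
        for q in b
    ]
    return sum(distances) / len(distances)


# Hypothetical toy data: two tight, well-separated groups in 2-D.
clusters = {
    "a": [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0)],
    "b": [(10.0, 10.0), (10.0, 11.0), (11.0, 10.0)],
}

intra = mean_intra_cluster_distance(clusters)
inter = mean_inter_cluster_distance(clusters)
print(f"intra={intra:.2f} inter={inter:.2f}")
```

A good clustering of this data gives a small intra-cluster distance (high similarity within clusters) and a much larger inter-cluster distance (low similarity between clusters).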

There are many clustering algorithms, simply because there are many notions of what a cluster should be or how it should be defined. In fact, more than 100 clustering algorithms have been published to date. They represent a powerful technique for machine learning on unlabeled data. An algorithm designed for a specific type of cluster model will usually fail when set to work on a data set containing a very different kind of cluster model.

The common thread in all clustering algorithms is a group of data objects. But data scientists and programmers use differing cluster models, with each model requiring a different algorithm. Clusterings, or sets of clusters, are often distinguished as either hard clustering, where each object either belongs to a cluster or does not, or soft clustering, where each object belongs to each cluster to some degree.
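The difference between hard and soft clustering can be sketched with two small functions. The hard version returns a single cluster index, and the soft version returns one membership weight per cluster. The weighting used here (a softmax over negative squared distances to fixed cluster centers) is an illustrative assumption, not a specific published algorithm, and the function names and centers are hypothetical.

```python
from math import dist, exp


def hard_assignment(point, centers):
    """Return the index of the single nearest center."""
    return min(range(len(centers)), key=lambda i: dist(point, centers[i]))


def soft_assignment(point, centers):
    """Return one membership weight per center; the weights sum to 1."""
    squared = [dist(point, c) ** 2 for c in centers]
    closest = min(squared)
    # Shift by the smallest value so the nearest center always scores 1.0,
    # which avoids dividing by zero when every distance is large.
    scores = [exp(closest - s) for s in squared]
    total = sum(scores)
    return [s / total for s in scores]


# Hypothetical cluster centers and a point that lies nearer the first one.
centers = [(0.0, 0.0), (4.0, 0.0)]
point = (1.5, 0.0)

label = hard_assignment(point, centers)
weights = soft_assignment(point, centers)
print(label, [round(w, 3) for w in weights])
```

Here the hard assignment commits the point to the first cluster, while the soft assignment still gives the second cluster a small share of the membership.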

This is all apart from so-called server clustering, which generally refers to a group of servers working together to provide users with higher availability and to reduce downtime as one server takes over when another fails temporarily.

Clustering analysis methods include:

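- K-Means partitions the data into k clusters by minimizing the squared distance between each point and the centroid (mean) of its assigned cluster.

- DBSCAN (density-based spatial clustering of applications with noise) groups points that lie in dense regions and treats points in sparse regions as noise.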

- Spectral clustering is a similarity graph-based algorithm that models the nearest-neighbor relationships between data points as an undirected graph.

- Hierarchical clustering groups data into a multilevel hierarchy tree of related graphs starting from a finest level (original) and proceeding to a coarsest level.
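To make the first entry in this list more concrete, here is a minimal K-Means sketch in plain Python. It uses Lloyd's algorithm, the common iterative approach that alternates between assigning points to the nearest center and recomputing each center as the mean of its points. The function signature, the fixed initial centers, and the toy data are assumptions for illustration; production libraries add smarter initialization and many optimizations.

```python
from math import dist


def kmeans(points, initial_centers, max_iterations=100):
    """Lloyd's algorithm: alternate assignment and centroid-update steps."""
    centers = [tuple(c) for c in initial_centers]
    labels = []
    for _ in range(max_iterations):
        # Assignment step: each point joins its nearest center.
        labels = [
            min(range(len(centers)), key=lambda i: dist(p, centers[i]))
            for p in points
        ]
        # Update step: move each center to the mean of its assigned points.
        new_centers = []
        for i, old_center in enumerate(centers):
            members = [p for p, label in zip(points, labels) if label == i]
            if members:
                new_centers.append(
                    tuple(sum(axis) / len(members) for axis in zip(*members))
                )
            else:
                # An empty cluster keeps its previous center.
                new_centers.append(old_center)
        if new_centers == centers:
            break  # Converged: no center moved.
        centers = new_centers
    return centers, labels


# Hypothetical toy data: two obvious groups in 2-D.
points = [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0), (8.0, 8.0), (8.0, 9.0), (9.0, 8.0)]
centers, labels = kmeans(points, initial_centers=[(1.0, 1.0), (9.0, 8.0)])
print(labels)  # prints [0, 0, 0, 1, 1, 1]
```

The assignment step is independent for every point, which is one reason this kind of algorithm maps well onto parallel hardware.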

Clustering Use Cases

With the growing number of clustering algorithms available, it isn’t surprising that clustering has become a staple methodology across a range of business and organizational types, with varying use cases. Clustering use cases include biological sequence analysis, human genetic clustering, medical image tissue clustering, market or customer segmentation, social network or search result grouping for recommendations, computer network anomaly detection, natural language processing for text grouping, crime cluster analysis, and climate cluster analysis. Below is a description of some examples.

- Network traffic classification. Organizations seek various ways of understanding the different types of traffic entering their websites, particularly what is spam and what traffic is coming from bots. Clustering is used to group together common characteristics of traffic sources, then create clusters to classify and differentiate the traffic types. This allows more reliable traffic blocking while enabling better insights into driving traffic growth from desired sources.

- Marketing and sales. Marketing success means targeting the right people or prospects in the right way. Clustering algorithms group together people with similar traits, perhaps based on their likelihood to purchase. With these groups or clusters defined, test marketing across them becomes more effective, helping to refine messaging to reach them.

- Document analysis. Any organization dealing with high volumes of documents will benefit from being able to organize them effectively and quickly as they’re generated. That means being able to understand the underlying themes in each document and compare them with those of other documents. Clustering algorithms examine the text in documents, then group the documents into clusters by theme. That way they can be speedily organized according to actual content.

Data Scientists and Clustering

As noted, clustering is a method of unsupervised machine learning. Machine learning can process huge data volumes, allowing data scientists to spend their time analyzing the processed data and models to gain actionable insights. Data scientists use clustering analysis to gain insights from their data by seeing which groups the data points fall into when they apply a clustering algorithm.

Accelerating Cluster and Graph Analytics with GPUs

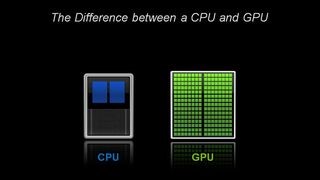

Cluster analysis plays a critical role in a wide variety of applications, but it now faces a computational challenge due to continuously increasing data volumes. Parallel computing with GPUs is one of the most promising ways to overcome this challenge.

GPUs provide a great way to accelerate data-intensive analytics, and graph analytics in particular, because of their massive degree of parallelism and their memory bandwidth advantages. A GPU’s massively parallel architecture, consisting of thousands of small cores designed for handling multiple tasks simultaneously, is well suited for computational tasks of the form “for every X do Y”. This can apply to sets of vertices or edges within a large graph.

Cluster analysis is a problem with significant parallelism and can be accelerated by using GPUs. In the future, the NVIDIA Graph Analytics library (nvGRAPH) will provide both spectral and hierarchical clustering/partitioning techniques based on the minimum balanced cut metric. The nvGRAPH library is freely available as part of the NVIDIA® CUDA® Toolkit. For more information about graphs, please refer to the Graph Analytics page.

GPU-Accelerated, End-to-End Data Science

The NVIDIA RAPIDS™ suite of open-source software libraries, built on CUDA-X AI™, provides the ability to execute end-to-end data science and analytics pipelines entirely on GPUs. It relies on NVIDIA CUDA® primitives for low-level compute optimization, but exposes that GPU parallelism and high-bandwidth memory speed through user-friendly Python interfaces.

RAPIDS’s cuML machine learning algorithms and mathematical primitives follow a familiar scikit-learn-like API. Popular algorithms like K-means, XGBoost, and many others are supported for both single-GPU and large data center deployments. For large datasets, these GPU-based implementations can complete 10-50X faster than their CPU equivalents.

With the RAPIDS GPU DataFrame, data can be loaded onto GPUs using a Pandas-like interface, and then used for various connected machine learning and graph analytics algorithms without ever leaving the GPU. This level of interoperability is made possible through libraries like Apache Arrow, and it allows acceleration of end-to-end pipelines, from data prep to machine learning to deep learning.

RAPIDS cuGraph seamlessly integrates into the RAPIDS data science ecosystem to enable data scientists to easily call graph algorithms using data stored in a GPU DataFrame.

RAPIDS also supports device memory sharing between many popular data science libraries. This keeps data on the GPU and avoids costly copying back and forth to host memory.

Next Steps

Find out about:

- Combining Speed & Scale to Accelerate K-Means in RAPIDS cuML

- Accelerating Single-Cell Genomic Analysis using RAPIDS

- Cluster Analysis

- Applications of Graph Algorithms or Graph Analytics

- Building an Accelerated Data Science Ecosystem: RAPIDS Hits Two Years

- GPU-Accelerated Data Science with RAPIDS | NVIDIA

- RAPIDS Accelerates Data Science End-to-End

- NVIDIA Developer Blog | Technical content: For developers, by developers