A convolutional neural network is a type of deep learning network used primarily to identify and classify images and to recognize objects within images.

Convolutional Neural Network

How Does a Convolutional Neural Network Work?

An artificial neural network is a system of hardware and/or software patterned after the way neurons operate in the human brain. Convolutional neural networks (CNNs) are a variation of the multilayer perceptron, a feedforward network commonly used for classification. They usually stack multiple convolutional layers, each followed by pooling layers or by fully connected layers.

CNNs learn in a way that is loosely analogous to how humans do. People are born without knowing what a cat or a bird looks like. As we mature, we learn that certain shapes and colors correspond to parts, such as paws and beaks, that collectively make up an object. Once we learn what paws and beaks look like, we’re better able to differentiate between a cat and a bird.

Neural networks essentially work the same way. By processing training sets of labeled images, the machine is able to learn to identify elements that are characteristic of objects within the images.

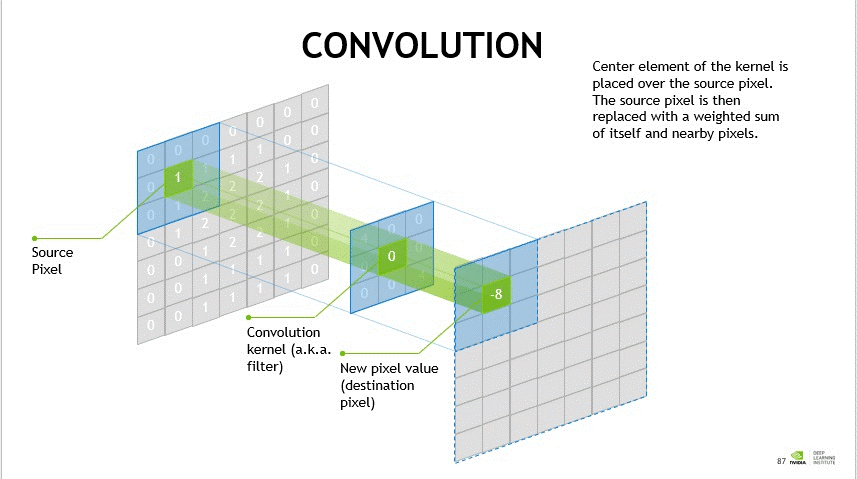

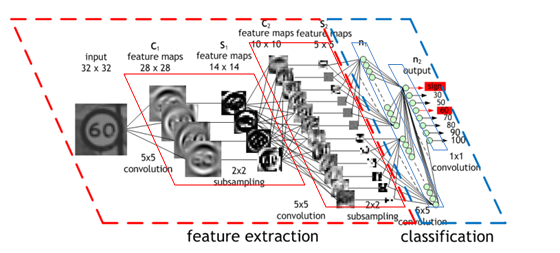

A CNN is one of the most popular types of deep learning algorithms. Convolution is the application of a filter to an input, which results in an activation represented as a numerical value. Repeatedly applying the same filter across an image produces a map of activations called a feature map, which indicates the locations and strengths of the detected features.

A convolution is a linear operation that multiplies a two-dimensional array of weights, called a filter or kernel, with each same-sized patch of the input and sums the products into a single value. If the filter is tuned to detect a specific type of feature in the input, then the repetitive use of that filter across the entire input image can discover that feature anywhere in the image.

For example, one filter may be designed to detect curves of a certain shape, another to detect vertical lines, and a third to detect horizontal lines. Other filters may detect colors, edges, and degrees of light intensity. Combining the outputs of multiple filters can reveal complex shapes that match known elements in the training data.
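The filter-sliding idea described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the function name `convolve2d`, the tiny 4x5 image, and the hand-picked vertical-edge filter are all assumptions made for the example. Like most deep learning frameworks, it does not flip the kernel (strictly speaking, this is cross-correlation), and it uses no padding and a stride of 1.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the filter weights with the patch beneath them and sum the products.
            activation = sum(
                kernel[di][dj] * image[i + di][j + dj]
                for di in range(kh)
                for dj in range(kw)
            )
            row.append(activation)
        feature_map.append(row)
    return feature_map


# Hypothetical grayscale image: dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]

# Hand-picked filter that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # [[3, 3, 0], [3, 3, 0]]
```

The large activations mark the positions where the filter overlaps the edge, and the zeros mark the uniform region. In a trained CNN the filter weights are learned from data rather than chosen by hand.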

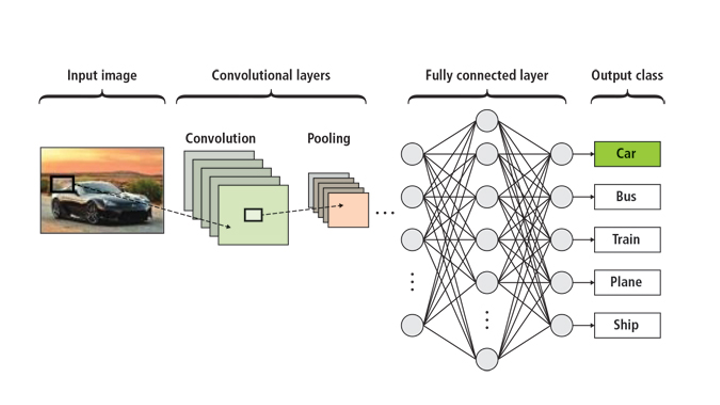

A CNN usually consists of three types of layers: 1) an input layer, 2) an output layer, and 3) hidden layers that include multiple convolutional layers. The hidden layers also contain pooling layers, fully connected layers, and normalization layers.

The first layer is typically devoted to capturing basic features such as edges, color, gradient orientation, and basic geometric shapes. As layers are added, the model fills in high-level features that progressively determine that a large brown blob is first a vehicle, then a car, and then a Buick.

The pooling layer progressively reduces the spatial size of the representation for more efficient computation. It operates on each feature map independently. A common approach is max pooling, in which only the maximum value within each small window of the feature map is kept, reducing the number of values needed for later calculations. Stacking convolutional layers allows the input to be decomposed into its fundamental elements.
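As a rough sketch of max pooling, the snippet below keeps the largest value in each non-overlapping 2x2 window of a single feature map. The helper name `max_pool2d`, the window size, and the sample values are assumptions for illustration only.

```python
def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [
                feature_map[i + di][j + dj]
                for di in range(size)
                for dj in range(size)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled


# Hypothetical 4x4 feature map produced by an earlier convolution.
feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 1],
]

pooled = max_pool2d(feature_map)
print(pooled)  # [[4, 2], [2, 7]]
```

The 4x4 map shrinks to 2x2, so later layers process a quarter of the values while the strongest activation in each region is preserved.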

Normalization layers regularize the data to improve the performance and stability of neural networks. Normalization makes the inputs of each layer more manageable by rescaling them to have a mean of zero and a variance of one.
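The rescaling to zero mean and unit variance can be sketched as follows. This is a simplified illustration under stated assumptions: the function name `normalize` and the small `eps` constant (added to avoid division by zero) are choices made for the example, and the learnable scale and shift parameters that practical normalization layers usually add afterward are omitted.

```python
import math


def normalize(values, eps=1e-5):
    """Rescale `values` to roughly zero mean and unit variance."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    # eps keeps the denominator away from zero when all values are equal.
    return [(v - mean) / math.sqrt(variance + eps) for v in values]


# Hypothetical activations from one layer.
activations = [2.0, 4.0, 6.0, 8.0]
normalized = normalize(activations)

new_mean = sum(normalized) / len(normalized)
new_variance = sum((v - new_mean) ** 2 for v in normalized) / len(normalized)
print(normalized)
print(new_mean, new_variance)  # mean is approximately 0, variance approximately 1
```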

Fully connected layers are used to connect every neuron in one layer to all the neurons in another layer.
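A fully connected layer can be sketched as one weighted sum per output neuron over all of the inputs. The function name `fully_connected` and the weights, biases, and inputs below are made-up values for illustration; in a real network the weights are learned, and a nonlinear activation typically follows.

```python
def fully_connected(inputs, weights, biases):
    """Each output neuron is a weighted sum of every input plus a bias."""
    return [
        sum(w * x for w, x in zip(neuron_weights, inputs)) + b
        for neuron_weights, b in zip(weights, biases)
    ]


# Hypothetical layer: 3 inputs connected to 2 output neurons.
inputs = [1.0, 2.0, 3.0]
weights = [
    [0.5, 0.0, -0.5],  # weights for output neuron 0
    [1.0, 1.0, 1.0],   # weights for output neuron 1
]
biases = [0.0, 1.0]

outputs = fully_connected(inputs, weights, biases)
print(outputs)  # [-1.0, 7.0]
```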

Why Convolutional Neural Networks?

There are three basic types of neural networks:

- Multilayer Perceptrons are good at classification prediction problems using labeled inputs. They’re flexible networks that can be applied to a variety of scenarios, including image recognition.

- Recurrent Neural Networks are optimized for sequence prediction problems using one or more steps as input and multiple steps as output. They’re strong at interpreting time series data but are not considered effective for image analysis.

- Convolutional Neural Networks were specifically designed to map image data to output variables. They’re particularly strong at developing internal representations of two-dimensional images that can be used to learn position-invariant and scale-invariant structures. This makes them especially good at working with data that has a spatial relationship component.

CNNs have become the go-to model for many of the most advanced computer vision applications of deep learning, such as facial recognition, handwriting recognition, and text digitization. They also have applications in recommendation systems. The turning point was in 2012, when Alex Krizhevsky, who was then a graduate student at the University of Toronto, used a CNN to win that year’s ImageNet competition by dropping the classification error record from 26% to 15%, an astounding achievement at the time.

For applications involving image processing, the CNN model has been shown to deliver the best results and the greatest computational efficiency. While it isn’t the only deep learning model that’s appropriate to this domain, it is the consensus choice and will be the focus of continuous innovation in the future.

Key Use Cases

CNNs are the image crunchers that machines now use to identify objects, and they serve as the eyes of autonomous vehicles, oil exploration, and fusion energy research. They can also help spot diseases faster in medical imaging, which can save lives.

AI-driven machines of all types are gaining eyes like ours, thanks to CNNs and RNNs. Many of these applications of AI are made possible by decades of advances in deep neural networks and by strides in high-performance computing on GPUs to process massive amounts of data.

Why Convolutional Neural Networks Matter to You

Data Science Teams

Image recognition has a broad range of applications and needs to be a core competency of many data science teams. CNNs are an established standard that provides a baseline of skills that data science teams can learn and acquire to address current and future image processing needs.

Data Engineering Teams

Engineers who understand the training data needed for CNN processing are a step ahead of the game in supporting their organizations’ requirements. Datasets follow a prescribed format and numerous public datasets are available for engineers to learn from. This streamlines the process of getting deep learning algorithms into production.

Accelerating Convolutional Neural Networks using GPUs

State-of-the-art neural networks can have from millions to well over one billion parameters to adjust via back-propagation. They also require a large amount of training data to achieve high accuracy, meaning hundreds of thousands to millions of input samples will have to be run through both a forward and backward pass. Because neural nets are created from large numbers of identical neurons, they are highly parallel by nature. This parallelism maps naturally to GPUs, which provide a significant computation speedup over CPU-only training.
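To give a feel for how parameter counts grow, the sketch below counts the trainable weights and biases in a single convolutional layer and in a single fully connected layer. The helper names and the layer sizes (a 224x224 RGB input, 64 filters of size 3x3, 64 output neurons) are hypothetical values chosen for illustration, not figures from any particular network.

```python
def conv_params(in_channels, out_channels, kernel_size):
    """Weights plus one bias per filter in a square-kernel convolutional layer."""
    return out_channels * (in_channels * kernel_size * kernel_size + 1)


def dense_params(in_features, out_features):
    """Weights plus one bias per neuron in a fully connected layer."""
    return out_features * (in_features + 1)


# Hypothetical sizes: a 224x224 RGB image feeding 64 filters or 64 neurons.
conv_count = conv_params(in_channels=3, out_channels=64, kernel_size=3)
dense_count = dense_params(in_features=224 * 224 * 3, out_features=64)

print(conv_count)   # 1792
print(dense_count)  # 9633856
```

Because a convolutional filter reuses the same small set of weights at every image position, the convolutional layer needs far fewer parameters than a fully connected layer over the same input. Totals still reach the millions once many layers and channels are stacked.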

Deep learning frameworks allow researchers to create and explore Convolutional Neural Networks (CNNs) and other Deep Neural Networks (DNNs) easily, while delivering the high speed needed for both experiments and industrial deployment. The NVIDIA Deep Learning SDK accelerates widely used deep learning frameworks such as Caffe, CNTK, TensorFlow, Theano, and Torch, as well as many other machine learning applications. The deep learning frameworks run faster on GPUs and scale across multiple GPUs within a single node. To use the frameworks with GPUs for Convolutional Neural Network training and inference, NVIDIA provides cuDNN and TensorRT™, respectively. cuDNN and TensorRT provide highly tuned implementations of standard routines such as convolution, pooling, normalization, and activation layers.

Click here for a step-by-step installation and usage guide. You can also find a fast C++/NVIDIA® CUDA® implementation of convolutional neural networks here.

To develop and deploy a vision model in no time, NVIDIA offers the DeepStream SDK for vision AI developers, as well as the Transfer Learning Toolkit (TLT) to create accurate and efficient AI models for computer vision.

Next Steps

- Learn how a CNN detects brain hemorrhages with accuracy rivaling experts

- For a more technical deep dive: Deep Learning in a Nutshell: Core Concepts, Understanding Convolution in Deep Learning, and the difference between a CNN and an RNN

- NVIDIA provides optimized software stacks to accelerate training and inference phases of the deep learning workflow. Learn more on the NVIDIA deep learning home page.

- The NVIDIA Deep Learning Institute (DLI) offers hands-on training for developers, data scientists, and researchers in AI and accelerated computing

- For developer news and resources, check out the NVIDIA developers site.