A random forest is a supervised learning algorithm that builds an ensemble of many decision trees and combines their outputs into a single consensus prediction. Random forest can be used for classification or regression.

Random Forest

What Is A Random Forest?

Random forest is a popular ensemble learning method for classification and regression.

Ensemble learning methods combine multiple machine learning (ML) algorithms to obtain a better model—the wisdom of crowds applied to data science. They’re based on the concept that a group of people with limited knowledge about a problem domain can collectively arrive at a better solution than a single person with greater knowledge.

Random forest is an ensemble of decision trees, a problem-solving metaphor that’s familiar to nearly everyone. Decision trees arrive at an answer by asking a series of true/false questions about elements in a data set. In the example below, to predict a person's income, a decision tree looks at variables (features) such as whether the person has a job (yes or no) and whether the person owns a house (yes or no). In an algorithmic context, the machine repeatedly searches for the feature that splits the observations in a set so that the resulting groups are as different from each other as possible and the members of each subgroup are as similar to each other as possible.

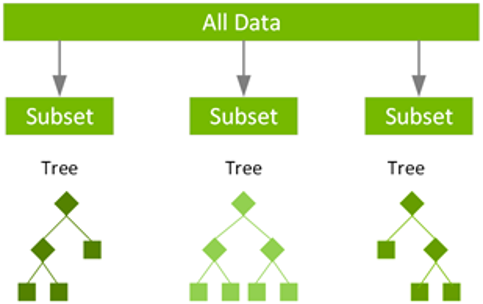
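The split search described above can be sketched in a few lines. This is a minimal illustration, not the method of any particular library: it assumes Gini impurity as the measure of how mixed a group is (other criteria exist), and the toy rows, labels, and the function names `gini` and `best_split` are hypothetical.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 0.0 for a pure group, larger for a more mixed one."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def best_split(rows, labels):
    """Return (feature_index, weighted_impurity) for the best yes/no question."""
    n = len(rows)
    best = None
    for f in range(len(rows[0])):
        yes = [y for x, y in zip(rows, labels) if x[f]]
        no = [y for x, y in zip(rows, labels) if not x[f]]
        # Size-weighted impurity of the two groups the question produces.
        score = (len(yes) * gini(yes) + len(no) * gini(no)) / n
        if best is None or score < best[1]:
            best = (f, score)
    return best

# Hypothetical toy data: each row is (has_job, owns_house); the label is an income band.
rows = [(True, True), (True, False), (True, True),
        (False, False), (False, True), (False, False)]
labels = ["high", "high", "high", "low", "low", "low"]

feature, impurity = best_split(rows, labels)
print(feature, impurity)  # feature 0 (has_job) separates the two bands cleanly
```

In this toy set, asking about the job yields two pure groups, while asking about the house leaves both groups mixed, so the first question is chosen.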

Random forest uses a technique called “bagging” to build full decision trees in parallel, each from a random bootstrap sample of the data set and a random subset of the features. A single decision tree is built from a fixed set of features and often overfits, so the randomness injected into each tree is critical to the success of the forest.

Randomness ensures that individual trees have low correlations with each other, which reduces the variance of the combined prediction. Averaging over a large number of trees also reduces the problem of overfitting, which occurs when a model incorporates too much “noise” from the training data and makes poor decisions as a result.
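Why low correlation matters can be seen from a standard result about averages. As a simplifying assumption, suppose the forest averages $B$ trees $T_1, \dots, T_B$ whose predictions at a point $x$ each have variance $\sigma^2$ and pairwise correlation $\rho$ (these symbols are introduced here for illustration). Then:

$$
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B} T_b(x)\right) = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
$$

Adding trees shrinks only the second term. The first term stays fixed no matter how many trees are added, so lowering the correlation between trees is what lets the ensemble keep improving.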

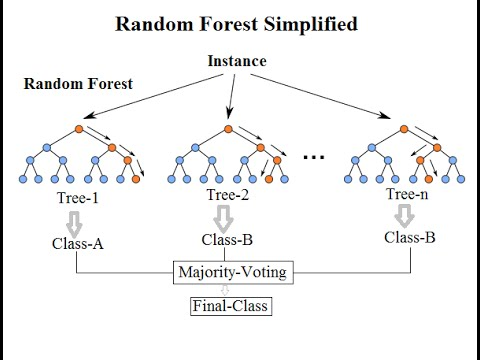

With a random forest model, the chance of making correct predictions increases with the number of uncorrelated trees in the model. The results are of higher quality because they reflect the decision reached by the majority of trees. This voting process keeps the errors of individual trees from dominating the result: even though some trees are wrong, others will be right, so the group of trees collectively moves in the correct direction. While random forest models can run slowly when many features are considered, even small models working with a limited number of features can produce very good results.
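The effect of voting can be illustrated with a small calculation. The sketch below makes an idealized assumption: a binary problem in which every tree is right with the same probability and the trees err independently. Real trees are correlated, so the gains are smaller in practice. The function name `majority_vote_accuracy` is hypothetical.

```python
from math import comb

def majority_vote_accuracy(n_trees, p_correct):
    """Probability that more than half of n_trees independent voters are right.

    Intended for an odd n_trees, so that ties cannot occur.
    """
    k_min = n_trees // 2 + 1  # smallest number of correct votes that wins
    return sum(
        comb(n_trees, k) * p_correct ** k * (1 - p_correct) ** (n_trees - k)
        for k in range(k_min, n_trees + 1)
    )

# Each tree is assumed to be right 60% of the time.
for n in (1, 11, 101):
    print(n, majority_vote_accuracy(n, 0.6))
```

Under these assumptions the probability of a correct majority rises with the number of trees whenever each tree is better than a coin flip, and stays at one half when each tree is no better than chance.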

How Does A Random Forest Work?

Each tree in a random forest is trained on a random sample of the training data, a process known as bootstrap aggregating (bagging). A model is fit to each of these smaller data sets and the predictions are aggregated. Because the sampling is done with replacement, the same instance can be used several times within one sample. The result is a set of trees that are trained not only on different sets of data, but also on different features used to make decisions.

Image source: KDNuggets
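The sampling described above can be sketched with the Python standard library alone. The sizes, the seed, and the helper names `bootstrap_sample` and `random_feature_subset` are hypothetical. For brevity the sketch draws one feature subset per tree, whereas common implementations draw a fresh subset at every split.

```python
import random

def bootstrap_sample(n_rows, rng):
    """Draw n_rows row indices with replacement (a bootstrap sample)."""
    return [rng.randrange(n_rows) for _ in range(n_rows)]

def random_feature_subset(n_features, k, rng):
    """Pick k distinct feature indices, without replacement."""
    return sorted(rng.sample(range(n_features), k))

rng = random.Random(0)  # fixed seed so the sketch is repeatable
n_rows, n_features, n_trees = 10, 6, 3

plans = []
for t in range(n_trees):
    rows = bootstrap_sample(n_rows, rng)
    feats = random_feature_subset(n_features, 2, rng)
    # Rows that were never drawn for this tree (often called "out-of-bag").
    left_out = sorted(set(range(n_rows)) - set(rows))
    plans.append((rows, feats, left_out))
    print(f"tree {t}: rows={rows} features={feats} left out={left_out}")
```

Because rows are drawn with replacement, some indices repeat within a sample and others are missing from it, which is what makes each tree see a different view of the data.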

Use Cases

Examples of classification include:

- Fraud detection

- Spam detection

- Text sentiment analysis

- Predicting patient risk, sepsis, or cancer

Examples of regression include:

- Predicting the amount of fraud

- Predicting sales

Why Random Forest?

There are five principal advantages to the random forest model:

- It’s well-suited for both regression and classification problems. The output variable in regression is a continuous number, such as the price of a house in a neighborhood. The output variable in a classification problem is usually a single category, such as whether a house is likely to sell above or below the asking price.

- It handles missing values and maintains high accuracy, even when large amounts of data are missing, thanks to bagging and replacement sampling.

- The algorithm makes model overfitting much less likely because of the “majority rules” output.

- The model can handle very large data sets with thousands of input variables, making it a good tool for dimensionality reduction.

- Its algorithms can be used to identify the most important features from the training data set.
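The first advantage in the list above, handling both classification and regression, comes down to how the per-tree outputs are combined. A minimal sketch, with hypothetical per-tree outputs and function names:

```python
from collections import Counter
from statistics import mean

def aggregate_classification(votes):
    """Majority vote: the most common class label across the trees."""
    return Counter(votes).most_common(1)[0][0]

def aggregate_regression(predictions):
    """Average of the numeric predictions across the trees."""
    return mean(predictions)

# Hypothetical outputs of five trees for a single house.
class_votes = ["above", "below", "above", "above", "below"]
price_preds = [310_000, 295_000, 305_000, 320_000, 300_000]

label = aggregate_classification(class_votes)  # "above" wins 3 votes to 2
price = aggregate_regression(price_preds)      # mean of the five prices
print(label, price)
```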

There are also a couple of disadvantages:

- Random forests generally outperform single decision trees, but their accuracy is often lower than that of gradient-boosted tree ensembles such as XGBoost.

- With a large number of trees, random forests can be slower than XGBoost.

Gradient-Boosted Decision Trees

Gradient-boosted decision trees (GBDTs) are a decision tree ensemble learning algorithm, similar to random forest, for classification and regression. Both random forest and GBDT build a model consisting of multiple decision trees. The difference is in how the trees are built and combined.

GBDT uses a technique called boosting to iteratively train an ensemble of shallow decision trees, with each iteration using the residual error of the previous model to fit the next model. The final prediction is a weighted sum of all the tree predictions. Random forest bagging minimizes the variance and overfitting, while GBDT boosting reduces the bias and underfitting.
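The residual-fitting loop described above can be sketched for a one-dimensional regression problem. This is a simplified illustration under stated assumptions, not how XGBoost or any other library is implemented: it assumes squared-error loss, uses one-split trees ("stumps") as the shallow learners, and applies the same learning rate to every tree. The data and the names `fit_stump`, `fit_gbdt`, and `mse` are hypothetical.

```python
def fit_stump(xs, residuals):
    """Fit a one-split tree to the residuals, minimising squared error."""
    best = None
    # Try every threshold that leaves at least one point on each side.
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean

def fit_gbdt(xs, ys, n_rounds=50, learning_rate=0.1):
    """Boosting: each round fits a stump to the current residual error."""
    base = sum(ys) / len(ys)  # start from the mean prediction
    stumps = []
    preds = [base] * len(ys)
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * stump(x) for x, p in zip(xs, preds)]
    # Final prediction: base value plus the weighted sum of all stumps.
    return lambda x: base + learning_rate * sum(s(x) for s in stumps)

# Hypothetical toy data, roughly linear with a little noise.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.2, 1.9, 3.2, 3.8, 5.1, 6.1, 6.8, 8.1]

def mse(model):
    """Mean squared error of a model on the toy training data."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(ys)

baseline = fit_gbdt(xs, ys, n_rounds=0)  # predicts the mean only
boosted = fit_gbdt(xs, ys, n_rounds=50)
print(mse(baseline), mse(boosted))
```

Each round removes part of the remaining training error, which is the sense in which boosting reduces bias. A small learning rate slows that process down so that no single tree dominates the sum.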

XGBoost (eXtreme Gradient Boosting) is a leading, scalable, distributed variation of GBDT. With XGBoost, the boosting rounds still run one after another, but the work of building each tree, in particular evaluating candidate splits, is parallelized. XGBoost follows a level-wise strategy, scanning across gradient values and using these partial sums to evaluate the quality of splits at every possible split in the training set.

XGBoost has achieved wide popularity because of its broad range of use cases, portability, diverse language support, and cloud integration.

When comparing random forest to XGBoost, keep in mind that model accuracy can deteriorate because of two distinct sources of error, bias and variance:

- Gradient-boosting models combat both bias and variance by boosting for many rounds at a low learning rate.

- Gradient-boosting model hyperparameters also help to combat variance.

- Random forest models combat both bias and variance using tree depth and the number of trees.

- Random forest trees may need to be much deeper than their gradient-boosting counterparts.

- More data reduces both bias and variance.
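The two error sources in the list above can be stated formally with the standard decomposition of expected squared error. The notation is introduced here for illustration: the target is assumed to be $y = f(x) + \varepsilon$, where the noise $\varepsilon$ has zero mean and variance $\sigma_\varepsilon^2$, and $\hat{f}$ is the model fit to a randomly drawn training set.

$$
\mathbb{E}\!\left[\big(y - \hat{f}(x)\big)^2\right]
= \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\operatorname{Var}\big(\hat{f}(x)\big)}_{\text{variance}}
+ \underbrace{\sigma_\varepsilon^2}_{\text{irreducible noise}}
$$

Tree depth, number of trees, learning rate, and training set size act on the first two terms. The noise term is a property of the data and cannot be reduced by either model.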

NVIDIA GPU-Accelerated Random Forest, XGBoost, and End-to-End Data Science

Architecturally, the CPU is composed of just a few cores with lots of cache memory that can handle a few software threads at a time. In contrast, a GPU is composed of hundreds of cores that can handle thousands of threads simultaneously.

The NVIDIA RAPIDS™ suite of open-source software libraries, built on CUDA-X AI, provides the ability to execute end-to-end data science and analytics pipelines entirely on GPUs. It relies on NVIDIA CUDA primitives for low-level compute optimization, but exposes that GPU parallelism and high-bandwidth memory speed through user-friendly Python interfaces.

With the RAPIDS GPU DataFrame, data can be loaded onto GPUs using a Pandas-like interface, and then used for various connected machine learning and graph analytics algorithms without ever leaving the GPU. This level of interoperability is made possible through libraries like Apache Arrow and allows acceleration for end-to-end pipelines—from data prep to machine learning to deep learning.

RAPIDS’s machine learning algorithms and mathematical primitives follow a familiar scikit-learn-like API. Popular tools like XGBoost, Random Forest, and many others are supported for both single-GPU and large data center deployments. For large datasets, these GPU-based implementations can complete 10-50x faster than their CPU equivalents.

cuML now has support for multi-node, multi-GPU random forest building. With multi-GPU support, a single NVIDIA DGX-1 can train forests 56x faster than a dual-CPU, 40-core node.

The NVIDIA RAPIDS team works closely with the DMLC XGBoost organization, and GPU-accelerated XGBoost now includes seamless, drop-in GPU acceleration, which significantly speeds up model training and improves accuracy. Tests of an XGBoost script running on a system with an NVIDIA P100 accelerator and 32 Intel Xeon E5-2698 CPU cores showed more than a four-fold speed improvement over the same test run on a non-GPU system, with the same output quality. This is particularly important because data scientists typically run XGBoost many times in order to tune parameters and find the best accuracy.

To really scale data science on GPUs, applications need to be accelerated end-to-end. cuML now brings the next evolution of support for tree-based models on GPUs, including the new Forest Inference Library (FIL). FIL is a lightweight, GPU-accelerated engine that performs inference on tree-based models, including gradient-boosted decision trees and random forests. With a single V100 GPU and two lines of Python code, users can load a saved XGBoost or LightGBM model and perform inference on new data up to 36x faster than on a dual 20-core CPU node. Building on the open-source Treelite package, the next version of FIL will add support for scikit-learn and cuML random forest models as well.

Next Steps

Find out about:

- Building an Accelerated Data Science Ecosystem: RAPIDS Hits Two Years

- GPU-Accelerated Data Science with RAPIDS | NVIDIA

- Gradient Boosting, Decision Trees, and XGBoost with CUDA

- Explaining and Accelerating Machine Learning for Loan Delinquencies

- Accelerating Random Forests up to 45X using cuML

- RAPIDS Forest Inference Library: Prediction at 100 million rows per second

- NVIDIA Developer Blog | Technical content: For developers, by developers

- Bias Variance Decompositions using XGBoost

- RAPIDS 0.9: A Model Built To Scale | by Josh Patterson | RAPIDS AI