The NGC container registry features the top software for accelerated data science, machine learning, and analytics. Tap into powerful software for executing end-to-end data science training pipelines completely in the GPU, reducing training time from days to minutes.

READY-TO-RUN DEEP LEARNING SOFTWARE

The NGC container registry provides a comprehensive catalog of GPU-accelerated AI containers that are optimized, tested and ready-to-run on supported NVIDIA GPUs on-premises and in the cloud. AI containers from NGC, including TensorFlow, PyTorch, MXNet, NVIDIA TensorRT™, and more, give users the performance and flexibility to take on their most challenging projects with the power of NVIDIA AI. This helps data scientists and researchers rapidly build, train, and deploy AI models to meet continually evolving demands.

Accelerated Machine Learning and Analytics

Three Reasons Why

PERFORMANCE-ENGINEERED AI CONTAINERS

NGC AI containers deliver record-setting performance as evidenced by industry-standard AI benchmarks such as MLPerf. These results are a testament to years of relentless innovation in architecture, systems, and a fully tuned software stack. Users can take advantage of the same optimized software stack used for these benchmarks on-premises or in the cloud with NGC containers. Each month, NVIDIA engineers work with the open source community, contribute optimizations to a variety of AI projects, and make these optimizations available in updates to the top NGC AI containers.

ACCESS FROM ANYWHERE

The catalog of NVIDIA-optimized AI software containers is available to everyone, at no cost, for use across supported platforms, from the desktop, to the data center, to the cloud. Containerized software allows for portability across environments, reducing the overhead typically required to scale AI workloads. Users now have access to a consistent, optimized set of tools that work seamlessly across NVIDIA GPUs in PCs and workstations, on servers in the data center, and in the cloud. This means users can spend less time on IT and more time experimenting, gaining insights, and driving results.

INNOVATION FOR EVERY INDUSTRY

Users can quickly tap into the power of NVIDIA AI with fully optimized software containers that run on the world’s fastest GPU architecture. These provide powerful tools for tackling some of the most complex problems facing humankind. Providing early detection and finding cures for infectious diseases. Finding imperfections in critical infrastructure. Delivering deep business insights from large-scale data. Even reducing traffic fatalities. Every industry, from automotive and healthcare to fintech, is being transformed by NVIDIA AI.

DOWNLOAD THE NGC DEEP LEARNING SOFTWARE BRIEF

Learn more about the optimizations to the top deep learning software such as TensorFlow, PyTorch, MXNet, NVIDIA TensorRT™, Theano, Caffe2, Microsoft Cognitive Toolkit (CNTK), and more, in this brief.

FREQUENTLY ASKED QUESTIONS

-

What do I get for deep learning by signing up for NGC?

You get access to a comprehensive catalog of fully integrated and optimized deep learning framework containers—all at no cost.

-

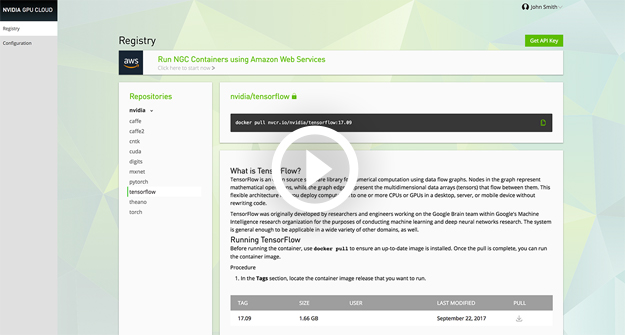

Who can access the containers on NGC?

You can access the NGC container registry either as a guest or as a registered user. As a guest, you’ll be able to browse the NGC container registry and download select containers such as CUDA. By signing up as a registered user at no charge, you can download all of the containers from the NGC container registry. To browse as a guest or sign up, visit https://ngc.nvidia.com.

-

What is in the deep learning containers?

Each container has the NVIDIA GPU Cloud Software Stack, a pre-integrated stack of GPU-accelerated software optimized for deep learning on NVIDIA GPUs. It includes a Linux OS, CUDA runtime, required libraries, and the chosen framework or application (TensorFlow, NVCaffe, NVIDIA DIGITS, etc.)—all tuned to work together immediately with no additional setup.
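As a rough illustration of what "tuned to work together immediately" means in practice, the following Python sketch could be run inside a container to see which pieces of the stack are visible. It is only a sketch under stated assumptions: the module names in `FRAMEWORK_MODULES` and the helper `probe_container_stack` are hypothetical names chosen for this example, and the presence of the `nvidia-smi` tool on the PATH is assumed rather than guaranteed by the text above.

```python
import importlib.util
import shutil

# Hypothetical list of Python module names to probe; adjust it to match
# the framework container you actually pulled.
FRAMEWORK_MODULES = ["tensorflow", "torch", "mxnet"]


def probe_container_stack(modules=FRAMEWORK_MODULES):
    """Report which modules are importable and whether nvidia-smi is on PATH."""
    # find_spec locates a top-level module without importing it.
    report = {name: importlib.util.find_spec(name) is not None for name in modules}
    # Assumption: the NVIDIA driver utility is exposed inside the container.
    report["nvidia-smi"] = shutil.which("nvidia-smi") is not None
    return report


if __name__ == "__main__":
    for component, present in probe_container_stack().items():
        print(f"{component}: {'found' if present else 'missing'}")
```

A framework-specific container would typically report only its own framework as found; the check says nothing about performance, only about what is installed.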

-

What deep learning software is available on NGC?

The NGC container registry has NVIDIA GPU-accelerated releases of the most popular frameworks: NVCaffe, Caffe2, Microsoft Cognitive Toolkit (CNTK), NVIDIA DIGITS, MXNet, PyTorch, TensorFlow, Theano, Torch, CUDA (base level container for developers), as well as NVIDIA TensorRT inference accelerator.

-

What are the supported platforms for the deep learning containers?

The GPU-accelerated deep learning containers are tuned, tested, and certified by NVIDIA to run on NVIDIA TITAN V, TITAN Xp, TITAN X (Pascal), NVIDIA Quadro GV100, GP100 and P6000, NVIDIA DGX Systems, and on supported NVIDIA GPUs on Amazon EC2, Google Cloud Platform, Microsoft Azure, and Oracle Cloud Infrastructure.

-

Can I run the deep learning containers from NGC on my PC with a GeForce® GTX 1080 Ti?

Yes. The terms of use allow the NGC deep learning containers to be used on desktop PCs with NVIDIA Volta or Pascal-powered GPUs, and the GeForce GTX 1080 Ti is a Pascal-based GPU.

-

How do I run deep learning containers from NGC on a cloud service provider such as Amazon or Google Cloud?

The deep learning containers on NGC are designed to run on NVIDIA Volta or Pascal™ powered GPUs. The cloud service providers (CSPs) supported by NGC offer instance types that provide the appropriate NVIDIA GPUs for running the NGC containers. To run the containers, you will need to choose one of these instance types, instantiate the appropriate image file on it, and then access NGC from within that image. The steps to do this vary by CSP, but you can find step-by-step instructions for each CSP in the NVIDIA GPU Cloud documentation.
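Once a GPU instance is up, the last step (accessing NGC from within the image) usually comes down to a container pull and run. The Python sketch below only assembles and prints those commands; it does not execute them. Several details are assumptions not stated above: the registry host `nvcr.io`, the `nvidia` namespace, the placeholder tag `YY.MM-py3`, and the `--gpus all` flag, which requires a recent Docker with NVIDIA's container runtime support (older setups used a separate `nvidia-docker` wrapper). The helper names `ngc_image_ref` and `docker_commands` are hypothetical. Follow the CSP-specific documentation for the exact steps.

```python
import shlex


def ngc_image_ref(name, tag, registry="nvcr.io", namespace="nvidia"):
    """Build a container image reference (registry and namespace are assumed defaults)."""
    return f"{registry}/{namespace}/{name}:{tag}"


def docker_commands(image):
    """Return the pull and run commands for an image as argument lists."""
    pull = ["docker", "pull", image]
    # Assumption: Docker with GPU support enabled, so "--gpus all" is accepted.
    run = ["docker", "run", "--gpus", "all", "--rm", "-it", image]
    return pull, run


if __name__ == "__main__":
    # "YY.MM-py3" is a placeholder, not a real tag; look up current tags on NGC.
    image = ngc_image_ref("tensorflow", "YY.MM-py3")
    for cmd in docker_commands(image):
        print(shlex.join(cmd))
```

Keeping the commands as argument lists makes it easy to hand them to `subprocess.run` later without shell quoting problems.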

-

How often are the deep learning framework containers updated?

Monthly. The deep learning containers on NGC benefit from continuous R&D investment by NVIDIA and joint engineering with framework engineers to ensure that each deep learning framework is tuned for the fastest training possible. NVIDIA engineers continually optimize the software, delivering monthly container updates to ensure that your deep learning investment reaps greater returns over time.

-

What kind of support does NVIDIA offer for these deep learning containers?

Users get access to the NVIDIA DevTalk Developer Forum (https://devtalk.nvidia.com), supported by a large community of deep learning and GPU experts from the NVIDIA customer, partner, and employee ecosystem.

-

Why is NVIDIA providing these deep learning containers?

NVIDIA is accelerating the democratization of AI by giving deep learning researchers and developers simplified access to GPU-accelerated deep learning frameworks. This makes it easy for them to run these optimized frameworks on NVIDIA GPUs in the cloud or on local systems.

-

If there’s no charge for the deep learning containers, do I pay for compute time?

There is no charge for the containers on the NGC container registry (subject to the terms of use). However, each cloud service provider sets its own pricing for accelerated computing services.