Robotics Simulation

Simulate, test, and validate physical AI-based robots and multi-robotic fleets.

Fraunhofer IML

Workloads

Robotics

Simulation / Modeling / Design

Industries

Manufacturing

Smart Cities / Spaces

Retail / Consumer Packaged Goods

Healthcare and Life Sciences

Business Goal

Innovation

Products

NVIDIA Isaac Sim

NVIDIA Omniverse

Overview

What Is Robot Simulation?

Physical AI-powered robots and robot fleets need to autonomously sense, plan, and perform complex tasks in the physical world. These include transporting and manipulating objects safely and efficiently in dynamic and unpredictable environments.

To achieve this level of autonomy, a "sim-first" approach is required.

Robot simulation lets robotics developers train, simulate, and validate these advanced systems through virtual robot learning and testing. This sim-first approach also applies to multi-robot fleet testing and enables autonomous systems to understand and interact with industrial facilities based on real-time production data, sensor inputs, and reasoning. It all happens in physics-based digital representations of environments, such as warehouses and factories, prior to deployment.

Why Simulate?

Bootstrap AI Model Development

Bootstrap AI model training with synthetic data generated from digital twin environments when real-world data is limited or restricted.

Scale Your Testing

Test a single robot or a fleet of industrial robots in real time under various conditions and configurations.

Reduce Costs

Optimize robot performance and reduce the number of physical prototypes required for testing and validation.

Test Safely

Safely test potentially hazardous scenarios without risking human safety or damaging equipment.

Technical Implementation

Workflows Powered by Robotics Simulation

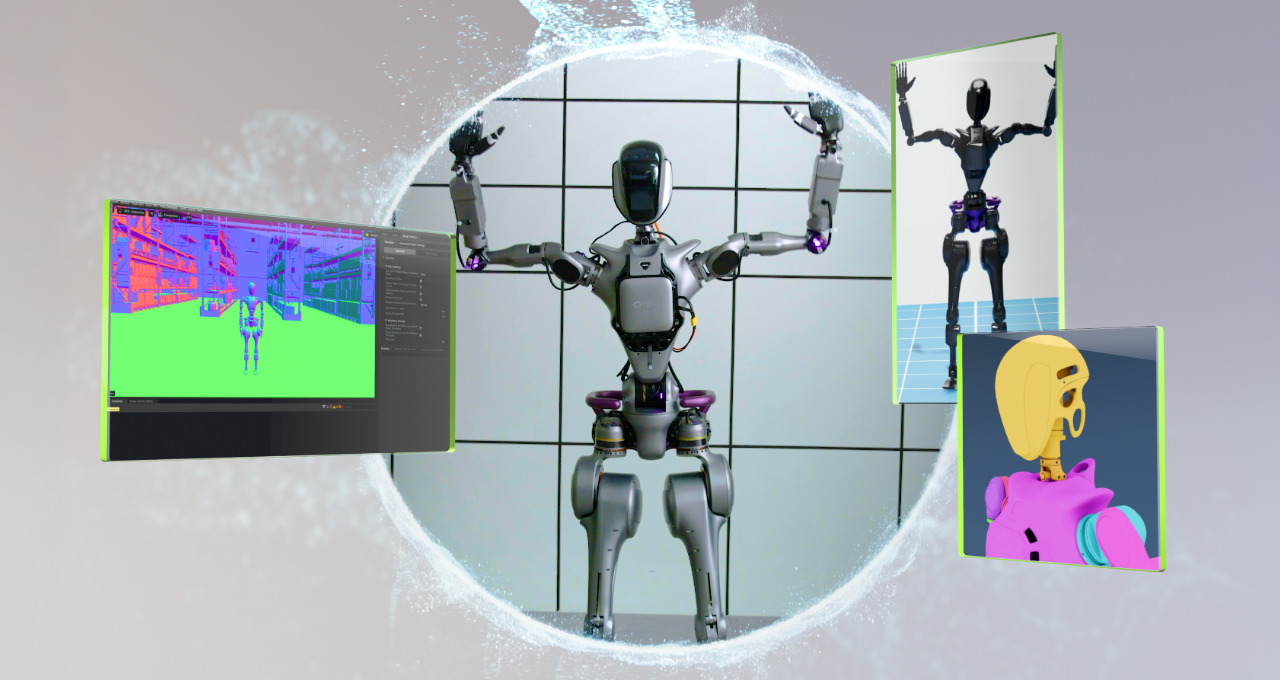

Training Robot Brains

Simulation can unlock novel use cases by bootstrapping the training of foundation models and robot policy models with synthetic data generation (SDG). This data can consist of text, 2D or 3D images in the visual and non-visual spectra, and even motion data, all of which can be combined with real-world data to train multimodal physical AI models.

Domain randomization is a key step in the SDG workflow: many parameters in a scene, such as the location, color, texture, and lighting of objects, are varied to generate a diverse dataset. Augmentation in post-processing with NVIDIA Cosmos™ world foundation models (WFMs) can further diversify the generated data and add the realism needed to narrow the simulation-to-real gap.
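The idea behind domain randomization can be illustrated with a minimal Python sketch. It does not use Isaac Sim or Cosmos; the `SceneParams` fields, the texture names, and the value ranges are all hypothetical, chosen only to show how independently sampled scene parameters yield a varied set of configurations that a renderer could then turn into images.

```python
import random
from dataclasses import dataclass


@dataclass
class SceneParams:
    """One randomized scene configuration (all fields are hypothetical)."""
    position_m: tuple        # object (x, y) position on an assumed 1 m x 1 m table
    color_rgb: tuple         # object color, each channel in [0, 1]
    texture: str             # name of a texture asset
    light_intensity: float   # arbitrary units


# Hypothetical texture names, used only for illustration.
TEXTURES = ["wood", "metal", "cardboard", "plastic"]


def sample_scene(rng: random.Random) -> SceneParams:
    """Draw one configuration by sampling every parameter independently."""
    return SceneParams(
        position_m=(rng.uniform(0.0, 1.0), rng.uniform(0.0, 1.0)),
        color_rgb=(rng.random(), rng.random(), rng.random()),
        texture=rng.choice(TEXTURES),
        light_intensity=rng.uniform(200.0, 1500.0),
    )


def generate_dataset(num_scenes: int, seed: int = 0) -> list:
    """Return num_scenes randomized configurations; a fixed seed keeps runs reproducible."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(num_scenes)]


if __name__ == "__main__":
    for params in generate_dataset(3):
        print(params)
```

In a real pipeline each configuration would be applied to the simulated scene before rendering and labeling; seeding the generator makes a given dataset reproducible.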

Helping Robots Learn in a Digital Playground

Robot learning is critical to ensure that autonomous machines can perform robust skills repeatedly and efficiently in the physical world. High-fidelity simulation provides a virtual training ground where robots hone their skills through trial and error or through imitation. The higher the fidelity of the simulation, the more easily the behaviors a robot learns there transfer to the real world.

NVIDIA Isaac™ Lab, an open-source, unified, and modular framework for robot training built on NVIDIA Isaac Sim™, simplifies common workflows in robotics, such as reinforcement learning, learning from demonstrations, and motion planning.
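To make "learning through trial and error" concrete, here is a minimal sketch of tabular Q-learning, one simple form of reinforcement learning. It does not use the Isaac Lab API. The six-cell corridor, the reward of 1.0 at the goal, and the hyperparameters are assumptions made for illustration; real robot training uses far richer simulated environments and neural-network policies.

```python
import random

N_STATES = 6          # corridor cells 0..5; the goal is cell 5 (toy assumption)
ACTIONS = (-1, +1)    # step left or step right


def step(state, action):
    """Toy simulator: move along the corridor, reward 1.0 on reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values by repeatedly acting in the simulator."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore at random with probability epsilon, or when values are tied.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            nxt, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move the estimate toward reward + discounted best next value.
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
    return q


def greedy_policy(q):
    """Best learned action for every non-goal cell."""
    return [ACTIONS[0] if row[0] > row[1] else ACTIONS[1] for row in q[:-1]]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

After training, the greedy policy steps toward the goal from every cell, a behavior the agent was never told explicitly and discovered only by acting in the simulator.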

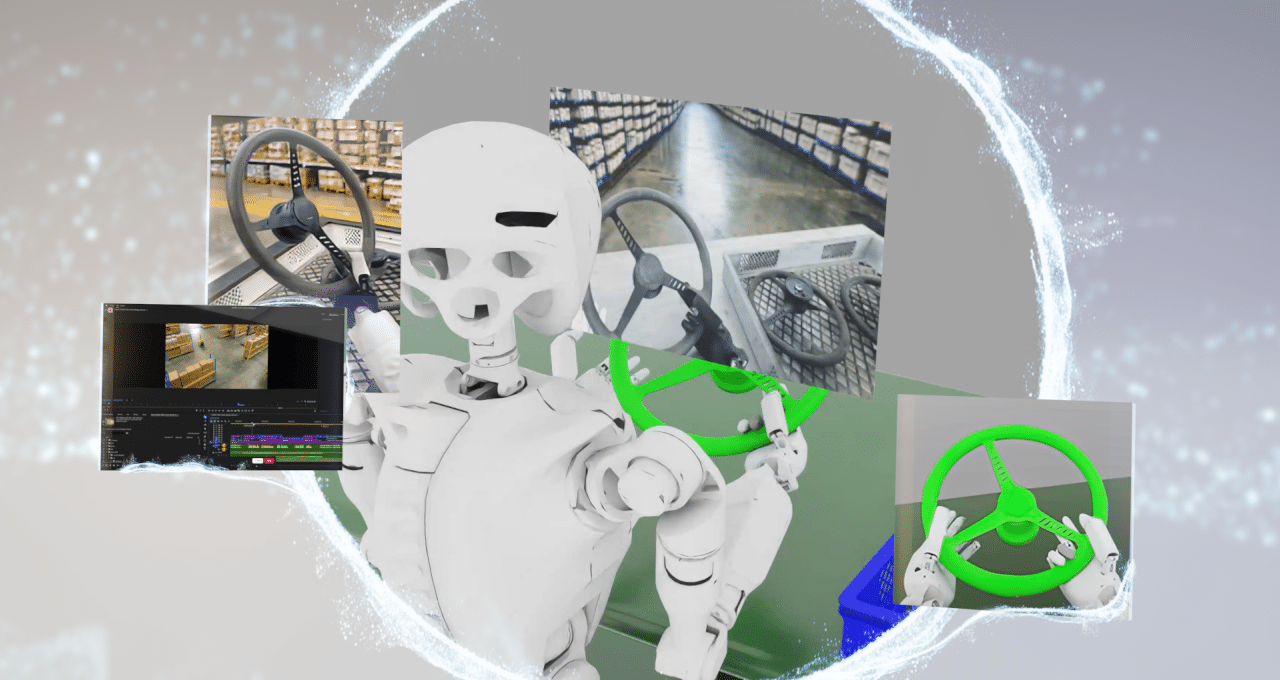

Testing and Validating Robots

Testing Single Robots

Software-in-the-loop (SIL) is a critical testing and validation stage in the development of software for physical AI-powered robotics systems. In SIL, the software that controls the robot is tested in a simulated environment rather than on real hardware.

SIL testing relies on accurate modeling of real-world physics, including sensor inputs, actuator dynamics, and environmental interactions. Isaac Sim gives developers the features needed to check that the robot software stack behaves in simulation as it would on the physical robot, which improves the validity of the test results.
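The SIL pattern can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Isaac Sim code: `SimulatedCart` is a hypothetical one-dimensional stand-in for the robot, and `pd_controller` plays the role of the software under test. The point is that the controller only sees sensor readings and only emits commands, so the same function could later run against real hardware.

```python
from dataclasses import dataclass


@dataclass
class SimulatedCart:
    """Stand-in for the physical robot: a 1-D point mass with viscous friction."""
    mass_kg: float = 2.0
    friction: float = 0.5        # N per (m/s), assumed value
    position_m: float = 0.0
    velocity_mps: float = 0.0

    def read_sensor(self) -> tuple:
        """Simulated sensor input: current position and velocity."""
        return self.position_m, self.velocity_mps

    def apply_force(self, force_n: float, dt: float) -> None:
        """Simulated actuator dynamics, integrated with a semi-implicit Euler step."""
        accel = (force_n - self.friction * self.velocity_mps) / self.mass_kg
        self.velocity_mps += accel * dt
        self.position_m += self.velocity_mps * dt


def pd_controller(target_m, position_m, velocity_mps, kp=20.0, kd=8.0):
    """Software under test: a proportional-derivative position controller."""
    return kp * (target_m - position_m) - kd * velocity_mps


def run_sil_test(target_m=1.0, duration_s=5.0, dt=0.01) -> float:
    """Close the loop between controller and simulated plant; return the final position error."""
    plant = SimulatedCart()
    for _ in range(round(duration_s / dt)):
        pos, vel = plant.read_sensor()
        plant.apply_force(pd_controller(target_m, pos, vel), dt)
    return abs(target_m - plant.position_m)


if __name__ == "__main__":
    error = run_sil_test()
    print("PASS" if error < 0.01 else "FAIL", f"final error = {error:.6f} m")
```

A test harness would run many such scenarios with different targets, masses, and friction values, and fail the build if any final error exceeds the tolerance.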

Testing Multi-Robot Fleets

SIL can also be extended from a single robot to fleets of robots. Warehouses and other industrial facilities are highly complex logistical environments with challenges including demand fluctuations, space constraints, and workforce availability. These environments can benefit from integrating fleets of robotic systems to support operations.

Mega is an NVIDIA Omniverse™ Blueprint for developing, testing, and optimizing physical AI and robot fleets at scale in a digital twin before deployment into real-world facilities. With Mega-driven digital twins, including world simulators that coordinate all robot activities and sensor data, enterprises can continuously update robot brains with intelligent routes and tasks to improve operational efficiency.

Orchestrating Robotics Workloads

Synthetic data generation, robot learning, and robot testing are highly interdependent workflows and require careful orchestration across a heterogeneous infrastructure. Robotic workflows also require developer-friendly specifications that simplify infrastructure setup, easy ways to trace data and model lineage, and a secure and streamlined way to deploy workloads.

NVIDIA OSMO provides a cloud-native orchestration platform for scaling complex, multi-stage, and multi-container robotics workloads across on-premises, private, and public clouds.
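The interdependence of these workflows can be pictured as a dependency graph. The sketch below is not an OSMO workflow specification; the stage names are hypothetical, and it uses only Python's standard-library `graphlib` to show how an orchestrator might derive a valid execution order and record which upstream stages fed each one.

```python
from graphlib import TopologicalSorter

# Each stage maps to the set of stages it depends on (hypothetical names).
PIPELINE = {
    "generate_synthetic_data": set(),
    "train_policy": {"generate_synthetic_data"},
    "test_single_robot": {"train_policy"},
    "test_robot_fleet": {"train_policy"},
    "publish_report": {"test_single_robot", "test_robot_fleet"},
}


def execution_order(pipeline: dict) -> list:
    """Return the stages in an order that respects every dependency."""
    return list(TopologicalSorter(pipeline).static_order())


def run_pipeline(pipeline: dict) -> list:
    """Walk the stages in order and record a simple lineage of (stage, inputs)."""
    lineage = []
    for stage in execution_order(pipeline):
        inputs = sorted(pipeline[stage])
        # A real orchestrator would launch a container or job here.
        lineage.append((stage, inputs))
    return lineage


if __name__ == "__main__":
    for stage, inputs in run_pipeline(PIPELINE):
        print(f"{stage} <- {inputs}")
```

The two testing stages depend only on the trained policy, so a scheduler is free to run them in parallel on different machines; the lineage list is a stand-in for the data and model tracking described above.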
