Get started today with this GPU Ready Apps Guide.

MXNet is an open-source deep learning framework that allows you to define, train, and deploy deep neural networks on a wide array of devices, from cloud infrastructure to mobile devices. It is highly scalable, allowing for fast model training, and supports a flexible programming model and multiple languages.

MXNet allows you to mix symbolic and imperative programming flavors to maximize both efficiency and productivity. It is built on a dynamic dependency scheduler that automatically parallelizes both symbolic and imperative operations on the fly. A graph optimization layer on top of that makes symbolic execution fast and memory efficient.

The MXNet library is portable and lightweight. It is accelerated on NVIDIA Pascal GPUs and scales across multiple GPUs and multiple nodes, allowing you to train models faster.

Installation

System Requirements

You can run MXNet on Ubuntu/Debian, Amazon Linux, OS X, and Windows. MXNet also runs in Docker, on cloud platforms such as AWS, and on embedded devices such as the Raspberry Pi running Raspbian. MXNet currently supports the Python, R, Julia, and Scala languages.

These instructions are for Ubuntu/Debian users.

The GPU-enabled version of MXNet has the following requirements:

1. 64-bit Linux

2. Python 2.x / 3.x

3. NVIDIA CUDA® 7.0 or higher (CUDA 8.0 required for Pascal GPUs)

4. NVIDIA cuDNN v4.0 (minimum) or v5.1 (recommended)

You will also need an NVIDIA GPU supporting compute capability 3.0 or higher.
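The requirements above can be written down as a small version check. What follows is a minimal sketch, not part of MXNet: the helper names `parse_version` and `meets_gpu_requirements` are hypothetical, and you supply the version strings yourself (for example, from `nvcc --version`). The thresholds are the ones listed above.

```python
def parse_version(text):
    """Turn a dotted version string such as '8.0' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def meets_gpu_requirements(cuda, cudnn, compute_capability, pascal=False):
    """Return a list of unmet requirements (an empty list means all are met).

    Hypothetical helper; the thresholds come from the requirements list above.
    """
    problems = []
    min_cuda = "8.0" if pascal else "7.0"
    if parse_version(cuda) < parse_version(min_cuda):
        problems.append("CUDA %s or higher is required" % min_cuda)
    if parse_version(cudnn) < parse_version("4.0"):
        problems.append("cuDNN v4.0 or higher is required")
    if parse_version(compute_capability) < parse_version("3.0"):
        problems.append("compute capability 3.0 or higher is required")
    return problems


# Example: a Pascal GPU (compute capability 6.0) paired with CUDA 7.5
# fails only the CUDA check.
print(meets_gpu_requirements("7.5", "5.1", "6.0", pascal=True))
```

Comparing versions as integer tuples avoids the pitfalls of comparing them as strings or floats (for instance, "10.0" sorts before "8.0" as a string).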

Download and Installation Instructions

BELOW IS A BRIEF SUMMARY OF THE COMPILATION PROCEDURE

1. Install CUDA

To use MXNet with NVIDIA GPUs, the first step is to install the CUDA Toolkit.

2. Install cuDNN

Once the CUDA Toolkit is installed, download cuDNN v5.1 Library for Linux (note that you'll need to register for the Accelerated Computing Developer Program).

Once downloaded, uncompress the files and copy them into the CUDA Toolkit directory (assumed here to be /usr/local/cuda/).
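To confirm that the copy step worked, you can look for the cuDNN files under the CUDA Toolkit directory. The sketch below is an illustration only: the function name `find_cudnn_files` is hypothetical, and it assumes the usual layout in which the header lands in `include/cudnn.h` and the libraries in `lib64/libcudnn*`. Adjust the paths if your installation differs.

```python
import glob
import os


def find_cudnn_files(cuda_home="/usr/local/cuda"):
    """Return the cuDNN header and library files found under cuda_home.

    Assumption: the header is include/cudnn.h and the libraries match
    lib64/libcudnn*; other layouts are not detected.
    """
    header = os.path.join(cuda_home, "include", "cudnn.h")
    libraries = sorted(glob.glob(os.path.join(cuda_home, "lib64", "libcudnn*")))
    return {
        "header": header if os.path.isfile(header) else None,
        "libraries": libraries,
    }


found = find_cudnn_files()
if found["header"] and found["libraries"]:
    print("cuDNN files found under /usr/local/cuda")
else:
    print("cuDNN files missing; re-check the copy step")
```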

3. Install Dependencies

$ sudo apt-get update

$ sudo apt-get install -y git build-essential libatlas-base-dev \
    libopencv-dev graphviz python-pip

$ sudo pip install setuptools numpy --upgrade

$ sudo pip install graphviz jupyter

$ sudo pip install requests # for examples, e.g., mnist, cifar

4. Install MXNet

Clone the MXNet source code repository to your computer, build it with CUDA and cuDNN enabled, and refresh the environment variables. In addition to compiling MXNet, install the MXNet Python module and add its path to PYTHONPATH.

$ git clone https://github.com/dmlc/mxnet.git --recursive

$ cd mxnet

$ cp make/config.mk .

$ echo "USE_CUDA=1" >> config.mk # to enable CUDA

$ echo "USE_CUDA_PATH=/usr/local/cuda" >> config.mk

$ echo "USE_CUDNN=1" >> config.mk

$ make -j $(nproc)

$ cd python; sudo python setup.py install; cd .. # to install MXNet python module

$ export MXNET_HOME=$(pwd)

$ echo "export PYTHONPATH=$MXNET_HOME/python:$PYTHONPATH" >> ~/.bashrc

$ source ~/.bashrc
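The `echo ... >> config.mk` commands above append new assignments to the end of the file rather than editing existing ones; in make, a later assignment to a variable overrides an earlier one. The following sketch shows how you might check the effective values before building. The function name `effective_make_settings` is hypothetical, the sample text is illustrative rather than copied from the repository, and the parser only understands plain `NAME = VALUE` lines.

```python
def effective_make_settings(config_text):
    """Return the final value of each NAME = VALUE assignment in config_text.

    Later assignments override earlier ones, which is why lines appended
    with `echo ... >> config.mk` take effect. Simplification: only plain
    `=` assignments are handled (not `:=`, `+=`, or `?=`).
    """
    settings = {}
    for line in config_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if "=" not in line:
            continue
        name, value = line.split("=", 1)
        settings[name.strip()] = value.strip()
    return settings


# Illustrative config.mk content (an assumption, not the real file):
# default values first, then the three lines appended by the echo commands.
sample = """
USE_CUDA = 0
USE_CUDA_PATH = NONE
USE_CUDNN = 0
USE_CUDA=1
USE_CUDA_PATH=/usr/local/cuda
USE_CUDNN=1
"""
settings = effective_make_settings(sample)
print(settings["USE_CUDA"], settings["USE_CUDA_PATH"], settings["USE_CUDNN"])
```

To run the check against your own file, pass `open("config.mk").read()` from the mxnet directory instead of `sample`.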

5. Test your installation

Check if MXNet is properly installed.

$ python

Python 2.7.6 (default, Oct 26 2016, 20:30:19)

[GCC 4.8.4] on linux2

Type "help", "copyright", "credits" or "license" for more information.

>>> import mxnet as mx

>>> a = mx.nd.ones((2, 3), mx.cpu())

>>> print ((a * 2).asnumpy())

[[ 2. 2. 2.]

[ 2. 2. 2.]]

>>> a = mx.nd.ones((2, 3), mx.gpu())

>>> print ((a * 2).asnumpy())

[[ 2. 2. 2.]

[ 2. 2. 2.]]

If you don't get an import error, then MXNet is ready for Python.
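The interactive session above can also be run as a script, which is convenient on machines where you want an unattended check. This is a minimal sketch: the function name `check_mxnet` and the shape of its return value are assumptions made for illustration, while the MXNet calls are the same ones used in the session above. Any exception during the CPU or GPU computation is treated as "not working".

```python
def check_mxnet():
    """Report whether MXNet imports and whether CPU and GPU contexts work."""
    status = {"installed": False, "cpu": False, "gpu": False}
    try:
        import mxnet as mx
    except (ImportError, OSError):
        # Module missing, or its shared library could not be loaded.
        return status
    status["installed"] = True
    for name, make_context in (("cpu", mx.cpu), ("gpu", mx.gpu)):
        try:
            a = mx.nd.ones((2, 3), make_context())
            # asnumpy() copies the result to the host, so errors surface here.
            status[name] = bool(((a * 2).asnumpy() == 2).all())
        except Exception:
            # Assumption: any failure means this context is unusable.
            status[name] = False
    return status


print(check_mxnet())
```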

Training Models

Now that you have successfully installed MXNet, let’s test it with a few training examples on single and multiple GPUs.

MNIST MODEL

Image classification examples:

$ cd example/image-classification

Train a multilayer perceptron on the MNIST dataset using GPU 0:

$ python train_mnist.py --network mlp --gpus 0

CIFAR10 MODEL

Train a 110-layer ResNet on the CIFAR-10 dataset with batch size 128 using GPUs 0 and 1:

$ python train_cifar10.py --network resnet --num-layers 110 --batch-size 128 --gpus 0,1

INCEPTION V3

To run a benchmark on ImageNet networks, pass --benchmark 1 to train_imagenet.py:

$ cd example/image-classification # if you are at MXNET_HOME

$ python train_imagenet.py --benchmark 1 --gpus 0,1 --network inception-v3 --batch-size 64 --image-shape 3,299,299 --num-epochs 10 --kv-store device
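If you launch these training runs from Python rather than from the shell, building the command as an argument list sidesteps shell quoting and line-continuation issues. The sketch below is an illustration under stated assumptions: `build_train_command` is a hypothetical helper, not part of MXNet, and it simply maps keyword names to the flags used in the commands above by replacing underscores with dashes.

```python
import sys


def build_train_command(script, **options):
    """Build an argument list for one of the example training scripts.

    Hypothetical helper: keyword names become flags by replacing
    underscores with dashes (batch_size -> --batch-size). Options are
    sorted by name so the result is deterministic.
    """
    command = [sys.executable, script]
    for name, value in sorted(options.items()):
        command.append("--" + name.replace("_", "-"))
        command.append(str(value))
    return command


cmd = build_train_command(
    "train_imagenet.py",
    benchmark=1,
    gpus="0,1",
    network="inception-v3",
    batch_size=64,
    image_shape="3,299,299",
    num_epochs=10,
    kv_store="device",
)
print(" ".join(cmd))
# To launch it from example/image-classification:
#   import subprocess; subprocess.check_call(cmd)
```

The same helper covers the MNIST and CIFAR-10 runs, for example `build_train_command("train_mnist.py", network="mlp", gpus=0)`.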

Benchmarks

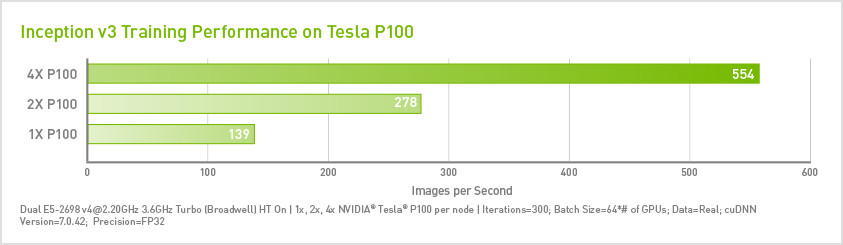

MXNET PERFORMANCE ON MULTI-GPU SYSTEM

Recommended System Configurations

Hardware Configuration

PC

| Parameter | Specs |
| --- | --- |
| CPU Architecture | x86_64 |
| System Memory | 8-32 GB |
| CPUs | 2 CPU sockets (8+ cores, 2+ GHz) |
| GPU Model | NVIDIA® TITAN |
| GPUs | 1-4 |

Servers

| Parameter | Specs |
| --- | --- |
| CPU Architecture | x86_64 |
| System Memory | 32 GB+ |
| CPUs/Nodes | 2 (8+ cores, 2+ GHz) |
| GPU Model | Tesla® P100 |
| GPUs/Node | 1-4 |

Software Configuration

Software stack

| Parameter | Version |
| --- | --- |
| OS | CentOS 6.2 |
| GPU Driver | 375.35 or newer |
| CUDA Toolkit | 8.0 or newer |
| cuDNN Library | v5.0 or newer |
| cuDNN | 2016.0.47 |