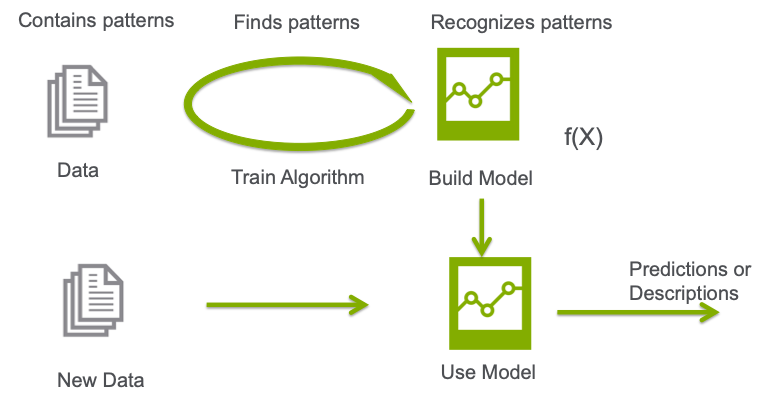

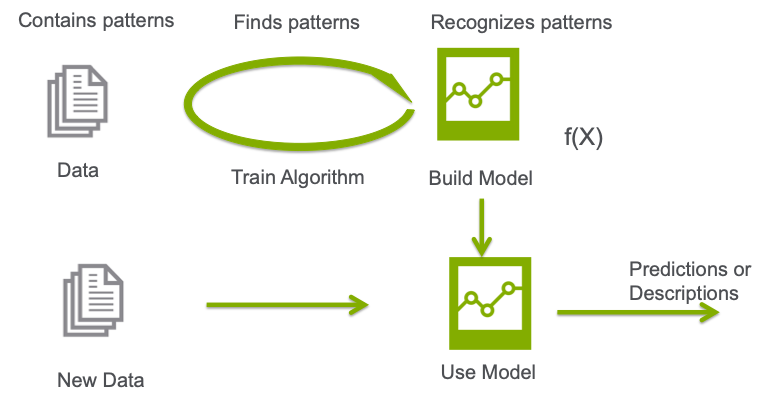

Machine learning (ML) employs algorithms and statistical models that enable computer systems to find patterns in massive amounts of data, and then uses a model that recognizes those patterns to make predictions or descriptions on new data.

In simplest terms, machine learning trains a machine to learn without being explicitly programmed how to do so. As a subset of AI, machine learning in its most elemental form uses algorithms to parse data, learn from it, and then make predictions or determinations about something in the real world.

In other words, machine learning uses algorithms to autonomously create models from data fed into a machine learning platform. Typical programmed or rule-based systems capture an expert's knowledge in programmed rules, but when data is changing, these rules can become difficult to update and maintain. Machine learning has the advantage of being able to learn from increasing volumes of data fed into the algorithms, and it can give data-driven probabilistic predictions. This capability to apply highly complex algorithms to today’s big data applications quickly and effectively is a relatively new development.

Just about any discrete task that can be undertaken with a data-defined pattern or with a set of rules can be automated, and therefore made far more efficient, using machine learning. This allows companies to transform processes that previously only humans could perform, including routing customer service calls and reviewing resumes, among many others.

The performance of a machine learning system depends on the capability of some number of algorithms for turning a data set into a model. Different algorithms are needed for different problems and tasks, and solving them depends as well on the quality of the input data and power of the computing resources.

Machine learning employs two main techniques, supervised and unsupervised learning, along with approaches that combine them or fall outside them. Supervised learning algorithms use labeled data, while unsupervised learning algorithms find patterns in unlabeled data. Semi-supervised learning uses a mixture of labeled and unlabeled data. Reinforcement learning trains algorithms to maximize rewards based on feedback.

Supervised machine learning, also called predictive analytics, uses algorithms to train a model to find patterns in a dataset with labels and features. It then uses the trained model to predict the labels on a new dataset’s features.

Supervised learning can be further categorized into classification and regression.

Classification identifies which category an item belongs to based on labeled examples of known items. In the simple example below, logistic regression is used to estimate the probability of whether a credit card transaction is fraudulent or not (the label) based on features of transactions (transaction amount, time, and location of last transaction) known to be fraudulent or not.
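The fraud-classification idea described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production method: the transaction values are made up and pre-scaled to the 0 to 1 range, and the function names (`train_logistic_regression`, `predict_proba`) and hyperparameters are assumptions chosen for this example. In practice a library implementation would normally be used instead.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function mapping any real number to (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(weights, bias, x):
    """Estimated probability that feature vector x belongs to class 1 (fraud)."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_logistic_regression(features, labels, learning_rate=0.5, epochs=2000):
    """Fit weights and bias with full-batch gradient descent on the log loss."""
    n = len(features)
    n_features = len(features[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(features, labels):
            error = predict_proba(weights, bias, x) - y
            for j in range(n_features):
                grad_w[j] += error * x[j]
            grad_b += error
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias


# Hypothetical, pre-scaled features per transaction:
# [amount, time since last transaction, distance from last transaction location]
transactions = [
    [0.1, 0.9, 0.1], [0.2, 0.8, 0.0], [0.3, 0.7, 0.2], [0.2, 0.6, 0.1],
    [0.9, 0.1, 0.9], [0.8, 0.2, 0.8], [0.7, 0.1, 1.0], [1.0, 0.0, 0.7],
]
is_fraud = [0, 0, 0, 0, 1, 1, 1, 1]  # labels: 1 = known fraudulent

weights, bias = train_logistic_regression(transactions, is_fraud)

# Score two new, unlabeled transactions.
p_low = predict_proba(weights, bias, [0.15, 0.85, 0.05])
p_high = predict_proba(weights, bias, [0.95, 0.05, 0.90])
print(f"typical-looking transaction: {p_low:.3f}")
print(f"unusual-looking transaction: {p_high:.3f}")
```

The model outputs a probability rather than a hard label, so the threshold for flagging a transaction (0.5 or something stricter) remains a separate business decision.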

Other examples of classification include:

Regression estimates the relationship between a target outcome label and one or more feature variables to predict a continuous numeric value. In the simple example below linear regression is used to estimate the house price (the label) based on the house size (the feature).
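As a rough sketch of the house-price case, a single-feature linear regression can be fit with the closed-form least-squares formulas. The sizes and prices below are invented for illustration, and `fit_simple_linear_regression` is a hypothetical helper name, not part of any library.

```python
def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up training data: house size (feature) and sale price (label).
sizes_sqft = [1000, 1500, 2000, 2500, 3000]
prices_usd = [200_000, 290_000, 410_000, 500_000, 600_000]

slope, intercept = fit_simple_linear_regression(sizes_sqft, prices_usd)

# Predict the price of a house that was not in the training data.
predicted_price = slope * 1800 + intercept
print(f"price per extra square foot: {slope:.2f}")
print(f"predicted price for 1800 sq ft: {predicted_price:.0f}")
```

The slope is the part of the model a person can read directly: it estimates how much the predicted price changes for each additional square foot.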

Other examples of regression include:

Supervised learning algorithms include:

Unsupervised learning, also called descriptive analytics, doesn’t have labeled data provided in advance, and can aid data scientists in finding previously unknown patterns in data. These algorithms attempt to ‘learn’ the inherent structure in the input data, discovering similarities or regularities. Common unsupervised tasks include clustering and association.

In clustering, an algorithm classifies inputs into categories by analyzing similarities between input examples. An example of clustering is a company that wants to segment its customers in order to better tailor products and offerings. Customers could be grouped on features such as demographics and purchase histories. Clustering with unsupervised learning is often combined with supervised learning in order to get more valuable results.
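The customer-segmentation example can be illustrated with k-means, one common clustering algorithm (the text above does not name a specific one, so this choice is an assumption). The customer records are invented, the function names are hypothetical, and the first `k` points are used as starting centroids purely to keep the sketch deterministic.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length points."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def k_means(points, k, max_iterations=100):
    """Group points into k clusters; returns (centroids, assignments)."""
    # Deterministic start for illustration: the first k points are the centroids.
    centroids = [list(p) for p in points[:k]]
    assignments = None
    for _ in range(max_iterations):
        # Assignment step: each point joins its nearest centroid.
        new_assignments = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        if new_assignments == assignments:
            break  # no point changed cluster, so the result is stable
        assignments = new_assignments
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids, assignments


# Made-up customers: [age, average monthly spend]. No labels are provided.
customers = [
    [22, 30], [48, 210], [25, 35], [52, 190],
    [27, 28], [55, 220], [24, 40], [50, 205],
]

centroids, assignments = k_means(customers, k=2)
for customer, segment in zip(customers, assignments):
    print(customer, "-> segment", segment)
```

Because the features here are on different scales, spend dominates the distance calculation; real pipelines usually normalize features before clustering.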

Other clustering examples include:

Association or frequent pattern mining finds frequent co-occurring associations (relationships, dependencies) in large sets of data items. An example of co-occurring associations is products that are often purchased together, such as the famous beer and diaper story. An analysis of behavior of grocery shoppers discovered that men who buy diapers often also buy beer.
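A minimal way to see frequent pattern mining at work is to count how often pairs of items share a basket, then report each pair's support (the share of all baskets containing both items) and confidence (how often the second item appears given the first). The baskets below are invented and `frequent_pairs` is a hypothetical helper; production systems typically use algorithms such as Apriori or FP-Growth, which extend the same counting idea to larger item sets.

```python
from collections import Counter
from itertools import combinations


def frequent_pairs(baskets, min_support):
    """Return rules (antecedent, consequent, support, confidence) for item pairs."""
    n = len(baskets)
    item_counts = Counter()
    pair_counts = Counter()
    for basket in baskets:
        items = sorted(set(basket))  # ignore duplicates and fix the pair order
        item_counts.update(items)
        pair_counts.update(combinations(items, 2))

    rules = []
    for (a, b), count in pair_counts.items():
        support = count / n
        if support < min_support:
            continue
        # Confidence depends on direction, so emit one rule each way.
        rules.append((a, b, support, count / item_counts[a]))
        rules.append((b, a, support, count / item_counts[b]))
    return rules


# Made-up shopping baskets.
baskets = [
    ["diapers", "beer", "chips"],
    ["diapers", "beer"],
    ["diapers", "milk"],
    ["beer", "chips"],
    ["milk", "bread"],
    ["diapers", "beer", "milk"],
]

rules = frequent_pairs(baskets, min_support=0.5)
for antecedent, consequent, support, confidence in rules:
    print(f"{antecedent} -> {consequent}: "
          f"support={support:.2f}, confidence={confidence:.2f}")
```

The `min_support` threshold is what keeps the output manageable: pairs that co-occur only once or twice are dropped before any rule is reported.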

Unsupervised learning algorithms include:

The benefits of machine learning for business are varied and wide and include:

Accelerated computing and ML are supercharging intelligent computing for healthcare. With one platform for imaging, genomics, patient monitoring, and drug discovery—deployed anywhere, from embedded to edge to every cloud—NVIDIA Clara™ is enabling the healthcare industry to innovate and accelerate the journey to precision medicine.

Leading retailers are leveraging ML to reduce shrinkage, improve forecasting, automate warehouse logistics, determine in-store promotions and real-time pricing, enable customer personalization and recommendations, and deliver better shopping experiences—both in stores and online.

Understanding consumer behavior has never been more critical for retailers. To drive growth, intelligent recommendations are being used for personalized marketing. To improve revenue, online retailers are using GPU-powered machine learning (ML) and deep learning (DL) algorithms for faster, more accurate recommendation engines. Shoppers' purchase and web action histories provide the data for a machine learning model’s analysis, which yields the recommendations and supports the retailers’ upselling efforts.

Financial institutions are adopting ML to deliver smarter, more secure services. GPU-powered ML solutions can identify key insights in vast amounts of data to offload routine tasks from employees with automation, accelerate risk calculations and fraud detection, and enhance customer service with more accurate recommender systems.

NVIDIA provides pre-trained models and software solutions that greatly simplify ML applications. For instance, NVIDIA's Metropolis platform enables developers to build ML applications that improve retail inventory management, enhance loss prevention efforts, and simplify the checkout experience for consumers.

As a practical example, Walmart uses NVIDIA's tech to manage employee workflow, and to ensure the freshness of meat and produce in certain stores. Likewise, BMW uses NVIDIA's edge AI solutions to automate optical inspections in its manufacturing facilities. And China Mobile, which operates the world's largest wireless network, uses NVIDIA's platform to deliver AI capabilities over 5G networks.

Companies are increasingly data-driven, sensing market and environment data, and using analytics and machine learning to recognize complex patterns, detect changes, and make predictions that directly impact the bottom line. Data-driven companies use data science to manage and make sense of torrents of data.

Data science is part of every industry. Large companies in retail, finance, healthcare, and logistics leverage data science technologies to improve their competitiveness, responsiveness, and efficiency. Advertising companies use it to target ads more effectively. Mortgage companies use it to accurately forecast default risk for maximum returns. And retailers use it to streamline their supply chains. In fact, it was the availability in the mid-2000s of open-source, large-scale data analytics and machine learning software like Hadoop, NumPy, scikit-learn, pandas, and Spark that ignited this big data revolution.

Today, data science and machine learning have become the world's largest compute segment. Modest improvements in the accuracy of predictive machine learning models can translate into billions to the bottom line. The training of predictive models is at the core of data science. In fact, the majority of IT budgets for data science are spent on building machine learning models, which includes data transformation, feature engineering, training, evaluating, and visualizing. To build the best models, data scientists need to train, evaluate, and retrain with lots of iterations. Today, these iterations take days, limiting how many can occur before deploying to production and impacting the quality of the final result.

It takes massive infrastructure to run analytics and machine learning across enterprises. Fortune 500 companies scale out compute and invest in thousands of CPU servers to build massive data science clusters. CPU scale-out is no longer effective. While the world’s data doubles each year, CPU computing has hit a brick wall with the end of Moore’s law. GPUs have a massively parallel architecture consisting of thousands of small, efficient cores designed for handling multiple tasks simultaneously. Similar to how scientific computing and deep learning have turned to NVIDIA GPU acceleration, data analytics and machine learning will also benefit from GPU parallelization and acceleration.

NVIDIA developed RAPIDS™—an open-source data analytics and machine learning acceleration platform—for executing end-to-end data science training pipelines completely in GPUs. It relies on NVIDIA® CUDA® primitives for low-level compute optimization, but exposes that GPU parallelism and high memory bandwidth through user-friendly Python interfaces.

Data used by RAPIDS libraries is stored completely in GPU memory. These libraries access data using shared GPU memory in a data format that is optimized for analytics, Apache Arrow™. This eliminates the need for data transfer between different libraries. It also enables interoperability with standard data science software and data ingestion through the Arrow APIs. Running entire data science workflows in high-speed GPU memory and parallelizing data loading, data manipulation, and ML algorithms on GPU cores can make end-to-end data science workflows as much as 50X faster.

Focusing on common data preparation tasks for analytics and data science, RAPIDS offers a familiar DataFrame API that integrates with scikit-learn and a variety of machine learning algorithms without paying typical serialization costs. This allows acceleration for end-to-end pipelines—from data prep to machine learning to deep learning (DL). RAPIDS also includes support for multi-node, multi-GPU deployments, enabling vastly accelerated processing and training on much larger dataset sizes.

DataFrame - cuDF - This is a GPU-accelerated dataframe-manipulation library based on Apache Arrow. It’s designed to enable data management for model training. The Python bindings of the core-accelerated, low-level CUDA C++ kernels mirror the pandas API for seamless onboarding and transition from pandas.

Machine Learning Libraries - cuML - This collection of GPU-accelerated machine learning libraries will eventually provide GPU versions of all machine learning algorithms available in scikit-learn.

Graph Analytics Libraries - cuGraph - This collection of graph analytics libraries seamlessly integrates into the RAPIDS data science software suite.

Deep Learning Libraries - RAPIDS provides native CUDA array_interface and DLPack support. This means data stored in Apache Arrow can be seamlessly pushed to deep learning frameworks that accept array_interface, such as TensorFlow, PyTorch, and MXNet.

Visualization Libraries - RAPIDS will include tightly integrated data visualization libraries based on Apache Arrow. Native GPU in-memory data format provides high-performance, high-FPS data visualization, even with very large datasets.

As ML and DL are increasingly applied to larger datasets, Spark has become a commonly used vehicle for the data preprocessing needed to prepare raw input data for machine learning.

NVIDIA has been collaborating with the Apache Spark community to bring GPUs into Spark’s native processing. With Apache Spark 3.0 and the RAPIDS Accelerator for Apache Spark, you can now run a single pipeline, from data ingestion and data preparation to model training and tuning, on a GPU-powered cluster, removing bottlenecks, increasing performance, and simplifying clusters.