Automatic speech recognition (ASR), or speech-to-text, is the combination of processes and software that decode human speech and convert it to digitized text.

Automatic Speech Recognition (ASR), or Speech-to-Text

What is Automatic Speech Recognition?

Automatic speech recognition (ASR) takes human voice as input and converts it into readable text. ASR helps us compose hands-free text messages and provides a framework for machine understanding. Human language becomes searchable and actionable, giving developers the ability to derive advanced analytics like sentiment analysis.

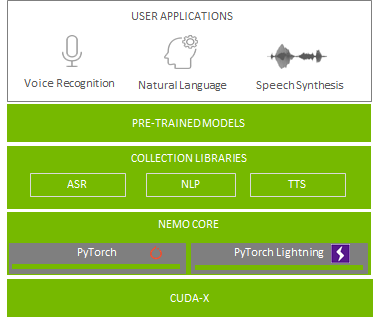

ASR is the first stage of a conversational AI application pipeline. Conversational AI is the use of natural language to communicate with machines. A typical conversational AI application uses three subsystems: one transcribes the audio, one understands the question asked (derives its meaning) and generates a text response, and one speaks the response back to the human. These steps are carried out by multiple deep learning models working together. First, ASR processes the raw audio signal and transcribes text from it. Second, natural language processing (NLP) derives meaning from the transcribed text (the ASR output). Last, speech synthesis, or text-to-speech (TTS), artificially produces human speech from text. Optimizing this multistep process is complicated, because each step requires building and using one or more deep learning models.
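The three-stage flow described above (ASR, then NLP, then TTS) can be pictured as a simple composition of functions. The sketch below is illustrative only: `ConversationalPipeline`, the stage type aliases, and the stub lambdas are hypothetical names standing in for real ASR, NLP, and TTS models, and audio is assumed to be a plain list of float samples.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical stage signatures: audio is a list of float samples, text is a str.
AsrStage = Callable[[List[float]], str]   # speech -> transcript
NlpStage = Callable[[str], str]           # transcript -> response text
TtsStage = Callable[[str], List[float]]   # response text -> synthesized audio


@dataclass
class ConversationalPipeline:
    asr: AsrStage
    nlp: NlpStage
    tts: TtsStage

    def respond(self, audio: List[float]) -> Tuple[str, str, List[float]]:
        """Run one conversational turn: transcribe, understand and respond, speak."""
        transcript = self.asr(audio)
        reply = self.nlp(transcript)
        speech = self.tts(reply)
        return transcript, reply, speech


# Stub stages standing in for real models (illustration only).
pipeline = ConversationalPipeline(
    asr=lambda audio: "what are your opening hours",
    nlp=lambda text: "We open at 9 a.m." if "hours" in text else "Could you repeat that?",
    tts=lambda text: [0.0] * (len(text) * 100),  # silent placeholder waveform
)
transcript, reply, speech = pipeline.respond([0.01, -0.02, 0.03])
```

Keeping each stage behind a plain function interface is one way to swap models independently, for example replacing the ASR stage with a domain-adapted model without touching the NLP or TTS stages.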

Why ASR?

Applications of speech recognition and conversational AI are growing every day, from voice assistants and chatbots to question-answering systems that enable customer self-service. The range of industries adopting ASR or conversational AI in their solutions is wide, spanning domains from finance to healthcare. Speech-to-text has a wide variety of practical applications:

- People in “hands-busy” professions like surgery or piloting an aircraft can take notes and issue commands while working.

- When using a keyboard is impractical or dangerous, such as while driving, users can issue spoken commands or dictate messages.

- Voice-activated telephone response systems can process complex requests without requiring users to navigate menus.

- People with disabilities who are unable to use other input means can use speech to interact with computers and other automated systems.

- Automated transcription is both faster and less expensive than transcription by human operators.

- Speech recognition is faster than typing in most cases. The average person can speak about 150 words per minute but type only about 40, and typing is even slower on cramped smartphone keypads.

- Speech-to-text is now ubiquitous in smartphones and desktop computers. Special-purpose applications are also available for medical, legal, and educational use. As applications become mainstream and are deployed through devices in the home, car, and office, academic and industry research in this space has exploded.

How Does ASR Work?

ASR is a challenging natural language task because it consists of a series of subtasks, such as speech segmentation, acoustic modelling, and language modelling, that together form a prediction (a sequence of labels) from noisy, unsegmented input data. Deep learning has largely replaced traditional statistical methods for ASR, such as Hidden Markov Models and Gaussian Mixture Models, because it offers higher accuracy when identifying phonemes (the most basic sounds used to create speech). The introduction of Connectionist Temporal Classification (CTC) for deep learning removed the need for pre-segmented data and allows the network to be trained end-to-end, directly for sequence labelling tasks like ASR.
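What CTC training optimizes can be made concrete with a small example. The probability of a transcript is the sum, over every frame-level path that collapses to it (merge repeats, then drop blanks), of that path's probability; the CTC loss is its negative log. The sketch below assumes the per-frame probabilities are already given (in a real model they come from a softmax) and that index 0 is the blank symbol. It computes the quantity twice, by brute-force enumeration and by the standard forward recursion, to show that they agree. Production implementations such as PyTorch's `torch.nn.CTCLoss` work in log space to avoid underflow; plain probabilities are used here for readability.

```python
import itertools
import math


def collapse(path, blank=0):
    """CTC collapsing rule: merge repeated symbols, then drop blanks."""
    out, prev = [], None
    for s in path:
        if s != prev and s != blank:
            out.append(s)
        prev = s
    return out


def ctc_brute_force(probs, label, blank=0):
    """Definition of the CTC likelihood: sum P(path) over every frame-level
    path that collapses to `label`. Exponential in the number of frames."""
    num_frames, num_symbols = len(probs), len(probs[0])
    total = 0.0
    for path in itertools.product(range(num_symbols), repeat=num_frames):
        if collapse(path, blank) == list(label):
            total += math.prod(probs[t][k] for t, k in enumerate(path))
    return total


def ctc_forward(probs, label, blank=0):
    """Same quantity via the CTC forward (dynamic programming) recursion,
    which runs in O(frames * label length) time."""
    ext = [blank]
    for s in label:  # interleave blanks: _ l1 _ l2 _ ...
        ext += [s, blank]
    alpha = [0.0] * len(ext)
    alpha[0] = probs[0][ext[0]]
    if len(ext) > 1:
        alpha[1] = probs[0][ext[1]]
    for row in probs[1:]:
        new = [0.0] * len(ext)
        for s, sym in enumerate(ext):
            total = alpha[s] + (alpha[s - 1] if s >= 1 else 0.0)
            # Skipping over a blank is allowed only between two different labels.
            if s >= 2 and sym != blank and sym != ext[s - 2]:
                total += alpha[s - 2]
            new[s] = total * row[sym]
        alpha = new
    return alpha[-1] + (alpha[-2] if len(ext) > 1 else 0.0)


# Toy per-frame probabilities over {0: blank, 1: "a", 2: "b"} (made-up values).
probs = [[0.5, 0.4, 0.1],
         [0.3, 0.3, 0.4],
         [0.6, 0.1, 0.3]]
p_forward = ctc_forward(probs, [1, 2])   # P("ab") = 0.261
p_brute = ctc_brute_force(probs, [1, 2])
ctc_loss = -math.log(p_forward)          # the value CTC training minimizes
```

Here five paths collapse to "ab" (for example `a b b`, `a _ b`, and `_ a b`), and their probabilities sum to 0.261. The forward recursion reaches the same total without enumerating paths, which is what makes end-to-end training on unsegmented audio practical.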

A typical ASR CTC pipeline involves the following steps:

- Feature extraction: The first step is to extract useful audio features from the input audio while ignoring noise and other irrelevant information. The audio is typically converted into a spectrogram, a mel spectrogram, or Mel Frequency Cepstral Coefficients (MFCCs), all of which capture its spectral features.

- Acoustic model: Spectrograms are passed to a deep learning-based acoustic model that predicts the probability of each character at each time step. The acoustic model is trained on datasets (LibriSpeech ASR Corpus, Wall Street Journal, TED-LIUM Corpus, Google AudioSet) consisting of hundreds of hours of audio and transcriptions in the target language. The acoustic model output can contain repeated characters, depending on how a word is pronounced.

- Decoding: The decoder and language model convert these characters into a sequence of words based on context. These words can be further buffered into phrases and sentences and punctuated appropriately before sending to the next stage.

  - Greedy (argmax): This is the simplest decoding strategy. At each time step, the character with the highest probability (from the temporal softmax output layer) is chosen, without regard to any semantic understanding of what is being communicated. Then repeated characters are collapsed and blank tokens are discarded.

  - Beam search with a language model: A language model can be used to add context and therefore correct mistakes made by the acoustic model. A beam search decoder weighs the relative probabilities of the softmax output against the likelihood of certain words appearing in context, and tries to determine what was spoken by combining what the acoustic model thinks it heard with what is a likely next word.
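The two decoding strategies described above can be sketched in pure Python, assuming the acoustic model has already produced a per-frame probability distribution over an alphabet whose index 0 is the CTC blank. The beam search is a simplified CTC prefix beam search with a character-level language model; `toy_lm`, its bigram probabilities, and the acoustic probabilities are all made up for illustration and do not come from any real model or corpus.

```python
from collections import defaultdict


def greedy_decode(probs, alphabet, blank=0):
    """Best-path CTC decoding: argmax per frame, collapse repeats, drop blanks."""
    best = [max(range(len(row)), key=row.__getitem__) for row in probs]
    chars, prev = [], None
    for k in best:
        if k != prev and k != blank:
            chars.append(alphabet[k])
        prev = k
    return "".join(chars)


def beam_search_decode(probs, alphabet, lm, lm_weight=1.0, beam_width=3, blank=0):
    """Simplified CTC prefix beam search with a character-level LM.

    Each beam tracks [p_blank, p_nonblank]: the probability of the prefix over
    paths ending in a blank vs. in its last character. The LM score, raised to
    lm_weight, is applied each time a new character is appended to a prefix.
    """
    beams = {(): (1.0, 0.0)}
    for row in probs:
        nxt = defaultdict(lambda: [0.0, 0.0])
        for prefix, (p_b, p_nb) in beams.items():
            last = prefix[-1] if prefix else None
            for k, p in enumerate(row):
                if k == blank:
                    nxt[prefix][0] += (p_b + p_nb) * p
                    continue
                prev_char = alphabet[last] if last is not None else None
                lm_score = lm(prev_char, alphabet[k]) ** lm_weight
                if k == last:
                    # A repeat with no blank in between collapses into the same prefix...
                    nxt[prefix][1] += p_nb * p
                    # ...while a blank-separated repeat appends a new character.
                    nxt[prefix + (k,)][1] += p_b * p * lm_score
                else:
                    nxt[prefix + (k,)][1] += (p_b + p_nb) * p * lm_score
        ranked = sorted(nxt.items(), key=lambda item: sum(item[1]), reverse=True)
        beams = {prefix: tuple(scores) for prefix, scores in ranked[:beam_width]}
    best_prefix = max(beams, key=lambda prefix: sum(beams[prefix]))
    return "".join(alphabet[k] for k in best_prefix)


# Hypothetical character bigram LM: P(next_char | prev_char), None = start.
TOY_BIGRAM = {(None, "a"): 0.6, (None, "b"): 0.2, (None, "c"): 0.2,
              ("a", "a"): 0.1, ("a", "b"): 0.1, ("a", "c"): 0.8}


def toy_lm(prev_char, next_char):
    return TOY_BIGRAM.get((prev_char, next_char), 0.05)


alphabet = ["_", "a", "b", "c"]  # index 0 is the CTC blank
probs = [[0.05, 0.90, 0.03, 0.02],   # frame 0: clearly "a"
         [0.05, 0.05, 0.50, 0.40]]   # frame 1: "b" slightly ahead of "c"
greedy = greedy_decode(probs, alphabet)                                     # "ab"
acoustic_only = beam_search_decode(probs, alphabet, toy_lm, lm_weight=0.0)  # "ab"
with_lm = beam_search_decode(probs, alphabet, toy_lm, lm_weight=1.0)        # "ac"
```

With `lm_weight=0.0` the search uses acoustic scores only and agrees with greedy decoding. With the toy LM, which strongly expects "c" after "a", the decoder switches to "ac" even though the acoustic model slightly preferred "b". Production decoders typically work in log space, use word-level language models, and add an insertion bonus so the language model does not unduly favor short outputs.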

Accelerating ASR with Deep Learning and GPUs

Innovations like Connectionist Temporal Classification (CTC) have brought ASR squarely into the domain of deep learning. Popular deep learning models for ASR include wav2letter, DeepSpeech, LAS (Listen, Attend and Spell), and, more recently, Jasper by NVIDIA Research. Kaldi is a popular C++ toolkit for developing speech applications that supports traditional methods in addition to deep learning modules.

A GPU is composed of hundreds of cores that can handle thousands of threads in parallel. Because neural networks are built from large numbers of identical neurons, they are highly parallel by nature. This parallelism maps naturally to GPUs, providing a significant computation speed-up over CPU-only training. For example, GPU-accelerated Kaldi solutions can process audio 3,500 times faster than real time and 10 times faster than CPU-only options. This performance has made GPUs the platform of choice for training deep learning models and performing inference.
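To put the real-time figure in concrete terms: a speed-up over real time is the ratio of audio duration to processing time. Applying that ratio to the quoted 3,500× figure (simple arithmetic, not an additional benchmark) means an hour of audio would take roughly one second to process:

```latex
\text{speed-up over real time} = \frac{T_{\text{audio}}}{T_{\text{processing}}}
\qquad\Longrightarrow\qquad
T_{\text{processing}} = \frac{3600\ \text{s}}{3500} \approx 1.03\ \text{s}
```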

Industry Applications

Healthcare

One of the difficulties facing healthcare is making it easily accessible. Calling your doctor's office and waiting on hold is a common occurrence, as are delays in connecting with a claims representative. Using conversational AI to train chatbots is an emerging approach in healthcare to address the shortage of healthcare professionals and open lines of communication with patients.

The blog Empowering Smart Hospitals with NVIDIA Clara Guardian from NGC and NVIDIA Fleet Command describes how to build a Virtual Patient Assistant client application that takes input queries from the patient, interprets each query by extracting its intent and relevant slots, and computes a response in real time, delivered in a natural-sounding voice.

Financial Services

Conversational AI is helping financial services firms build better chatbots and AI assistants.

Retail

Chatbot technology is also commonly used in retail applications to accurately analyze customer queries and generate responses or recommendations. This streamlines the customer journey and makes store operations more efficient.

NVIDIA GPU-Accelerated Conversational AI tools

Deploying a service with conversational AI can seem daunting, but NVIDIA now has tools to make this process easier, including Neural Modules (NeMo for short) and a new technology called NVIDIA Riva. To save time, pretrained ASR models, training scripts, and performance results are also available on the NGC software hub.

NVIDIA NeMo is a PyTorch-based toolkit for developing conversational AI applications. Through modular deep neural network development, NeMo enables fast experimentation by connecting modules and mixing and matching components. NeMo modules typically represent data layers, encoders, decoders, language models, loss functions, or methods of combining activations. NeMo makes it easy to compose complex neural network architectures and systems from reusable components for ASR, NLP, and TTS. Additionally, on NVIDIA GPU Cloud (NGC) you can find NeMo resources for conversational AI, such as pretrained models, training and evaluation scripts, and end-to-end NeMo applications that let developers experiment with different algorithms and perform transfer learning on their own datasets.

To facilitate the implementation and domain adaptation of the complete ASR pipeline, NVIDIA created the Domain Specific – NeMo ASR Application. This application is built with NeMo and lets you train or fine-tune pretrained (acoustic and language) ASR models with your own data, so you can progressively create better-performing ASR models built specifically for your data.

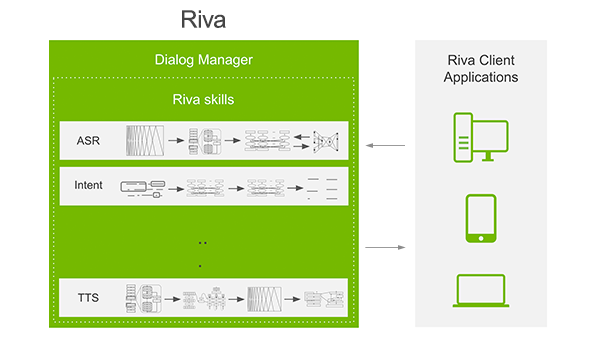

NVIDIA Riva is an application framework that provides several pipelines for accomplishing conversational AI tasks.

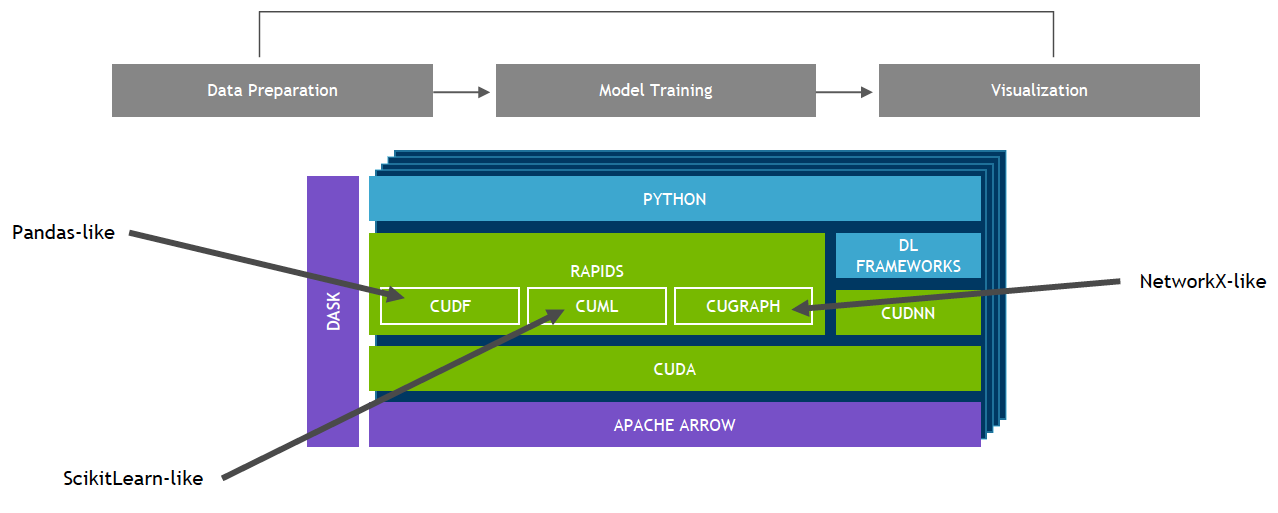

NVIDIA GPU-Accelerated, End-to-End Data Science

The NVIDIA RAPIDS™ suite of open-source software libraries, built on CUDA, gives you the ability to execute end-to-end data science and analytics pipelines entirely on GPUs, while still using familiar interfaces like the pandas and scikit-learn APIs.

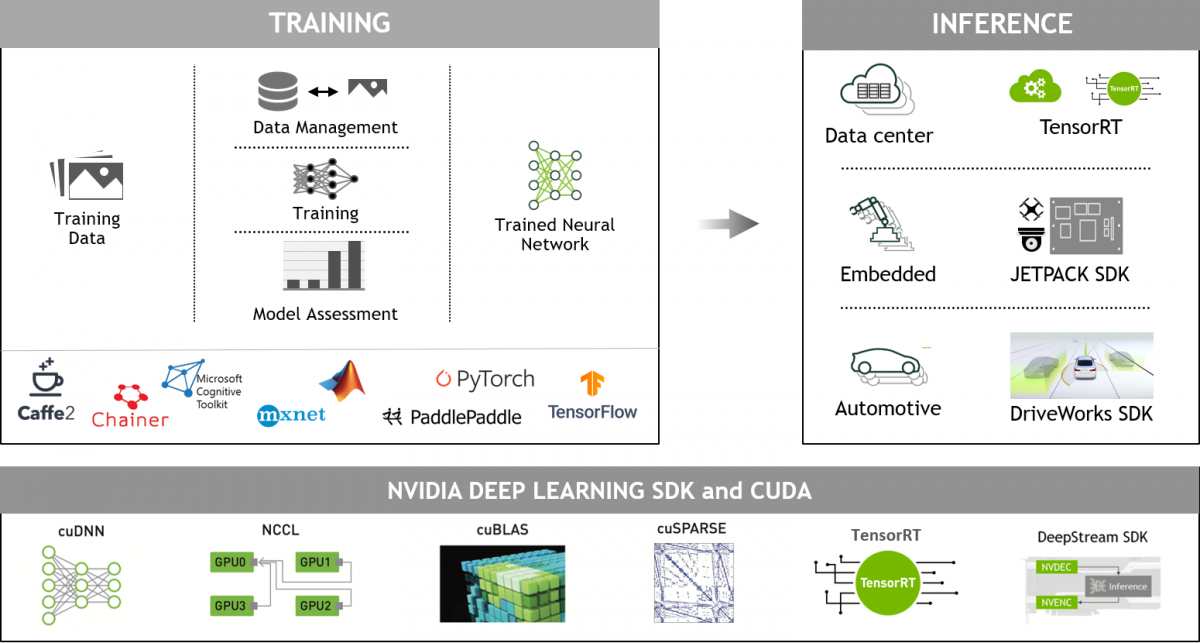

NVIDIA GPU-Accelerated Deep Learning Frameworks

GPU-accelerated deep learning frameworks offer flexibility to design and train custom deep neural networks and provide interfaces to commonly used programming languages such as Python and C/C++. Widely used deep learning frameworks such as MXNet, PyTorch, TensorFlow, and others rely on NVIDIA GPU-accelerated libraries to deliver high-performance, multi-GPU accelerated training.

Next Steps

To learn more, refer to the blogs, code samples, and webinar listed below:

- The NVIDIA Conversational AI and Conversational AI SDK web page

- How to Build Domain-Specific Automatic Speech Recognition Models on GPUs (Blog)

- Develop Speech Recognition Models with the NVIDIA NeMo Framework (Blog)

- ASR NeMo Code Sample

- Accelerating Automated Speech Recognition On-Demand Webinar

- The Domain-Specific – NeMo ASR Application is available for download as a Docker container (search for nemo_asr_app_img) on NGC, NVIDIA's container registry and software hub. Additionally, multiple pretrained ASR models are available on NGC.

- NVIDIA Releases New ASR Model and Speech Toolkit at Interspeech 2019

- GPU-Accelerated Speech to Text with Kaldi: A Tutorial on Getting Started

- Introducing Riva: Framework for GPU-Accelerated Conversational AI Applications

- Empowering Smart Hospitals with NVIDIA Clara Guardian from NGC

Find out more:

- GPU-accelerated data centers can deliver unprecedented performance with fewer servers, less floor space, and reduced power consumption. The NVIDIA GPU Cloud (NGC) provides extensive software libraries at no cost, as well as tools for building high-performance computing environments that take full advantage of GPUs.

- The NVIDIA Deep Learning Institute offers instructor-led, hands-on training on the fundamental tools and techniques for building Transformer-based natural language processing models for text classification tasks, such as categorizing documents.