NVIDIA DLSS in Deliver Us The Moon

With the Turing architecture, we set out to change gaming with two big leaps in graphics: real-time ray tracing and AI-accelerated graphics. Ray tracing provides the next generation of visual fidelity, and our first use of real-time AI, DLSS, boosts performance so you can enjoy that fidelity at higher frame rates.

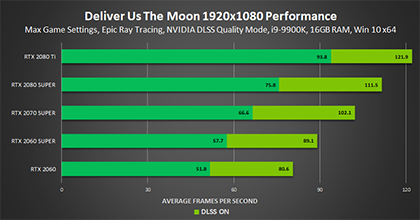

As an AI algorithm, DLSS is constantly learning and improving. Deliver Us The Moon demonstrates this continuous improvement: DLSS delivers a frame-rate boost of 1.6x or more while providing image quality comparable to native resolution with Temporal Anti-Aliasing (TAA).

This means that gamers can play at higher output resolutions and frame rates with ray tracing enabled: the GeForce RTX 2060 delivers 80 FPS at 1080p with ray tracing and DLSS, and GeForce RTX 2080 Ti owners can enjoy the definitive 4K 60 FPS ray-traced experience.


How DLSS Works

A deep neural network is trained on tens of thousands of beautiful, high-resolution images, rendered offline on a supercomputer at very low frame rates with 64 samples per pixel. Drawing on knowledge from countless hours of training, the network can then take lower-resolution images from multiple frames as input and construct beautiful, high-resolution images.

Turing’s Tensor Cores, with up to 110 teraflops of dedicated AI horsepower, make it possible for the first time to run a deep learning network in games in real time. The result is a big performance gain and sharp image quality, with minimal ringing and few temporal artifacts such as sparkling.

DLSS avoids the typical tradeoffs gamers are forced to make between image quality and performance. With traditional resolution scaling algorithms, fewer pixels are rendered for faster frame rates. This results in pixelated or blurry images. Adding sharpening algorithms can help, but these often miss fine details and introduce temporal instability or noise. DLSS provides faster performance and near-native image quality by using AI to reconstruct the details of a higher resolution image and stabilize it across frames.
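To make the traditional tradeoff concrete, here is a minimal Python sketch of the simplest conventional upscaler, nearest-neighbor scaling. This is a generic technique, not part of DLSS, and the function name `upscale_nearest` is hypothetical. Each low-resolution pixel is simply copied into a block of output pixels, which is where the blocky, pixelated look comes from; smoother filters such as bilinear trade that blockiness for blur.

```python
def upscale_nearest(img: list[list[int]], factor: int) -> list[list[int]]:
    """Nearest-neighbor upscale of a 2-D grid of pixel values by an integer factor.

    Generic illustration only (hypothetical helper, not DLSS): every source
    pixel is duplicated into a factor x factor block, so no new detail is created.
    """
    return [
        # Each output column x reads the source column x // factor.
        [row[x // factor] for x in range(len(row) * factor)]
        for row in img
        # Repeat every source row `factor` times vertically.
        for _ in range(factor)
    ]


if __name__ == "__main__":
    # A 2x2 checkerboard rendered at low resolution...
    low_res = [[0, 255],
               [255, 0]]
    # ...becomes a 4x4 image made of 2x2 solid blocks.
    for row in upscale_nearest(low_res, 2):
        print(row)
```

DLSS, by contrast, is described above as reconstructing detail from multiple frames rather than copying or interpolating the pixels of a single frame.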

DLSS in Deliver Us The Moon

Deliver Us The Moon brings new DLSS modes: Quality, Balanced, and Performance. These options control the DLSS rendering resolution, letting you choose the right balance of image quality and performance. At 1920x1080 and 2560x1440 the default mode is Quality; at 4K it is Performance.
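The effect of a mode on rendering cost can be sketched with simple arithmetic: a per-axis scale factor sets the internal render resolution, and the shaded pixel count falls with the square of that factor. The default-mode table below comes from the paragraph above (assuming "4K" means 3840x2160). The per-axis scale factors are hypothetical placeholders chosen for illustration; this post does not state the factors Deliver Us The Moon uses.

```python
# Default modes as stated in the article.
# Assumption: "4K" means 3840x2160 (UHD).
DEFAULT_MODE = {
    (1920, 1080): "Quality",
    (2560, 1440): "Quality",
    (3840, 2160): "Performance",
}

# HYPOTHETICAL per-axis render scales, for illustration only.
# The article does not publish the factors used in Deliver Us The Moon.
HYPOTHETICAL_AXIS_SCALE = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
}


def render_resolution(out_w: int, out_h: int, mode: str) -> tuple[int, int]:
    """Internal render size for a given output size under a hypothetical mode scale."""
    scale = HYPOTHETICAL_AXIS_SCALE[mode]
    return round(out_w * scale), round(out_h * scale)


def pixel_fraction(out_w: int, out_h: int, mode: str) -> float:
    """Fraction of output pixels actually rendered before DLSS reconstruction."""
    w, h = render_resolution(out_w, out_h, mode)
    return (w * h) / (out_w * out_h)


if __name__ == "__main__":
    for (w, h), mode in DEFAULT_MODE.items():
        rw, rh = render_resolution(w, h, mode)
        frac = pixel_fraction(w, h, mode)
        print(f"{w}x{h} {mode}: render {rw}x{rh} ({frac:.0%} of output pixels)")
```

Under these assumed factors, a 50% per-axis scale at 4K renders only a quarter of the output pixels, which illustrates why a lower-resolution mode is the sensible default at the highest output resolution.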

The performance of our AI network has continued to improve over the last year, and as a result DLSS in Deliver Us The Moon scales well across all GeForce RTX GPUs and resolutions. In fact, our performance measurements show big gains across the entire GeForce RTX lineup.

Most importantly, DLSS achieves this performance while keeping image quality comparable to native resolution with TAA. Let’s look at some examples.

DLSS maintains the crucial line work on the pillars and railings, the specular lights, the subtle gradients, and all the details. Here, it strengthens the horizontal catwalk railing, which looks a bit more aliased in the native image with TAA. Images captured at 1080p using DLSS ‘Quality’ Mode.

In some cases, you may even see more image detail:

DLSS doesn’t just maintain image quality and boost performance; here, it also stabilizes the intricate cyclone fencing. Images captured at 1080p using DLSS ‘Quality’ Mode.

Because DLSS has learned from massive supersampled image sequences, far more detailed than anything that can be rendered in real time, it can preserve detail that TAA loses, such as on the following console panel:

Our deep learning network analyzes incredibly detailed image sequences, so you’ll sometimes see extremely fine detail that normally gets lost due to TAA. Here, the console screen shows additional, minuscule text. Images captured at 1080p using DLSS ‘Quality’ Mode.

Our DLSS Journey Continues

Our DLSS supercomputer and engineers have made great strides in performance and image quality over the last year, but we are not done yet. There are still several cases where DLSS has more to learn. Let’s look at two in Deliver Us The Moon:

This comparison shows roping on the railing cables with DLSS. As DLSS improves, we’ll be able to train it to better understand these patterns. Images captured at 1080p using DLSS ‘Quality’ Mode.

DLSS shows slight banding patterns on this menu screen. We’re learning how DLSS can do a better job here. Images captured at 1080p using DLSS ‘Quality’ Mode.

We aim to tackle these cases, and more, as we learn and innovate with DLSS. In the meantime, we are excited by the progress made in delivering big gains in performance while keeping image quality comparable to native resolution in Deliver Us The Moon. We hope you enjoy it too.

Check out DLSS in Deliver Us The Moon and let us know what you think on the NVIDIA Forums.