RAPIDS is a suite of open-source software libraries and APIs for executing end-to-end data science and analytics pipelines entirely on GPUs, allowing for a substantial speedup, particularly on large data sets. Built on top of NVIDIA® CUDA® and UCX, the RAPIDS Accelerator for Apache Spark enables GPU-accelerated SQL/DataFrame operations and Spark shuffles with no code change.
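In practice, "no code change" means the accelerator is switched on through Spark configuration rather than by editing queries. The following is a minimal sketch, assuming the plugin jar is already on the cluster's classpath. The helper names `rapids_conf` and `to_submit_args` are hypothetical; `spark.plugins`, `com.nvidia.spark.SQLPlugin`, and `spark.rapids.sql.enabled` are the property and class names used by the RAPIDS Accelerator documentation, and should be checked against the release you deploy.

```python
def rapids_conf(enabled=True):
    """Return Spark properties that switch on the RAPIDS SQL plugin.

    Assumption: the RAPIDS Accelerator jar is already available to Spark.
    """
    return {
        "spark.plugins": "com.nvidia.spark.SQLPlugin",
        "spark.rapids.sql.enabled": str(enabled).lower(),
    }


def to_submit_args(conf):
    """Flatten a property dict into spark-submit style --conf arguments."""
    args = []
    for key in sorted(conf):
        args += ["--conf", f"{key}={conf[key]}"]
    return args


submit_args = to_submit_args(rapids_conf())
```

The resulting list can be appended to an existing `spark-submit` command line; the application's SQL and DataFrame code stays as it is.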

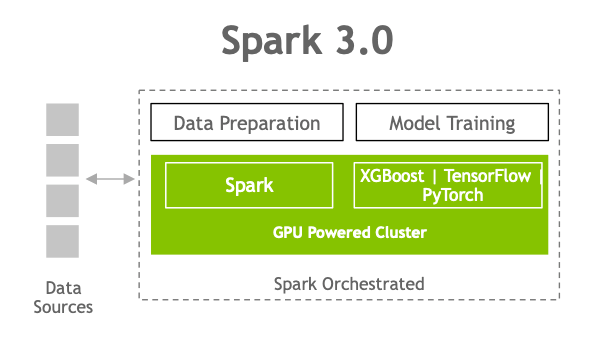

Apache Spark accelerated end-to-end AI platform stack.

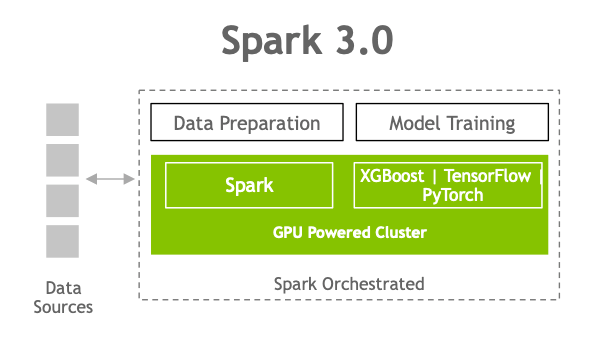


Accelerated SQL/DataFrame

Spark 3.0 supports SQL optimizer plug-ins that process data in columnar batches rather than rows. Columnar data is GPU-friendly, and this feature is what the RAPIDS Accelerator plugs into to accelerate SQL and DataFrame operators. With the RAPIDS Accelerator, the Catalyst query optimizer has been modified to identify operators within a query plan that can be accelerated with the RAPIDS API (mostly a one-to-one mapping) and to schedule those operators on GPUs within the Spark cluster when executing the query plan.
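The mostly one-to-one mapping can be pictured with a toy plan rewrite. This is only an illustration in plain Python, not the plugin's actual logic: the supported-operator set is an assumption, `CustomUdfExec` is a made-up operator standing in for anything without a GPU equivalent, and the real plugin makes the decision per operator, expression, and data type.

```python
# Assumed set of CPU operators that have a GPU counterpart (illustrative only).
GPU_SUPPORTED = {"ProjectExec", "FilterExec", "HashAggregateExec", "SortExec"}


def rewrite_plan(cpu_plan):
    """Map each supported CPU operator to a GPU operator, one to one.

    Operators without a GPU counterpart are left unchanged and run on the CPU.
    """
    return ["Gpu" + op if op in GPU_SUPPORTED else op for op in cpu_plan]


# A physical plan reduced to a flat list of operator names.
cpu_plan = ["FilterExec", "ProjectExec", "CustomUdfExec", "HashAggregateExec"]
gpu_plan = rewrite_plan(cpu_plan)
```

Here three of the four operators are replaced and the unsupported one falls back to the CPU, which is why a query can still run correctly when only part of its plan is accelerated.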

Accelerated Shuffle

Spark operations that sort, group, or join data by value have to move data between partitions when a new DataFrame is created from an existing one across stages. This process is called a shuffle, and it involves disk I/O, data serialization, and network I/O. The new RAPIDS Accelerator shuffle implementation uses UCX to optimize GPU data transfers, keeping as much data on the GPU as possible. It also finds the fastest path to move data between nodes by using the best of the available hardware resources, including bypassing the CPU for GPU-to-GPU memory transfers within and between nodes.
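To see why a shuffle moves data at all, consider a toy hash shuffle in plain Python. It is a sketch of the general idea only and says nothing about how the RAPIDS Accelerator or UCX implement transfers; the function names and sample rows are made up for illustration, and integer keys are used so that the partition assignment is deterministic.

```python
from collections import defaultdict


def hash_partition(rows, key, num_partitions):
    """Assign each row to an output partition by hashing its key value."""
    out = defaultdict(list)
    for row in rows:
        out[hash(row[key]) % num_partitions].append(row)
    return out


def shuffle(input_partitions, key, num_partitions):
    """Regroup rows so that equal keys end up in the same output partition.

    Returns the new partitions and the number of rows that changed partition.
    """
    output = [[] for _ in range(num_partitions)]
    moved = 0
    for src, rows in enumerate(input_partitions):
        for dst, chunk in hash_partition(rows, key, num_partitions).items():
            output[dst].extend(chunk)
            if dst != src:
                moved += len(chunk)  # these rows would cross a partition boundary
    return output, moved


input_partitions = [
    [{"k": 1, "v": "a"}, {"k": 2, "v": "b"}],
    [{"k": 1, "v": "c"}, {"k": 3, "v": "d"}],
]
output_partitions, rows_moved = shuffle(input_partitions, "k", 2)
```

Every row counted in `rows_moved` stands for data that, in a real cluster, may have to be serialized and sent over the network, which is the cost the accelerated shuffle aims to reduce.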

Accelerator-aware scheduling

As part of a major Spark initiative to better unify deep learning and data processing on Spark, GPUs are now a schedulable resource in Apache Spark 3.0. This allows Spark to schedule executors with a specified number of GPUs and allows users to specify how many GPUs each task requires. Spark conveys these resource requests to the underlying cluster manager (Kubernetes, YARN, or Standalone). Users can also configure a discovery script that detects which GPUs were assigned by the cluster manager. This greatly simplifies running ML applications that need GPUs, as users previously had to work around the lack of GPU scheduling in Spark applications.
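The scheduling settings described above can be sketched as a small set of Spark properties plus the JSON that a discovery script is expected to print. The property names are the Spark 3.0 resource-scheduling settings; the helper functions, the script path, and the slot estimate are assumptions made for illustration, so treat the arithmetic as a rough guide rather than Spark's exact scheduling rule.

```python
import json


def gpu_scheduling_conf(gpus_per_executor, gpus_per_task, discovery_script):
    """Spark 3.0 properties for requesting GPUs per executor and per task."""
    return {
        "spark.executor.resource.gpu.amount": str(gpus_per_executor),
        "spark.task.resource.gpu.amount": str(gpus_per_task),
        "spark.executor.resource.gpu.discoveryScript": discovery_script,
    }


def discovery_output(gpu_indices):
    """Build the JSON a discovery script writes to stdout: a name and addresses."""
    return json.dumps({"name": "gpu", "addresses": [str(i) for i in gpu_indices]})


def concurrent_tasks(executor_cores, cpus_per_task, gpus_per_executor, gpus_per_task):
    """Rough estimate of task slots per executor: the scarcer resource wins."""
    by_cpu = executor_cores // cpus_per_task
    by_gpu = int(gpus_per_executor / gpus_per_task)
    return min(by_cpu, by_gpu)


# Placeholder script path; point this at the script deployed on your cluster.
conf = gpu_scheduling_conf(1, 0.25, "./getGpusResources.sh")
slots = concurrent_tasks(executor_cores=8, cpus_per_task=1,
                         gpus_per_executor=1, gpus_per_task=0.25)
```

With one GPU per executor and a quarter of a GPU per task, at most four tasks share the GPU at once in this estimate, even though eight cores are available.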

Accelerated XGBoost

XGBoost is a scalable, distributed gradient-boosted decision tree (GBDT) ML library. It provides parallel tree boosting and is the leading ML library for regression, classification, and ranking problems. The RAPIDS team works closely with the Distributed Machine Learning Common (DMLC) XGBoost organization, and XGBoost now includes seamless, drop-in GPU acceleration. Spark 3.0 XGBoost is also now integrated with the RAPIDS Accelerator, improving performance, accuracy, and cost through GPU acceleration of Spark SQL/DataFrame operations, GPU acceleration of XGBoost training, and efficient GPU memory utilization with features stored optimally in memory.
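"Drop-in" acceleration here typically comes down to a parameter change rather than a rewrite of the training code. The sketch below assumes an XGBoost 1.x release, where `tree_method="gpu_hist"` selects the GPU histogram algorithm (newer releases express the same choice with `device="cuda"` and `tree_method="hist"`). The helper name and the parameter values are illustrative, not recommendations.

```python
def xgboost_params(use_gpu, num_workers):
    """Training parameters that differ only in tree_method between CPU and GPU.

    Assumption: XGBoost 1.x naming, where "gpu_hist" enables GPU training.
    """
    return {
        "objective": "binary:logistic",
        "max_depth": 8,       # illustrative value
        "num_round": 100,     # illustrative value
        "tree_method": "gpu_hist" if use_gpu else "hist",
        "num_workers": num_workers,
    }


cpu_params = xgboost_params(use_gpu=False, num_workers=4)
gpu_params = xgboost_params(use_gpu=True, num_workers=4)

# The only parameter that changes when moving training to GPUs.
changed = {k for k in cpu_params if cpu_params[k] != gpu_params[k]}
```

Everything else in the training setup (objective, depth, rounds, worker count) is carried over unchanged.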

In Spark 3.0, you can now have a single pipeline, from data ingest to data preparation to model training, on a GPU-powered cluster.

NVIDIA GPU Accelerated, End-to-End Data Science

RAPIDS abstracts the complexities of accelerated data science by building on and integrating with popular analytics ecosystems like PyData and Apache Spark, enabling users to see benefits immediately. Compared to similar CPU-based implementations, RAPIDS delivers 50x performance improvements for classical data analytics and machine learning (ML) processes at scale, which drastically reduces the total cost of ownership (TCO) for large data science operations.