Stream processing is the continuous processing of new data events as they’re received.

Stream Processing

What Is Stream Processing?

A stream is an unbounded sequence of events that go from producers to consumers. A lot of data is produced as a stream of events, for example financial transactions, sensor measurements, or web server logs.
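As a minimal sketch of this idea, the snippet below models a stream as a Python generator that never ends on its own, with a consumer that handles each event as it arrives. The `sensor_readings` and `consume` names, the event fields, and the temperature range are all hypothetical and chosen only for illustration; the `limit` argument exists solely so the demo terminates.

```python
import itertools
import random
from typing import Dict, Iterator, List


def sensor_readings(seed: int = 0) -> Iterator[Dict]:
    """Producer: yields an endless sequence of hypothetical sensor events."""
    rng = random.Random(seed)
    for event_id in itertools.count():  # unbounded: never stops by itself
        yield {"id": event_id, "temperature": round(rng.uniform(15.0, 30.0), 2)}


def consume(events: Iterator[Dict], limit: int) -> List[Dict]:
    """Consumer: handles events one at a time; `limit` only bounds the demo."""
    handled = []
    for event in itertools.islice(events, limit):
        handled.append(event)  # a real consumer would act on the event here
    return handled


first_five = consume(sensor_readings(), limit=5)
```

Because the generator is lazy, each event is produced only when the consumer asks for the next one, which is why an unbounded source can be processed without ever being held in memory as a whole.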

Stream processing libraries, such as Streamz, help build pipelines to manage streams of continuous data, allowing applications to respond to events as they occur.

Stream processing pipelines often involve multiple actions such as filters, aggregations, counting, analytics, transformations, enrichment, branching, joining, flow control, feedback into earlier stages, back pressure, and storage.
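Three of these actions (a filter, a transformation, and a windowed aggregation) can be sketched with plain Python generators chained together. This is an illustration only and does not use the Streamz API; the stage names, the `value` field, and the sample readings are assumptions made for the example.

```python
from collections import deque
from typing import Dict, Iterable, Iterator


def only_valid(events: Iterable[Dict]) -> Iterator[Dict]:
    """Filter: drop events that carry no numeric reading."""
    for event in events:
        if isinstance(event.get("value"), (int, float)):
            yield event


def to_celsius(events: Iterable[Dict]) -> Iterator[Dict]:
    """Transformation: convert a Fahrenheit reading to Celsius."""
    for event in events:
        yield {**event, "value": (event["value"] - 32) * 5 / 9}


def sliding_mean(events: Iterable[Dict], size: int) -> Iterator[float]:
    """Aggregation: mean over the most recent `size` readings."""
    window = deque(maxlen=size)  # old readings fall out automatically
    for event in events:
        window.append(event["value"])
        yield sum(window) / len(window)


# Hypothetical input; in practice this would be an unbounded source.
raw = [{"value": 32}, {"value": None}, {"value": 50}, {"value": 68}, {"value": 212}]

# Stages compose by wrapping one generator in the next.
averages = list(sliding_mean(to_celsius(only_valid(raw)), size=2))
```

Each stage pulls one event at a time from the stage before it, so the pipeline would work the same way on a source that never ends.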

Why Stream Processing?

The continuous processing of data streams is useful in many applications such as:

- Healthcare: continuous monitoring of instrument data

- Smart cities: traffic patterns and congestion management

- Manufacturing: optimization and predictive maintenance

- Transportation: optimized routes and fuel consumption

- Automobile: smart cars

- Cyber security, anomaly detection: web or network log processing

- Financial: stock market time series

- Machine learning: real-time predictions

- Advertising: location- or action-based advertising

The stream processing market is growing rapidly as businesses rely more heavily on real-time analytics, inferencing, monitoring, and more. Services built on streaming are now core components of daily business, and structured telemetry events and unstructured logs are growing at a rate of over 5X year-over-year. Streaming big data at this scale is complex and difficult to do efficiently, yet modern businesses depend on streaming that is both reliable and cost-effective.

Accelerating Stream Processing with GPUs

NVIDIA RAPIDS™ cuStreamz is the first GPU-accelerated streaming data processing library, built to increase stream processing throughput and lower the total cost of ownership (TCO). Production cuStreamz pipelines at NVIDIA have saved hundreds of thousands of dollars a year. Written in Python, cuStreamz is built on top of RAPIDS, a suite of GPU-accelerated data science libraries. End-to-end GPU acceleration is quickly becoming the standard, as shown by Flink adding GPU support, and NVIDIA is excited to be part of this trend.

cuStreamz is built on:

- Streamz, an open-source Python library that helps build pipelines to manage streams of continuous data;

- Dask, a robust and reliable scheduler to parallelize streaming workloads; and

- RAPIDS, a GPU-accelerated suite of libraries leveraged for streaming computations.

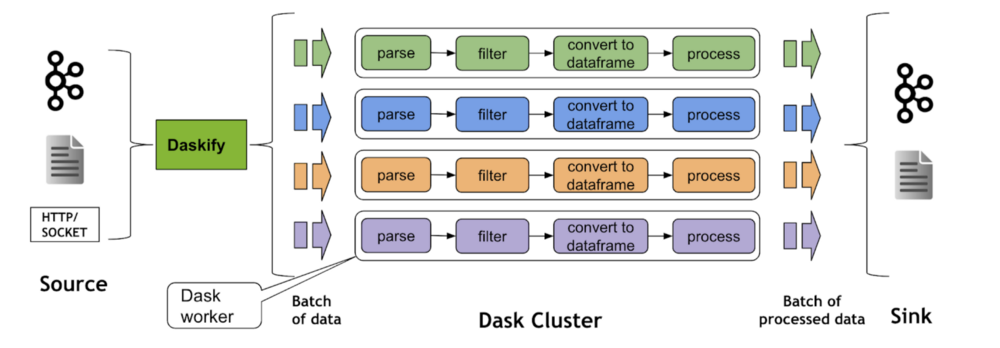

cuStreamz accelerates Streamz by using RAPIDS cuDF under the hood to run computations on streaming data on GPUs. cuStreamz also benefits from cuDF’s accelerated JSON, Parquet, and CSV readers and writers. The cuStreamz team has built an accelerated Kafka datasource connector that reads data from Kafka directly into cuDF dataframes at high speed, which delivers a considerable boost to end-to-end performance. Streaming pipelines can then be parallelized with Dask to run in distributed mode for better performance at scale.

The cuStreamz architecture is summarized at a high level in the accompanying diagram. cuStreamz is a bridge that connects Python streaming with GPUs and adds sophisticated and reliable streaming features like checkpointing and state management. cuStreamz provides the necessary building blocks to write streaming jobs that run reliably on GPUs with better performance at a lower cost.
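To make the idea of checkpointing concrete, here is a generic sketch in plain Python. It is not the cuStreamz mechanism or API: the `run_with_checkpoint` function, the dictionary used as a checkpoint store, and the `fail_at` crash simulation are all hypothetical. It shows only the general principle that progress is recorded after a batch has been processed, so a restarted job resumes from the last recorded position.

```python
from typing import Callable, Dict, Optional, Sequence


def run_with_checkpoint(
    log: Sequence[int],
    checkpoint: Dict[str, int],
    process: Callable[[Sequence[int]], None],
    batch_size: int,
    fail_at: Optional[int] = None,
) -> None:
    """Process `log` in batches, resuming from the last committed offset."""
    offset = checkpoint.get("offset", 0)
    while offset < len(log):
        batch = log[offset:offset + batch_size]
        # Simulated crash (demo only): raised before the batch is processed.
        if fail_at is not None and offset <= fail_at < offset + len(batch):
            raise RuntimeError("simulated crash")
        process(batch)
        offset += len(batch)
        checkpoint["offset"] = offset  # commit only after processing succeeds


log = list(range(10))  # stand-in for a message log such as a Kafka topic
checkpoint: Dict[str, int] = {}
seen: list = []

try:
    run_with_checkpoint(log, checkpoint, seen.extend, batch_size=4, fail_at=5)
except RuntimeError:
    pass  # the first batch was committed; the second was not

# Restart: processing resumes at the committed offset, not at the beginning.
run_with_checkpoint(log, checkpoint, seen.extend, batch_size=4)
```

In this sketch the simulated crash happens before a batch is processed, so nothing is handled twice. In a real system a crash between processing and committing would cause the batch to be replayed, which is why downstream steps are often designed to tolerate duplicates.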

GPU-Accelerated, End-to-End Data Science

The RAPIDS suite of open-source software libraries, built on NVIDIA® CUDA-X AI™, provides the ability to execute end-to-end data science and analytics pipelines entirely on GPUs. It relies on NVIDIA CUDA® primitives for low-level compute optimization, but exposes that GPU parallelism and high-bandwidth memory speed through user-friendly Python interfaces.

With the RAPIDS GPU DataFrame, data can be loaded onto GPUs using a Pandas-like interface, and then used for various connected machine learning and graph analytics algorithms without ever leaving the GPU. This level of interoperability is made possible through libraries like Apache Arrow. It allows acceleration for end-to-end pipelines—from data prep to machine learning to deep learning.

RAPIDS’s cuML machine learning algorithms and mathematical primitives follow a familiar scikit-learn-like API. Many popular algorithms like XGBoost are supported for both single-GPU and large data center deployments. For large datasets, these GPU-based implementations can complete 10-50X faster than their CPU equivalents.

RAPIDS supports device memory-sharing between many popular data science libraries. This keeps data on the GPU and avoids costly copying back and forth to host memory.

Next Steps

Find out more:

- Blog: cuStreamz: More Event-Stream Processing for Less with NVIDIA GPUs and RAPIDS Software

- Blog: Checkpointing Through Reference Counting in RAPIDS cuStreamz

- Blog: Running Streaming Word Count at Scale with RAPIDS and Dask on NVIDIA GPUs

- Blog: Accelerated Kafka Datasource

- Building an Accelerated Data Science Ecosystem: RAPIDS Hits Two Years

- GPU-Accelerated Data Science with RAPIDS | NVIDIA

- RAPIDS Accelerates Data Science End-to-End

- NVIDIA Developer Blog | Technical content: For developers, by developers