Sentiment analysis is the automated interpretation and classification of emotions (usually positive, negative, or neutral) from textual data such as written reviews and social media posts.

Sentiment Analysis

What is Sentiment Analysis?

Sentiment analysis is a subset of natural language processing (NLP) that uses machine learning to analyze and classify the emotional tone of text data. Basic models focus primarily on positive, negative, and neutral classification, but they may also account for the speaker’s underlying emotions (pleasure, anger, indignation) and intention to buy.

Context adds complexity to sentiment analysis. For example, the exclamation “nothing!” has a very different meaning depending on whether the speaker is commenting on what she likes or what she dislikes about a product. To understand the phrase “I like it,” the machine must untangle the context to work out what “it” refers to. Irony and sarcasm are also challenging because the speaker may say something positive while meaning the opposite.

There are several types of sentiment analysis. Aspect-based sentiment analysis goes one level deeper to determine which specific features or aspects of a product are generating positive, neutral, or negative emotion. Businesses can use this insight to identify shortcomings in products or, conversely, features that generate unexpected enthusiasm. Emotion analysis is a variation that attempts to determine the emotional intensity of a speaker toward a topic. Intent analysis estimates how likely the speaker is to take an action, such as making a purchase.
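To make aspect-based sentiment analysis concrete, here is a minimal lexicon-based sketch in Python. It is only an illustration, not how production systems (which typically use trained models) work: the word lists `POSITIVE`, `NEGATIVE`, and `ASPECTS` and the function `aspect_sentiment` are hypothetical, and the clause splitting is deliberately naive.

```python
import re

# Hypothetical toy lexicons; a real system would learn these or use a curated resource.
POSITIVE = {"great", "good", "excellent", "love", "fast"}
NEGATIVE = {"bad", "poor", "terrible", "slow", "hate"}
# Hypothetical aspect vocabulary for a phone review: aspect -> trigger words.
ASPECTS = {
    "battery": {"battery", "charge"},
    "screen": {"screen", "display"},
    "camera": {"camera", "photos"},
}

def polarity(score: int) -> str:
    """Map a net opinion-word count to a label."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

def aspect_sentiment(review: str) -> dict[str, str]:
    """Assign a polarity label to each aspect mentioned in the review."""
    results: dict[str, str] = {}
    # Split into clauses so opinion words attach to the aspect in the same clause.
    clauses = re.split(r"[.,;!?]|\bbut\b", review.lower())
    for clause in clauses:
        words = set(re.findall(r"[a-z']+", clause))
        score = len(words & POSITIVE) - len(words & NEGATIVE)
        for aspect, triggers in ASPECTS.items():
            if words & triggers:
                # If an aspect appears in several clauses, the last clause wins.
                results[aspect] = polarity(score)
    return results

print(aspect_sentiment("The screen is excellent, but the battery is terrible."))
# {'screen': 'positive', 'battery': 'negative'}
```

Splitting on punctuation and “but” keeps “excellent” attached to the screen and “terrible” attached to the battery; a single score for the whole review would have netted them out to a misleading neutral.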

Why Use Sentiment Analysis?

Businesses can use insights from sentiment analysis to improve their products, fine-tune marketing messages, correct misconceptions, and identify positive influencers.

Social media has revolutionized the way people make decisions about products and services. In markets like travel, hospitality, and consumer electronics, customer reviews are now considered at least as important as evaluations by professional reviewers. Sources like Amazon ratings and reviews on TripAdvisor, Google, and Yelp can make or break products. Less-structured outlets like blogs, Twitter, Facebook, and Instagram can also be useful sources of insight on customer sentiment, as well as feedback on the product features and services that inspire praise or condemnation.

Manually analyzing this abundance of text produced by customers or potential customers is time-consuming. Sentiment analysis of social media, emails, support tickets, chats, product reviews, and recommendations has become a valuable resource in almost every industry vertical. It helps businesses gain insights, understand customers, predict and enhance the customer experience, tailor marketing campaigns, and make better decisions.

Use Cases for Sentiment Analysis

Example use cases of sentiment analysis include:

- Product designers use sentiment analysis to determine which features are resonating with customers and thus deserve additional investment and attention. Conversely, they can learn when a product or feature is falling flat and adjust to prevent inventory from going into the bargain bin.

- Marketing organizations rely heavily on sentiment analysis to help them fine-tune messages, discover online influencers, and build positive word-of-mouth.

- Retail organizations mine sentiment to determine which products are likely to sell well and adjust inventory and promotions accordingly.

- Investors can identify new trends emerging in online conversations that foreshadow market opportunities.

- Politicians use it to sample voter attitudes on important issues.

How Does Sentiment Analysis Work?

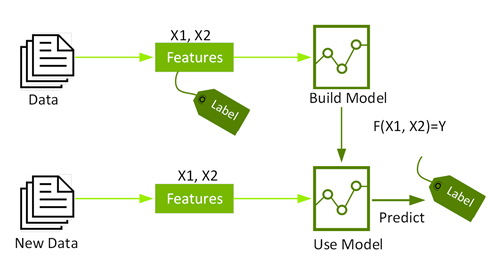

Machine Learning Feature Engineering

Feature engineering is the process of transforming raw data into inputs for a machine learning algorithm. To be used by machine learning algorithms, features must be put into feature vectors, which are vectors of numbers representing the value of each feature. For sentiment analysis, textual data must be converted into word vectors, which are vectors of numbers representing the value for each word. Input text can be encoded into word vectors using counting techniques such as Bag of Words (BoW), bag-of-ngrams, or Term Frequency-Inverse Document Frequency (TF-IDF).
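The counting techniques above can be sketched in plain Python. This is an illustrative implementation, not a library API: `tokenize`, `ngrams`, `bag_of_ngrams`, and `tf_idf` are hypothetical helpers, the tokenizer only lowercases and splits on whitespace, and the TF-IDF weighting uses the simple variant idf = log(N / df) (libraries such as scikit-learn apply smoothing and normalization by default).

```python
import math
from collections import Counter

def tokenize(text: str) -> list[str]:
    # Naive tokenizer: lowercase and split on whitespace (no punctuation handling).
    return text.lower().split()

def ngrams(tokens: list[str], n: int) -> list[str]:
    # Contiguous n-token sequences, joined with spaces.
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def bag_of_ngrams(docs: list[str], n_max: int = 1) -> tuple[list[str], list[list[int]]]:
    """Return a sorted vocabulary and one count vector per document (plain BoW when n_max=1)."""
    counts = []
    for doc in docs:
        tokens = tokenize(doc)
        terms = [g for n in range(1, n_max + 1) for g in ngrams(tokens, n)]
        counts.append(Counter(terms))
    vocab = sorted(set().union(*counts))
    return vocab, [[c[term] for term in vocab] for c in counts]

def tf_idf(vocab: list[str], vectors: list[list[int]]) -> list[list[float]]:
    """Weight raw counts by idf = log(N / df), where df is the number of documents containing the term."""
    n_docs = len(vectors)
    df = [sum(1 for v in vectors if v[j] > 0) for j in range(len(vocab))]
    idf = [math.log(n_docs / d) for d in df]
    return [[count * w for count, w in zip(v, idf)] for v in vectors]

docs = ["good movie", "not good movie", "bad movie"]
vocab, bow = bag_of_ngrams(docs)
print(vocab)  # ['bad', 'good', 'movie', 'not']
print(bow)    # [[0, 1, 1, 0], [0, 1, 1, 1], [1, 0, 1, 0]]
print(tf_idf(vocab, bow))
```

With `n_max=2` the vocabulary also contains bigrams such as “not good,” which lets a downstream classifier tell negation apart from praise. In the TF-IDF output, “movie” gets weight 0 because it appears in every document and so says nothing about sentiment.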

Sentiment Classification Using Supervised Machine Learning

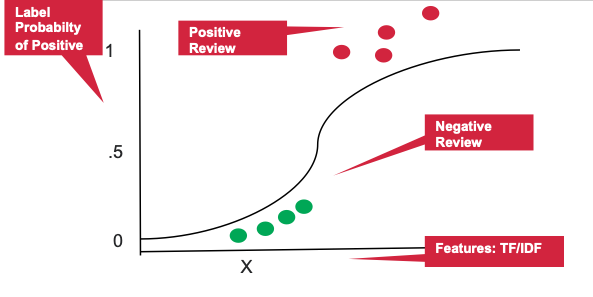

After the input text has been converted into word vectors, classification machine learning algorithms can be used to classify the sentiment. Classification is a family of supervised machine learning algorithms that identifies which category an item belongs to (such as whether a text is negative or positive) based on labeled data (such as text labeled as positive or negative).
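As a minimal sketch of supervised classification, the Python below trains a logistic regression (one of the algorithms listed below) on a handful of labeled sentences using bag-of-words counts. The training sentences, learning rate, and epoch count are hypothetical choices for illustration; a real project would use a large labeled corpus and a library implementation.

```python
import math
from collections import Counter

# Hypothetical labeled training data: 1 = positive, 0 = negative.
TRAIN = [
    ("i love this phone", 1),
    ("great battery and great screen", 1),
    ("excellent value", 1),
    ("i hate this phone", 0),
    ("terrible battery", 0),
    ("poor screen and bad value", 0),
]

def features(text: str) -> Counter:
    """Sparse bag-of-words vector: word -> count."""
    return Counter(text.lower().split())

def sigmoid(z: float) -> float:
    """Numerically stable logistic function 1 / (1 + e^-z)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def score(weights: dict, bias: float, x: Counter) -> float:
    # Linear score: bias + sum of weight * count over the words present.
    return bias + sum(weights.get(w, 0.0) * c for w, c in x.items())

def train(data, epochs: int = 200, lr: float = 0.1):
    """Fit weights with stochastic gradient descent on the log-loss."""
    weights, bias = {}, 0.0
    for _ in range(epochs):
        for text, label in data:
            x = features(text)
            error = sigmoid(score(weights, bias, x)) - label  # d(log-loss)/d(score)
            bias -= lr * error
            for w, c in x.items():
                weights[w] = weights.get(w, 0.0) - lr * error * c
    return weights, bias

def predict_proba(weights: dict, bias: float, text: str) -> float:
    """Estimated probability that the text is positive."""
    return sigmoid(score(weights, bias, features(text)))

weights, bias = train(TRAIN)
print(round(predict_proba(weights, bias, "great phone"), 3))
```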

Classification machine learning algorithms that can be used for sentiment analysis include:

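- Naïve Bayes is a family of probabilistic algorithms that apply Bayes’ theorem, with the “naïve” assumption that features are independent given the class, to determine the conditional probability of each class given the input data.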

- Support vector machines find a hyperplane in an N-dimensional space (where N is the number of features) that separates the classes of data points with the widest possible margin.

- Logistic regression uses a logistic function to model the probability of a certain class.
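The Naïve Bayes approach above can be sketched as a multinomial Naïve Bayes classifier with Laplace (add-one) smoothing, a common variant for word counts. The function names and the four training sentences are hypothetical, and the sketch simply ignores words that never appeared in training.

```python
import math
from collections import Counter, defaultdict

def train_nb(docs, alpha: float = 1.0):
    """Estimate log class priors and Laplace-smoothed log word likelihoods."""
    class_counts = Counter(label for _, label in docs)
    word_counts = defaultdict(Counter)  # class -> word -> count
    vocab = set()
    for text, label in docs:
        tokens = text.lower().split()
        word_counts[label].update(tokens)
        vocab.update(tokens)
    log_prior = {c: math.log(n / len(docs)) for c, n in class_counts.items()}
    log_lik = {}
    for c in class_counts:
        denom = sum(word_counts[c].values()) + alpha * len(vocab)
        log_lik[c] = {w: math.log((word_counts[c][w] + alpha) / denom) for w in vocab}
    return log_prior, log_lik, vocab

def classify_nb(model, text: str) -> str:
    """Return the class c maximizing log P(c) + sum of log P(word | c)."""
    log_prior, log_lik, vocab = model
    tokens = [t for t in text.lower().split() if t in vocab]  # skip unseen words
    scores = {c: log_prior[c] + sum(log_lik[c][t] for t in tokens) for c in log_prior}
    return max(scores, key=scores.get)

# Hypothetical labeled reviews.
docs = [
    ("loved the acting and the plot", "positive"),
    ("a wonderful heartfelt film", "positive"),
    ("boring plot and weak acting", "negative"),
    ("a dull and boring film", "negative"),
]
model = train_nb(docs)
print(classify_nb(model, "wonderful acting"))  # positive
print(classify_nb(model, "boring and dull"))   # negative
```

Working in log space turns the product of many small probabilities into a sum, which avoids floating-point underflow on long documents.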

Sentiment Analysis Using Deep Learning

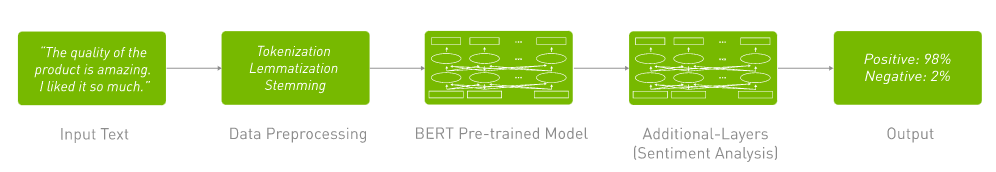

Deep learning (DL) is a subset of machine learning (ML) that uses multi-layered artificial neural networks to deliver state-of-the-art accuracy in tasks such as NLP. DL word embedding techniques such as Word2Vec encode words in meaningful ways by learning word associations, meaning, semantics, and syntax. DL algorithms also enable end-to-end training of NLP models without the need to hand-engineer features from raw input data.
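Word2Vec learns word associations from co-occurrence: in its skip-gram variant, a shallow network is trained to predict the words surrounding each word. The sketch below shows only the first step, generating the (center, context) training pairs from a sliding window; the network that turns these pairs into embeddings is omitted, and `skipgram_pairs` is a hypothetical helper.

```python
def skipgram_pairs(tokens: list[str], window: int = 2) -> list[tuple[str, str]]:
    """Return (center, context) pairs for every word within `window` positions of each center word."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs

print(skipgram_pairs("the food was great".split(), window=1))
# [('the', 'food'), ('food', 'the'), ('food', 'was'), ('was', 'food'), ('was', 'great'), ('great', 'was')]
```

Words that show up in similar contexts (for example “great” and “excellent” next to “food was”) produce similar training pairs, which is what pushes their learned vectors close together.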

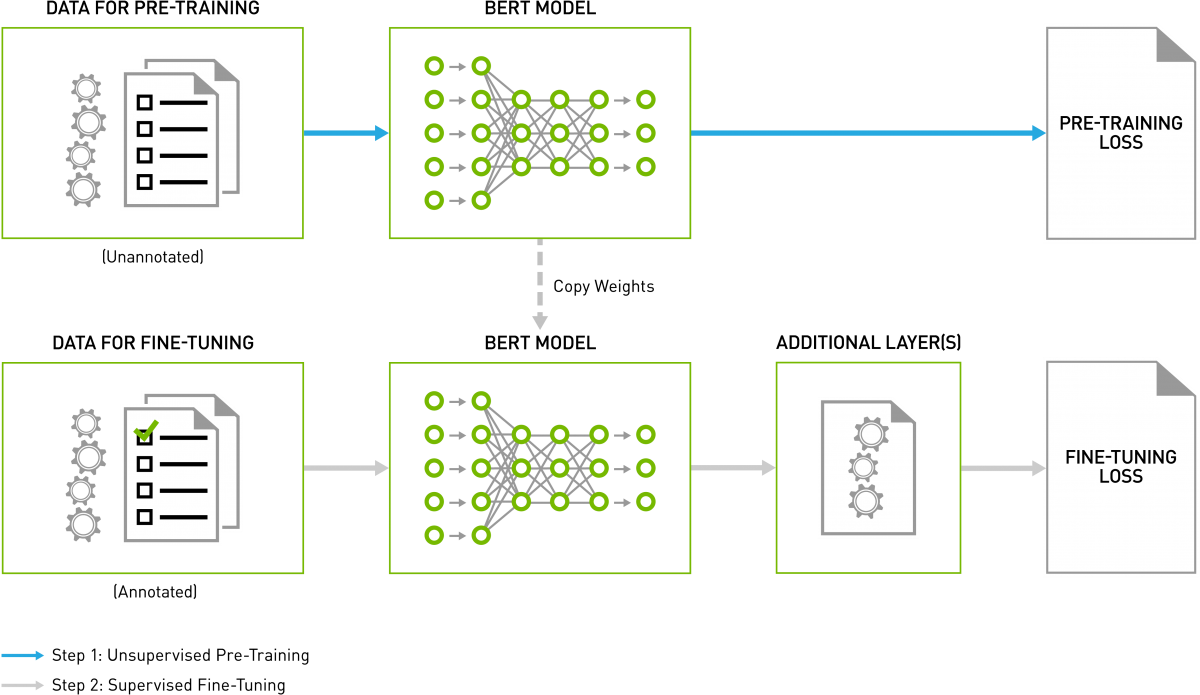

There are several families of deep learning algorithms for language. Recurrent neural networks (RNNs) are the mathematical engines that parse language patterns and other sequential data. They’re the natural language processing brains that give ears and speech to Amazon’s Alexa, and they’re also used in language translation, stock prediction, and algorithmic trading. Transformer deep learning models, such as BERT (Bidirectional Encoder Representations from Transformers), are an alternative to RNNs that apply an attention technique: each word in a sentence is interpreted by focusing attention on the most relevant words that come before and after it.

BERT revolutionized NLP by offering accuracy comparable to human baselines on benchmarks for intent recognition, sentiment analysis, and more. It’s deeply bidirectional and can understand and retain context better than earlier text encoding mechanisms. A key challenge with training language models is the lack of labeled data. BERT addresses this by pretraining on self-supervised tasks over large unlabeled text corpora, such as BooksCorpus and English Wikipedia, and is then fine-tuned on smaller labeled datasets for tasks such as sentiment analysis.
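The attention technique described above can be sketched as scaled dot-product attention, the core operation in Transformers: each query vector is scored against every key vector, the scores are normalized with softmax, and the output is a weighted average of the value vectors. This is a simplified illustration that assumes raw token vectors serve directly as queries, keys, and values; BERT additionally uses learned projections, multiple attention heads, and many stacked layers.

```python
import math

def softmax(xs: list[float]) -> list[float]:
    """Normalize scores into positive weights that sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, on lists of row vectors."""
    d = len(K[0])
    outputs, all_weights = [], []
    for q in Q:
        # Score this query against every key: words both before and after it.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in K]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
        all_weights.append(weights)
    return outputs, all_weights

# Toy self-attention over three 2-dimensional token vectors (hypothetical values).
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(X, X, X)
for row in weights:
    print([round(w, 3) for w in row])
```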

GPUs: Accelerating NLP and Sentiment Analysis

Recent and ongoing breakthroughs in natural language processing have driven the field’s growth, not the least of which is the deployment of GPUs to crunch through increasingly massive and complex language models.

A GPU is composed of hundreds or thousands of cores that can handle thousands of threads in parallel. GPUs have become the platform of choice for training ML and DL models and performing inference because they can deliver 10X higher performance than CPU-only platforms on these workloads.

State-of-the-art deep learning neural networks can have from millions to well over one billion parameters to adjust via backpropagation. They also require a large amount of training data to achieve high accuracy, meaning hundreds of thousands to millions of input samples must be run through both a forward and a backward pass. Because neural nets are built from large numbers of identical neurons, they’re highly parallel by nature. This parallelism maps naturally to GPUs, providing a significant computation speed-up over CPU-only training, which is why GPUs dominate the training of large, complex neural network-based systems. The parallel nature of inference operations also lends itself well to execution on GPUs. In addition, Transformer-based deep learning models such as BERT don’t need to process a sequence one token at a time, as RNNs do, which allows much more parallelization and shorter training times on GPUs.
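The difference in parallelism between RNNs and Transformers shows up even in a toy model. In this sketch (scalar weights instead of matrices; `rnn_forward` and `positionwise` are hypothetical names), each RNN hidden state depends on the previous one, so the loop over time steps cannot be split across parallel workers. A position-wise transform, like the per-position layers in a Transformer, computes each output from its own input alone, so every position can be processed at once.

```python
import math

def rnn_forward(inputs: list[float], w_x: float, w_h: float, b: float = 0.0) -> list[float]:
    """Toy scalar RNN: h_t = tanh(w_x * x_t + w_h * h_(t-1) + b)."""
    h = 0.0
    states = []
    for x in inputs:
        # Step t cannot start until step t-1 has produced h: inherently sequential.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

def positionwise(inputs: list[float], w_x: float, b: float = 0.0) -> list[float]:
    """Each output depends only on its own input, so all positions could run in parallel."""
    return [math.tanh(w_x * x + b) for x in inputs]

seq = [1.0, 0.0, 0.0]
print(rnn_forward(seq, w_x=1.0, w_h=0.5))  # later states still carry information from the first input
print(positionwise(seq, w_x=1.0))          # later outputs are unaffected by the first input
```

The trade-off is that the position-wise layer has no memory on its own; Transformers recover context through attention, which compares all positions simultaneously instead of stepping through them.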

NVIDIA GPU-Accelerated AI Libraries

With NVIDIA GPUs and CUDA-X AI™ libraries, massive, state-of-the-art language models can be rapidly trained and optimized to run inference in just a couple of milliseconds, or thousandths of a second. This is a major stride towards ending the trade-off between an AI model that’s fast versus one that’s large and complex. The parallel processing capabilities and Tensor Core architecture of NVIDIA GPUs allow for higher throughput and scalability when working with complex language models—enabling record-setting performance for both the training and inference of BERT.

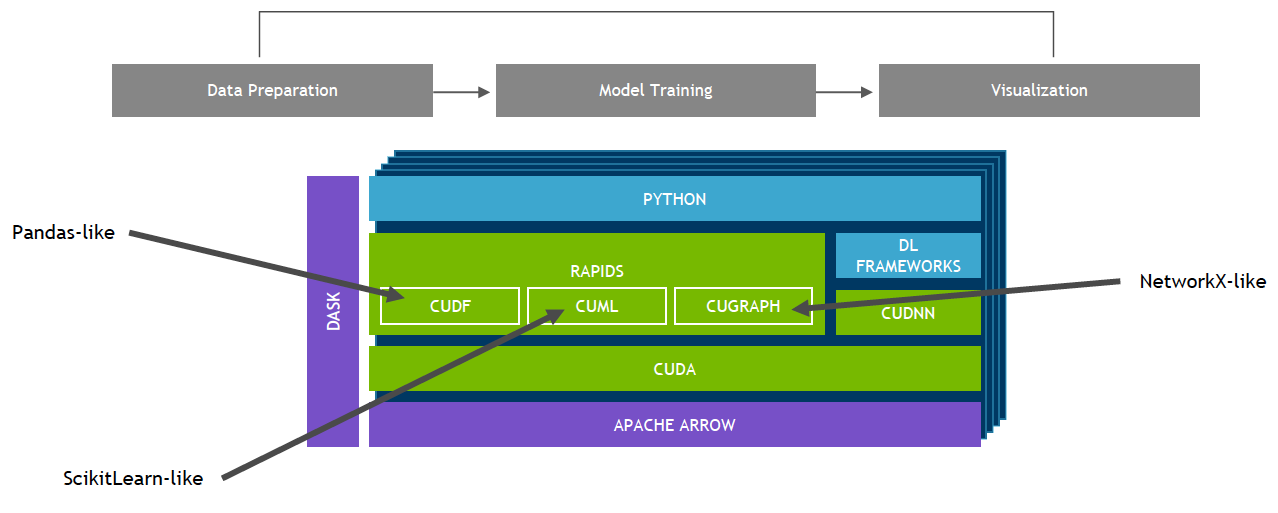

NVIDIA GPU-Accelerated, End-to-End Data Science

The NVIDIA RAPIDS™ suite of software libraries, built on CUDA-X AI, gives you the freedom to execute end-to-end data science and analytics pipelines entirely on GPUs. It relies on NVIDIA® CUDA® primitives for low-level compute optimization, but exposes that GPU parallelism and high-bandwidth memory speed through user-friendly Python interfaces.

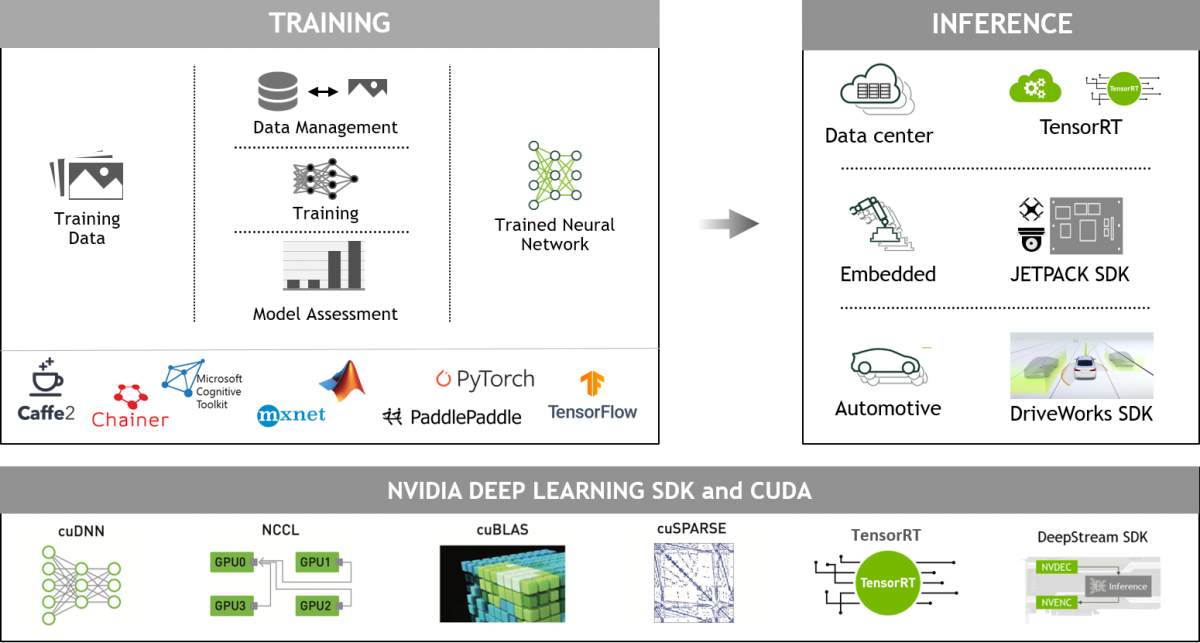

NVIDIA GPU-Accelerated Deep Learning Frameworks

GPU-accelerated DL frameworks offer flexibility to design and train custom deep neural networks and provide interfaces to commonly used programming languages such as Python and C/C++. Widely used deep learning frameworks such as MXNet, PyTorch, TensorFlow, and others rely on NVIDIA GPU-accelerated libraries to deliver high-performance, multi-GPU accelerated training.

Next Steps

- NVIDIA provides optimized software stacks to accelerate training and inference phases of the deep learning workflow. Learn more on the NVIDIA deep learning home page.

- Developers, researchers, and data scientists can get easy access to NVIDIA optimized deep learning framework containers with deep learning examples that are performance-tuned and tested for NVIDIA GPUs. This eliminates the need to manage packages and dependencies or build deep learning frameworks from source. Visit NVIDIA NGC to learn more and get started.

- Designed specifically for deep learning, Tensor Cores on NVIDIA Volta™ and Turing™ GPUs deliver significantly higher training and inference performance. Learn more about accessing reference implementations.

- The NVIDIA Deep Learning Institute (DLI) offers hands-on training for developers, data scientists, and researchers in AI and accelerated computing.

- For developer news and resources, check out the NVIDIA developers site.

Find out about:

- Deep Learning NLP Interprets Words with Multiple Meanings

- Snark Bite: Like an AI Could Ever Spot Sarcasm

- Deep Learning Examples

- NLP and Text Processing with RAPIDS: Now Simpler and Faster

- The NVIDIA Conversational AI and Conversational AI SDK web page

- BERT QA in TensorFlow with NVIDIA GPUs (Blog)

- BERT Does Europe: AI Language Model Learns German, Swedish (Blog)

- NLP NeMo Code Samples

- Train BERT Model with PyTorch Code Sample