Robot Learning

Train robot policies in simulation.

Workloads

Accelerated Computing Tools & Techniques

Data Center / Cloud

Robotics

Simulation / Modeling / Design

Industries

Healthcare and Life Sciences

Manufacturing

Retail/Consumer Packaged Goods

Smart Cities/Spaces

Business Goal

Innovation

Return on Investment

Products

NVIDIA Isaac GR00T

NVIDIA Isaac Lab

NVIDIA Isaac Sim

NVIDIA Jetson AGX

NVIDIA Omniverse


Build Generalist Robot Policies

Preprogrammed robots operate using fixed instructions within set environments, which limits their adaptability to unexpected changes.

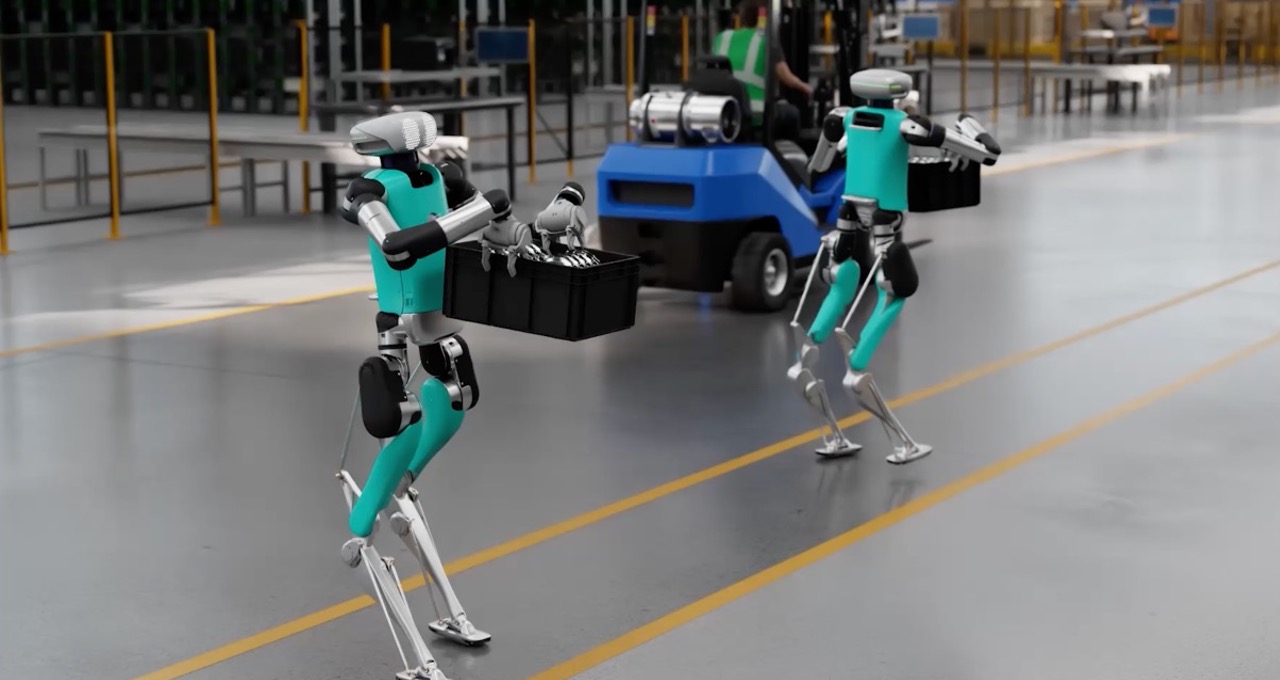

AI-driven robots address these limitations through simulation-based learning, which enables them to autonomously perceive, plan, and act in dynamic conditions. With robot learning, they can acquire and refine new skills through learned policies (sets of behaviors for navigation, manipulation, and other tasks) that improve their decision-making across varied situations.

Benefits of Simulation-Based Robot Learning

Flexibility and Scalability

Iterate on, refine, and deploy robot policies for real-world scenarios using a variety of data sources, from data captured on real robots to synthetic data generated in simulation. This works for any robot embodiment, including autonomous mobile robots (AMRs), robotic arms, and humanoid robots. A sim-first approach also lets you quickly train hundreds or thousands of robot instances in parallel.
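To make the parallel-training idea concrete, here is a minimal sketch in which one shared policy controls many independent simulated robots stepped as a batch, followed by a crude grid search over the policy's single parameter. Everything here is hypothetical: `BatchedPointEnv` is a toy 1D point robot (not an Isaac Lab API), the policy is a linear controller `action = -gain * x`, and the Python loop over instances only mimics the batched interface that GPU-accelerated simulators provide.

```python
import random


class BatchedPointEnv:
    """Hypothetical toy simulator: num_envs independent 1D point robots that should reach x = 0.

    GPU simulators step all instances as one batched operation; this pure-Python
    loop only mimics that batched interface for illustration.
    """

    def __init__(self, num_envs, dt=0.1, seed=0):
        rng = random.Random(seed)
        self.dt = dt
        # Each instance starts at a different random position in [-1, 1].
        self.positions = [rng.uniform(-1.0, 1.0) for _ in range(num_envs)]

    def step(self, actions):
        """Apply one velocity command per instance; return observations and rewards."""
        rewards = []
        for i, action in enumerate(actions):
            velocity = max(-1.0, min(1.0, action))  # clip to hypothetical actuator limits
            self.positions[i] += velocity * self.dt
            rewards.append(-abs(self.positions[i]))  # penalty grows with distance to the goal
        return list(self.positions), rewards


def average_return(gain, num_envs=1000, steps=50, seed=0):
    """Roll out the linear policy action = -gain * x on every instance and average the returns."""
    env = BatchedPointEnv(num_envs, seed=seed)
    observations = list(env.positions)
    totals = [0.0] * num_envs
    for _ in range(steps):
        actions = [-gain * x for x in observations]  # the same policy is shared by all instances
        observations, rewards = env.step(actions)
        totals = [t + r for t, r in zip(totals, rewards)]
    return sum(totals) / num_envs


# Crude "training": evaluate candidate policy parameters in simulation and keep the best one.
candidates = [0.0, 1.0, 2.0, 5.0, 10.0]
best_gain = max(candidates, key=average_return)
print(best_gain, average_return(best_gain))
```

Because every candidate gain is evaluated on the same 1,000 seeded start positions, the comparison between candidates is fair. A real training pipeline would typically replace the grid search with a learning algorithm such as reinforcement learning and run the instances on the GPU rather than in a Python loop.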

Accelerated Skill Development

Train robots in simulated environments to adapt to new task variations without reprogramming physical robot hardware.

Physically Accurate Environments

Model physical factors such as object interactions (rigid or deformable bodies) and friction to narrow the sim-to-real gap.
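As a small illustration of why physical parameters matter for the sim-to-real gap, the sketch below simulates a block sliding to rest under kinetic (Coulomb) friction. The function name, the time step, and the two friction coefficients are hypothetical values chosen for illustration; a full physics engine models contact and friction far more elaborately.

```python
def slide_distance(v0, mu, g=9.81, dt=1e-3):
    """Distance (m) a block travels on a flat surface before kinetic friction stops it.

    Toy model: friction decelerates the block at mu * g and never pushes it backward.
    """
    if v0 <= 0.0 or mu <= 0.0:
        raise ValueError("v0 and mu must be positive")
    decel = mu * g
    x, v = 0.0, v0
    while v > 0.0:
        if v - decel * dt <= 0.0:
            # The block stops partway through this step; integrate only up to the stop.
            t_stop = v / decel
            x += v * t_stop - 0.5 * decel * t_stop ** 2
            v = 0.0
        else:
            # Constant deceleration over a full step, integrated exactly.
            x += v * dt - 0.5 * decel * dt ** 2
            v -= decel * dt
    return x


# Hypothetical coefficients: friction measured on real hardware vs. a mis-tuned simulator.
measured_mu, simulated_mu = 0.5, 0.3
print(slide_distance(2.0, measured_mu))
print(slide_distance(2.0, simulated_mu))
```

With an initial speed of 2 m/s, the block travels about 0.41 m with μ = 0.5 but about 0.68 m with μ = 0.3, matching the closed-form distance v₀²/(2μg). A policy tuned in a simulator that underestimates friction would therefore expect objects to slide noticeably farther than they do in the real world.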

Safe Proving Environment

Test potentially hazardous scenarios without risking human safety or damaging equipment.

Reduced Costs

Reduce the burden and cost of real-world data collection and labeling by generating large amounts of synthetic data, validating trained robot policies in simulation, and deploying them to robots faster.

Robot Learning Algorithms

Robot learning algorithms, such as imitation learning and reinforcement learning, can help robots generalize learned skills and improve their performance in changing or novel environments. Common learning techniques include:

- Reinforcement learning: A trial-and-error approach in which the robot receives a reward or a penalty based on the actions it takes.

- Imitation learning: The robot can learn from human demonstrations of tasks.

- Supervised learning: The robot can be trained using labeled data to learn specific tasks.

- Diffusion policy: The robot uses a generative diffusion model that produces actions by iteratively refining random noise into action sequences suited to the desired outcome.

- Self-supervised learning: When labeled data is limited, the robot generates its own training labels from unlabeled data to extract meaningful information.
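The first two techniques in the list can be contrasted on a deliberately tiny, hypothetical task: a five-cell corridor in which the robot must reach the rightmost cell. The sketch uses tabular Q-learning for reinforcement learning and majority-vote behavior cloning (the maximum-likelihood per-state policy) for imitation learning. These are textbook stand-ins chosen for brevity, not the methods used by NVIDIA Isaac Lab or Isaac GR00T, and the environment, reward values, and demonstrations are all assumptions.

```python
import random
from collections import Counter, defaultdict

N_STATES = 5        # hypothetical corridor: cells 0..4, with the goal at cell 4
ACTIONS = (-1, +1)  # move one cell left or right


def step(state, action):
    """Toy environment: +1 reward for reaching the goal, a small penalty for every other move."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else -0.01
    return next_state, reward, done


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Reinforcement learning (tabular Q-learning): learn from rewards and penalties by trial and error."""
    rng = random.Random(seed)
    q = defaultdict(float)  # (state, action) -> estimated discounted return
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore: try a random action
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploit current estimates
            next_state, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    # Greedy policy: the highest-valued action in each non-goal state.
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}


def behavior_cloning(demonstrations):
    """Imitation learning (tabular behavior cloning): copy the most frequently demonstrated action per state."""
    votes = defaultdict(Counter)
    for state, action in demonstrations:
        votes[state][action] += 1
    return {s: counts.most_common(1)[0][0] for s, counts in votes.items()}


# Hypothetical (state, action) pairs from two human demonstrations; one step is a mistake.
DEMONSTRATIONS = [(0, +1), (1, +1), (2, +1), (3, +1),
                  (0, +1), (1, +1), (2, -1), (1, +1), (2, +1), (3, +1)]

rl_policy = q_learning()
il_policy = behavior_cloning(DEMONSTRATIONS)
print(rl_policy)
print(il_policy)
```

Both policies should map every non-goal cell to `+1` (move right). The design difference is the data each needs: Q-learning ran hundreds of simulated episodes and used only a reward signal, whereas behavior cloning needed no environment interaction but can only be as good as the demonstrations it copies. Here the majority vote outweighs the single mistaken step.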
